In October 2016, we launched a request for proposals (RFP) in which applicants to the 2016 NIH Director’s Transformative Research Awards1 (“TRA”) program whose proposals had not received funding could re-submit their applications to us for consideration. We viewed this RFP as a way both to identify particular high-risk, high-reward projects within our funding category of scientific research and to test our hypothesis that high-risk, high-reward research is underfunded in general.

We’d like to express our gratitude to Ravi Basavappa at the NIH, who provided information about the TRA program. We’d also like to thank all the applicants, who trusted us with their proposals and reviews, and our external reviewers, who provided valuable insights.

Ultimately, we received 120 funding applications that had been declined by the 2016 TRA program. Our review and selection process narrowed this field to four projects that we decided to fund, for a combined total of $10,800,544. The projects we decided to fund were as follows:

- $6,421,402 to Arizona State University to support a canine clinical trial assessing the effectiveness of a multivalent cancer vaccine.

- $2,054,142 to the University of Notre Dame over three years for a nanopore protein sequencing project.

- $1,500,000 to Rockefeller University for research on viral histone mimics.

- $825,000 to the University of California, San Francisco over three years for an organ regenerative surgery project.

In addition to identifying potentially impactful funding opportunities, the RFP and our discussions with the NIH led to several lessons we believe will be useful for future investigations (detailed below).

1 Background

1.1 The cause

These grants fall within our interest in funding scientific research. It has been argued, plausibly in our opinion, that high-risk, high-return opportunities are relatively underfunded by both philanthropists and U.S. government entities.2

1.2 The NIH Director’s Transformative Research Award Program

In response to a perceived deficit of high-risk, high-reward funding opportunities, the NIH launched the TRA program in 2009, with an explicit goal to support “exceptionally innovative, high-risk, and/or unconventional research projects that have the potential to create or overturn fundamental paradigms or otherwise have unusually broad impact” in the areas of biomedical or behavioral research.3

According to our conversations with the NIH, the TRA program in recent years has received approximately 150-300 proposals per year and funded between 8 and 12 of them, though more proposals were considered meritorious by peer review. Grant amounts for selected projects vary widely, ranging roughly from $250,000 to $3.5 million per project, and there is no cap on the funding for any one grant.
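To put these figures in perspective, the implied funding rate can be bounded with a quick calculation. This is only a rough sketch: it assumes the extremes of the two ranges above can be paired freely (fewest awards with most proposals, and vice versa), which the NIH figures do not confirm, and the function name is our own.

```python
def funding_rate_bounds(proposals_range, funded_range):
    """Return the (lowest, highest) funding rate implied by two ranges.

    Assumption: the endpoints of the two ranges can be combined freely,
    so the result is an outer bound rather than an observed rate.
    """
    low_proposals, high_proposals = proposals_range
    low_funded, high_funded = funded_range
    lowest = low_funded / high_proposals   # fewest awards, most proposals
    highest = high_funded / low_proposals  # most awards, fewest proposals
    return lowest, highest


lowest, highest = funding_rate_bounds((150, 300), (8, 12))
print(f"Implied TRA funding rate: {lowest:.1%} to {highest:.1%}")
# prints "Implied TRA funding rate: 2.7% to 8.0%"
```

Even at the upper bound, fewer than one in ten proposals would be funded in a given year.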

Based on the above, we asked the NIH to inform those 2016 TRA applicants whose applications were not funded of the opportunity to re-submit their applications to us through an Open Philanthropy Project RFP.

2 Rationale for the Open Philanthropy Project RFP

We decided to pursue this project because:

- As stated above, we are sympathetic to the idea that high-risk, high-reward research is underfunded and therefore found it plausible that this strategy would yield proposals we would be excited to fund.

- Funding some work under this project would be likely to give us a sense of what to expect in the future if we issued our own call for high-risk, high-return proposals.

- A small fraction of TRA proposals are funded, consistent with the idea that research of this kind is underfunded.

- We knew of at least one Open Philanthropy Project grantee whose project had been funded by us but originally declined by the TRA program.

- We wanted to learn about research projects from many domains, in addition to those that our scientific advisors have investigated.

3 Results of the Open Philanthropy Project RFP

3.1 Initial idea and development process

Once we decided that an RFP could be worth pursuing, we developed a vision document, then contacted TRA organizers at the NIH, who agreed to inform declined 2016 TRA program applicants of the opportunity to re-submit their proposals to our RFP. We then moved forward with finalizing project details such as the timeline, review criteria, and the outreach and submission process. Once the details were finalized, the NIH sent an email to declined 2016 TRA applicants informing them of the opportunity to re-apply to our RFP.

Our RFP opened on October 26, 2016. Applicants were instructed to re-submit their original TRA proposals, along with NIH reviewer notes (when applicable), through a website we built especially for the RFP. The website included a brief RFP description, a frequently asked questions section, and a contact form. The deadline for submission was midnight on November 15.

3.2 Grant selection process

By the submission deadline, we had received 120 applications through the RFP, representing over half of the original 2016 TRA program applications. Our review process consisted of three stages, and our core review team included Chris Somerville, Heather Youngs, Stephan Guyenet, and Daniel Martin-Alarcon, with additional input from Nick Beckstead and Claire Zabel.

The review process proceeded roughly as follows:

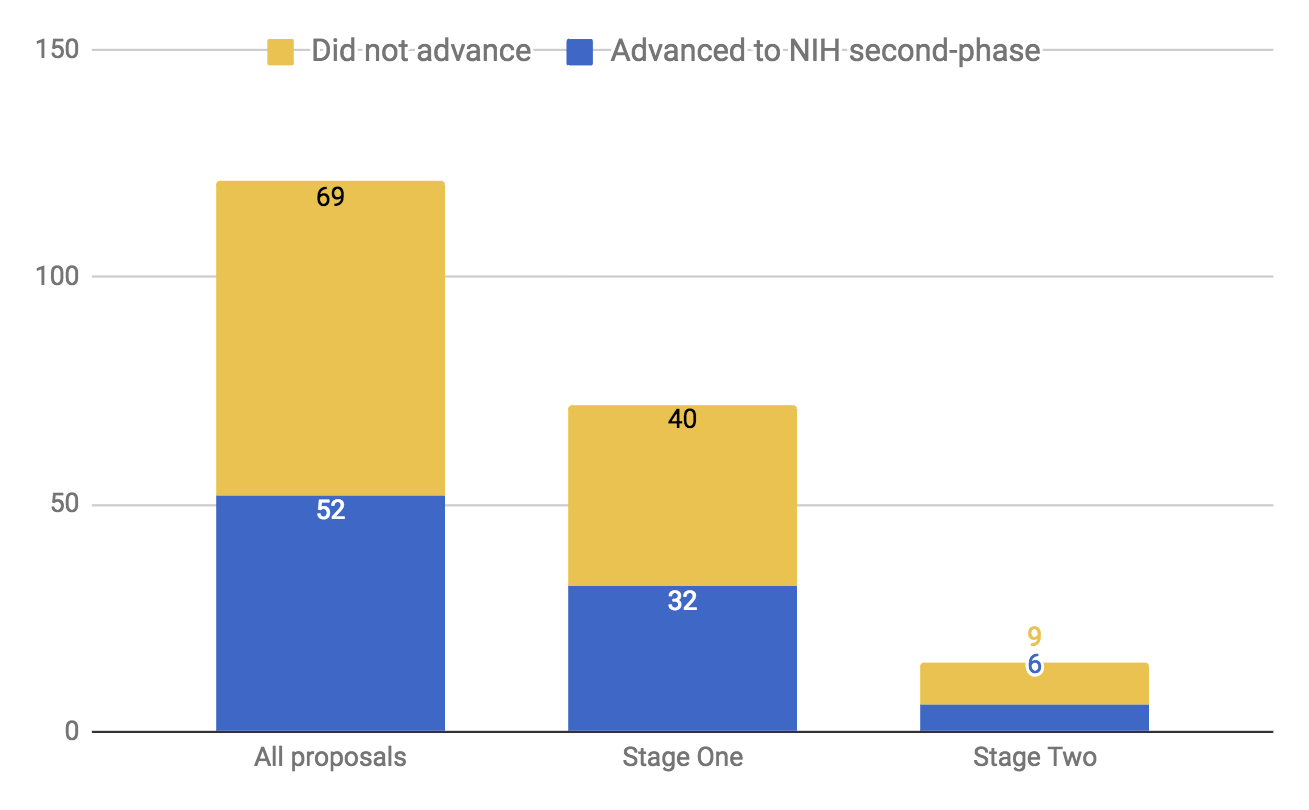

- In stage one, each member of the review team individually reviewed all 120 application abstracts. We then collected interest levels from each reviewer, and conducted three discussion sessions totaling approximately nine hours. From this, we eliminated approximately 40% of the submitted proposals from further consideration.

- In stage two, we conducted a more detailed examination of the remaining 73 proposals. Each proposal was randomly assigned to a primary reviewer and, in most cases, a secondary reviewer, each of whom produced a written evaluation of every proposal assigned to them. These evaluations typically took three to four hours of investigation each. This was followed by three discussion sessions with the review team totaling approximately 18 hours. From this point, our reviewers identified approximately 15 proposals for further consideration, and presented these, as well as examples of rejected proposals from both stage one and stage two, to our internal decision-makers on grants.

- In the final stage, we solicited external reviews for some of the remaining proposals and performed rough calculations of potential impact for some of them. In some instances, we contacted the application’s principal investigator to ask for additional information or clarification. Through this process, we eliminated 8 of the 12 remaining finalists, and proceeded to investigate the remaining four through our standard Open Philanthropy Project investigation process.

The overall RFP process took us about 360 calendar days from the initial launch to approval for all selected grants. Roughly 20% of that time consisted of coordinating the proposal call and conducting our internal review to determine the top 12 candidates, 40% of the time was used to collect external reviews and narrow the final field to 4 proposals, and 40% of the time was used to contact the prospective grantees and conduct our standard grant investigation process.
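The figures in this section can be cross-checked with a little arithmetic. The sketch below uses only numbers stated above (120 applications received, 73 proposals entering stage two, four funded grants, and the 20/40/40 split of roughly 360 calendar days); the variable names and the rounding to whole days are our own illustration, not part of the review process itself.

```python
received = 120         # applications received by the deadline
after_stage_one = 73   # proposals examined in stage two
funded = 4             # grants ultimately approved

eliminated = received - after_stage_one
stage_one_cut = eliminated / received   # share removed in stage one
overall_rate = funded / received        # share of applications funded

total_days = 360  # approximate calendar days from launch to approval
phase_shares = {
    "proposal call and internal review": 0.20,
    "external reviews and narrowing": 0.40,
    "grantee contact and investigation": 0.40,
}
# Rounded to whole days; the shares themselves are rough estimates.
phase_days = {name: round(total_days * share)
              for name, share in phase_shares.items()}

print(f"Stage one eliminated {eliminated} proposals ({stage_one_cut:.0%})")
print(f"Overall funding rate: {overall_rate:.1%}")
for name, days in phase_days.items():
    print(f"{name}: about {days} days")
```

On these numbers, stage one removed 47 proposals (about 39%, consistent with the "approximately 40%" above), and roughly 3% of applications were funded.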

3.3 Selected grants

The projects we decided to fund were as follows (more detail can be found on their individual grant pages):

- $6,421,402 to Arizona State University to support a canine clinical trial assessing the effectiveness of a multivalent cancer vaccine.

- $2,054,142 to the University of Notre Dame over three years for a nanopore protein sequencing project.

- $1,500,000 to Rockefeller University for research on viral histone mimics.

- $825,000 to the University of California, San Francisco over three years for an organ regenerative surgery project.
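As a quick consistency check, the four grant amounts listed above can be summed and compared with the combined total of $10,800,544 reported in the summary. The dictionary below is simply our own transcription of the list, and the share calculation is an illustration rather than a figure from the original analysis.

```python
# Grant amounts in US dollars, transcribed from the list above.
grants = {
    "Arizona State University": 6_421_402,
    "University of Notre Dame": 2_054_142,
    "Rockefeller University": 1_500_000,
    "University of California, San Francisco": 825_000,
}

total = sum(grants.values())
print(f"Combined total: ${total:,}")  # prints "Combined total: $10,800,544"

# Share of the combined total going to each recipient.
for recipient, amount in grants.items():
    print(f"{recipient}: {amount / total:.1%}")
```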

4 Lessons learned

Some of the lessons we learned through this process were relatively unsurprising to us. For example, we learned that:

- The NIH was willing to coordinate with us on this project. From this experience, we would guess that arrangements similar to this could be pursued in other contexts as well.

- Most TRA applicants were interested in and willing to send us their proposals as well as the NIH reviews they received. This was therefore an easy way to receive many proposals and reviews at once.

- Evaluating a heterogeneous set of proposals created fairly substantial additional work for our science team compared to typical investigations, because our advisors needed to conduct background reading in many areas and compare proposals that had little in common.

Other lessons learned were more surprising and interesting to us.

First, we found very little correlation between our evaluations of these proposals and the NIH’s evaluation of these proposals.

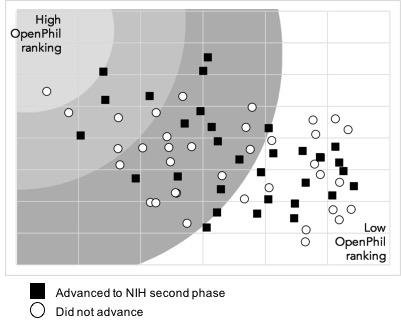

Applications to the 2016 TRA program received an initial three-phase peer review followed by a second-level review by the NIH Council of Councils. In the first phase of the initial peer review, an Editorial Board (consisting of 12-15 scientists) evaluated all applications and selected a subset to advance to the second phase; in the second phase, subject matter experts reviewed this subset of applications and provided comments; in the third phase, the Editorial Board, informed by the second-phase comments, selected a further subset to discuss and score in a panel meeting.4

We saw no apparent alignment between what we found interesting and what fared well in the NIH peer review process. 44% of the proposals that survived our initial review (stage one, above) had been selected for the second phase by the NIH peer review process, as had 40% of our final 15 proposals. The charts below show A) our review team’s ranking of proposal value against whether or not the proposal advanced to second-phase peer review by the NIH and B) the trajectory of proposals through our review stages, along with whether or not they had advanced to the second phase of NIH peer review.

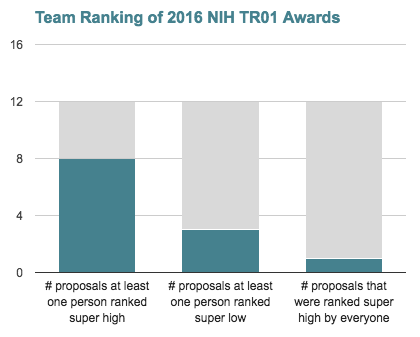

After making our final RFP selections, the review team ranked the public abstracts of the 12 proposals that had received an NIH award through the 2016 TRA program. Ranking was done blindly and on a 5-point scale. Overall, 2016 TRA awarded applications averaged a score of 3.6 out of 5 when ranked by our review team. Based on these scores, all TRA awarded proposals would have passed our stage one review. Two-thirds of these proposals were ranked “super high” by at least one member of our review team, and one-fourth were ranked “low” by at least one member of our review team. No proposals that received 2016 TRA grants were ranked “low” or “extremely low” by more than one member of our review team (see accompanying chart). We think this suggests that a few TRA awardee proposals might not have fared well in our final ranking, but none were ranked low by all team members.

Second, according to our scientific advisors, many of the proposals were similar to proposals in more typical RFPs in terms of their novelty and potential impact. This surprised us given the premise and focus of the TRA program. We speculate that this may be due to the constraints within which applicants feel they must work to get through panel reviews. One notable exception to this rule was a proposal led by Stephen Albert Johnston of Arizona State University to conduct an animal trial of a preventive, broad-spectrum cancer vaccine, which we decided to fund. While there were other proposals that were very interesting to us scientifically, they did not meet our other criteria.

Ultimately, we found the overall RFP process to be fairly smooth and believe it was a useful undertaking, both for the lessons learned and for the funding opportunities identified. We are still determining whether we will pursue a similar process in future years for scientific research, but expect that elements of this process could also be applied to some of our other focus areas.

5 Sources

| DOCUMENT | SOURCE |
|---|---|
| GiveWell Blog, Breakthrough Fundamental Science | Source |
| NIH Project Information, September 2017 [archive only] | Source |
| NIH TRA 2016 Application Instructions | Source (archive) |
| NIH TRA FAQ, September 2017 | Source (archive) |
| NIH TRA Website, September 2017 [archive only] | Source |