This is the fourth in a series of posts (intro, deterrence, incapacitation, aftereffects) summarizing the Open Philanthropy review of the evidence on the impacts of incarceration on crime. Read the full report here.

The two other in-depth posts in this series share what I learned about incarceration’s “before” effect, deterrence of crime, and its “during” effect, which is called “incapacitation.” In sum, in the current U.S. policy context, I doubt deterrence and believe in incapacitation.

Going by the analysis so far, rolling back mass incarceration would increase crime. But that tally is incomplete. This post turns to the “after” effects of incarceration, which I call, cleverly, “aftereffects.” Unlike deterrence and incapacitation, even the overall sign of aftereffects cannot be determined from general principles. Having been in jail or prison could “rehabilitate” you or “harden” you into greater criminality.

The traditionally favored term for aftereffects, “specific deterrence,” captures the idea that doing time viscerally strengthens the fear of punishment and deters people from reoffending. The corrections system corrects. Penitentiaries elicit penitence. No doubt, those things do often happen. And prisons do good in other ways. Some help people off of addictive substances, teach job and life skills, or improve literacy and self-control.

However, the prison experience can also manufacture criminality. It can alienate people from society, giving them less psychological stake in its rules. It can make people better criminals by bringing them together to learn from each other. It can strengthen their allegiances to gangs whose reach extends into prisons. While some may get drug treatment, others may not, even as they suffer through withdrawal or preserve access to drugs. And incarceration can permanently mark people in the eyes of employers, making it hard to find legal work.

My review includes 15 aftereffects studies. Six conclude that more time (or time in harsher conditions) leads to less crime, eight that it leads to more crime. One study is neutral, but it involves sentences of only a day or two, for drunk driving. If we give each study one vote, then the view that prison generally increases criminality wins, narrowly. Of course, all the studies could be correct for their setting, since the prison experience varies from place to place. Bearing in mind the potential for diversity, it is still worth searching for a consensus view, as the basis for a first-order generalization about the likely impacts of decarceration nationwide. In fact, I think closer inspection of the literature tends to strengthen the view that in the U.S. today, aftereffects are typically harmful. Some reasons:

- Of the six studies in the minority, two come from Georgia; their results appear explicable by a statistical artifact I have christened “parole bias.”

- Another is set in California nearly a half century ago, before retribution overturned rehabilitation as the dominant philosophy of corrections in the U.S.

- A study set in contemporary Seattle appears to suffer from baseline imbalance, meaning that the treatment and control groups differed from the beginning.

- One study in the minority looks compelling, yet is set in Norway, which appears to be much more committed to rehabilitating inmates than most American prisons (see this, this).

(The sixth looks at the impact of up to a month’s detention on juvenile offenders in Washington state. I find no serious problems with it.)

I also discovered reasons to doubt some of the studies in the majority. For example, I noticed baseline imbalance in a randomized trial that put some inmates in a higher-security prison. And in the study I’ll detail next, the quasi-experiment looks imperfect.

Nevertheless, a substantial family of studies coalesces around the finding that when incapacitation and aftereffects are measured in the same setting, the first is offset by the second, over time. That is to say: putting someone in prison cuts crime in the short run but increases it in the long run, on net.

The full review gives you my takes on all 15 studies. Here, I’ll focus on the three for which I managed to obtain data and code, which allowed me to critique them more thoroughly.

“Judge randomization” in the national capital

The single greatest reason for the abundance of research on aftereffects is that someone thought of a clever way to study the topic. Many court systems assign defendants to a judge, public defender, or prosecutor in a way that is substantially arbitrary, even random. Yet those judges, public defenders, and prosecutors can differ systematically in the outcomes they deliver. For example, some judges may imprison more defendants, or imprison them longer. The situation can then be viewed as an experiment in which defendants are arbitrarily assigned to harsher or milder judges. Researchers can then follow the data trail to find which defendants commit more crime later. I will call this technique “judge randomization” even though judges are not always the central actors and assignment to them is often not truly random.
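The logic of judge randomization can be sketched with a small simulation. Everything in it is a hypothetical assumption made for illustration: the four judges, their typical sentences, the assumed true effect of 0.01 per month, and all function names. None of it comes from the studies reviewed here. The sketch assigns defendants to judges at random, lets riskier defendants draw longer sentences from every judge (which confounds a naive comparison), and then estimates the effect of sentence length on rearrest using only the variation that comes from which judge a defendant happened to draw.

```python
import random
from statistics import fmean


def simulate(n=40000, true_effect=0.01, seed=0):
    """Simulate defendants arbitrarily assigned to judges of differing harshness.

    All numbers are hypothetical. `true_effect` is the assumed change in
    rearrest probability per extra month of sentence.
    """
    rng = random.Random(seed)
    judge_harshness = [2.0, 6.0, 10.0, 14.0]  # typical months handed down
    rows = []
    for _ in range(n):
        judge = rng.randrange(len(judge_harshness))  # arbitrary assignment
        risk = rng.random()                          # unobserved propensity
        # Riskier defendants get longer sentences from every judge.
        months = max(0.0, judge_harshness[judge]
                     + 8.0 * (risk - 0.5) + rng.gauss(0, 1))
        p_rearrest = 0.2 + 0.4 * risk + true_effect * months
        rearrested = 1 if rng.random() < p_rearrest else 0
        rows.append((judge, months, rearrested))
    return rows


def slope(xs, ys):
    """Ordinary least-squares slope of ys on xs."""
    mx, my = fmean(xs), fmean(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den


def naive_estimate(rows):
    """Regress rearrest on actual sentence; confounded by defendant risk."""
    return slope([m for _, m, _ in rows], [r for _, _, r in rows])


def judge_iv_estimate(rows):
    """Two-stage estimate: replace each sentence with the judge's average
    sentence, then regress rearrest on that judge-level average."""
    by_judge = {}
    for judge, months, _ in rows:
        by_judge.setdefault(judge, []).append(months)
    judge_mean = {j: fmean(ms) for j, ms in by_judge.items()}
    return slope([judge_mean[j] for j, _, _ in rows],
                 [r for _, _, r in rows])


rows = simulate()
naive = naive_estimate(rows)
iv = judge_iv_estimate(rows)
print(f"naive: {naive:.4f}  judge-based: {iv:.4f}  assumed truth: 0.0100")
```

In this toy setup the naive estimate overstates the harm of longer sentences because risk drives both sentence length and rearrest, while the judge-based estimate should land near the assumed truth. The real studies face the further complication, discussed below, that assignment is often not truly random.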

Susan Martin, Sampson Annan, and Brian Forst debuted judge randomization in 1993, in their study of the aftereffects of a couple nights in jail for drunk driving offenders. Donald Green and Daniel Winik brought the method to the heart of our inquiry: drug cases that put months or years of liberty at stake. And almost uniquely within this swelling stream of research, they freely shared their data and code.

The Green and Winik quasi-experiment took place among drug defendants in the Washington, DC, court system just down the road from me. There, each courtroom had a rolling calendar of cases. The process of assigning each defendant to a calendar was fairly arbitrary, but not actually random. Green and Winik write:

During 2002 and 2003…the Court used a mechanical wheel to rotate the assignment of new cases among the calendars—assigning one case to calendar 1, the next case to calendar 2, and so on. The arraignment court coordinator…explained that she deviated from the cycle when the caseload of a calendar was out of balance with the rest, generally because the judge in question had processed cases faster or slower than the norm. When such imbalances occur, she explained, the coordinator can skip an overloaded calendar in the cycle or assign additional cases to an underloaded one.

To see whether going before a “tougher” judge led to more or less crime after, Green and Winik obtained subsequent arrest records for about 1,000 defendants.

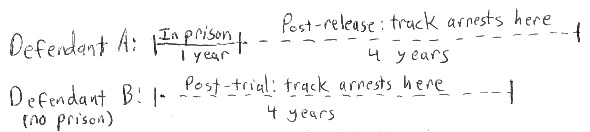

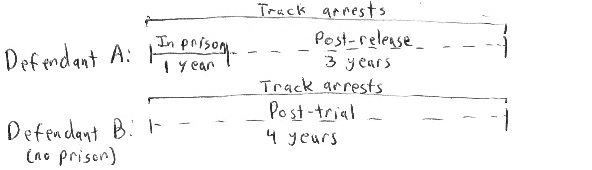

One could imagine Green and Winik running their study this way: Each defendant became free on some day. For defendants not sent to prison, that day came when the trial ended. Those imprisoned, on the other hand, gained freedom when released from prison months or years later. Green and Winik could have looked at whether each defendant was rearrested within a set period starting on that day—four years, in this case. If defendant A was sentenced to a year, Green and Winik would have checked whether A was rearrested within 1–5 years after the judge decided the case. If defendant B was sentenced to no time, they would have tracked arrest within 0–4 years of the decision. Here’s a diagram of this study design:

But doing that could bias results. People sent to prison longer will emerge older. And, beyond the early 20s, older people commit less crime. (That is as true today as it was 200 years ago.) So starting follow-up at release could create the false appearance of more time causing less crime.

To avoid that trap, Green and Winik start everyone’s follow-up clocks when the judge decided their case:

That is, they determine whether defendant A got arrested within four years of trial, including that first year in prison, when A couldn’t possibly have been arrested. That may seem strange, and from a certain perspective it also biases the results: now, the more of those four years that A spends in prison, the less chance for A to get arrested. Once again, more time could falsely appear to cause less crime.

But, looked at another way, this new bias is a feature, not a bug. Green and Winik’s approach can give unbiased estimates of the sum of aftereffects and incapacitation. And the total matters more for public safety than either alone. It captures the impacts on public safety of putting A in prison for a year both during and after that year.
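To make the two clock choices concrete, here is a minimal sketch using defendants A (sentenced to one year) and B (sentenced to no time) from the example above. The function names are my own, and the sketch assumes for simplicity that the full sentence is served.

```python
def window_from_release(sentence_years, follow_up_years=4):
    """Follow-up window, in years since the judge's decision,
    when the clock starts at release."""
    return (sentence_years, sentence_years + follow_up_years)


def window_from_decision(sentence_years, follow_up_years=4):
    """Follow-up window when the clock starts at the decision,
    plus the years within it actually spent at liberty."""
    years_at_liberty = max(0, follow_up_years - sentence_years)
    return (0, follow_up_years), years_at_liberty


for name, sentence in [("A", 1), ("B", 0)]:
    print(name, window_from_release(sentence), window_from_decision(sentence))
```

Under the first design, A is observed at older ages than B. Under the second, both are observed over the same calendar window, but A has only three of the four years in which to be arrested, which is how incapacitation gets folded into the estimate.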

Green and Winik find that, if anything, doing more time increased the chance of rearrest within four years, even though it narrowed the window for rearrest. (A one-tailed test rejects the hypothesis that the net effect was negative at p = 0.125.) Evidently, putting drug defendants in prison made them more likely to commit crime after, enough so to cancel out the temporary incapacitation.

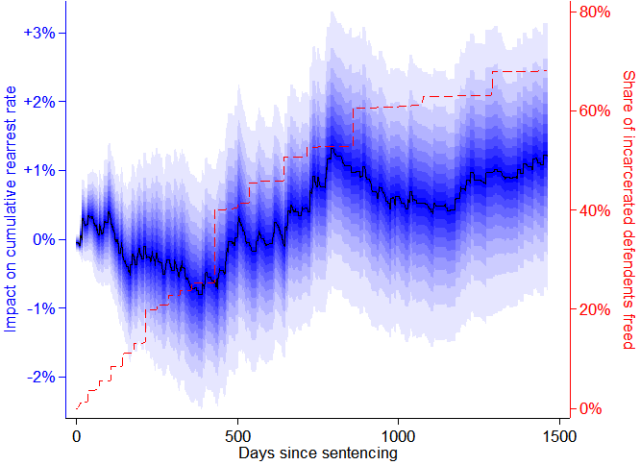

With access to the Green and Winik data, I made this graph to probe the timing of the effects. It plots the estimated impact of an extra month of incarceration on cumulative rearrest probability as a function of follow-up time:

The black squiggle starts at 0% and heads downward for the first 365 days or so: that means that receiving an extra month of sentence from a judge depressed the probability of being rearrested within the first year. And that makes sense since you can’t get arrested while in prison. However, as more people complete their terms and go free—a trend shown by the dotted red line—the black squiggle reverses course. By about two years, having been sentenced to more time raises the chance of rearrest since trial. (The blue bands show 95%, 85%, etc., confidence ranges.)

I ran some statistical tests that suggested that the Green and Winik experiment is imperfect, in that defendants assigned to some courtrooms had systematically different rearrest rates for reasons separate from their sentences. Possibly they differed systematically to begin with, as in the Helland and Tabarrok deterrence study. Or perhaps the judges in those courtrooms treated them differently in some way not captured in the study, such as by levying fines. (Green and Winik do also account for sentences to probation.) But I took steps to reduce this problem, and these hardly affected the results.

Other judge randomization papers reach similar conclusions—in Chicago (among adults and youths), Philadelphia, five Pennsylvania counties, and Houston. In Houston, harmful aftereffects are reported to vastly overshadow the short-term benefit of incapacitation.

That said, a Seattle study dissents, with evidence of more time leading to less crime; but it also suffers from statistical imbalance at the outset. Separately, judge-randomization research from Norway shows that that country’s prisons help inmates move from illegal activity to legal work.

Thus while the verdicts from judge randomization studies are not unanimous, I believe there is a consensus that when considering incapacitation and aftereffects together in typical American settings today, putting more people in prison is not preventing crime on net.

Two studies out of Georgia

Many U.S. states split the decision-making over how much time a person spends in prison. A judge hands down a sentence. Later, a parole board decides how much of the sentence an inmate will serve behind bars and how much in the “community,” under the more or less watchful eye of a parole officer.

Giving judges and parole boards such (collective) power has weighty consequences. For one, it makes their jobs hard: how is one to know whether 12 or 18 months is the punishment that fits the crime? Also, discretion opens the door to injustice if, say, people are found to serve different terms by virtue of their skin color. To make decisions on time served easier and more consistent, many states have drafted guidelines, even scoring systems that factor in the severity of a crime, priors, and so on. Ironically, these often inject new forms of arbitrariness into the system. When guidelines are revised, as in the Maryland study in my incapacitation post, similar people whose cases are decided just before and just after the revision can meet different fates. The same goes when guidelines draw sharp but debatable lines, such as between “mild” and “severe” crimes. However unjust, those foibles aid research by generating quasi-experiments.

Among the aftereffects studies I reviewed, one by Keith Chen and Jesse Shapiro found that federal prisoners who just cleared a threshold for assignment to low- rather than minimum-security prison recidivated more, if anything. Here, the harsher quality of a sentence, as distinct from quantity, seems to have raised recidivism. On the other hand, Randi Hjalmarsson discovered that Washington state juvenile offenders who scored just badly enough to be given up to a month’s detention, instead of milder sanctions such as community service, were 36% less likely to reappear in court in the next year or so. Roughly speaking, they seem to have been rehabilitated.

I found two other studies working from such discontinuities in guidance. Both focus on parole decisions in Georgia. Both find that the longer the parole board kept someone in prison, all else equal, the less likely the person was to return to prison in the three years following release: more time, less crime. And both I doubt.

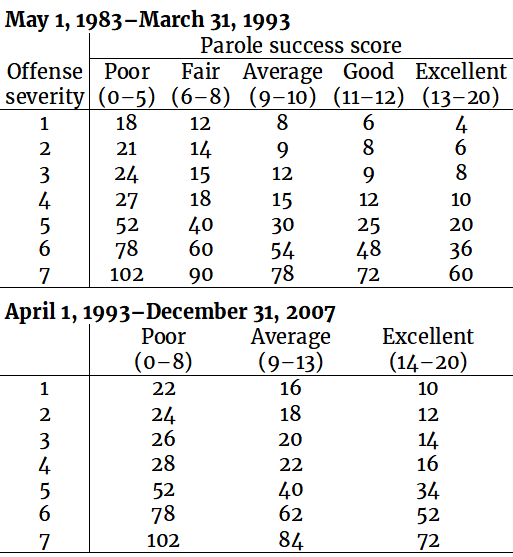

Since 1979, the Georgia parole board has taken guidance from a table of recommendations called the “grid.” To use the grid, a parole official first looks up the official severity rating of a prisoner’s crime. For example, “Bad Checks – under $2,000” is Level 1 while home burglary is Level 5. That tells the official what row of the grid to look in. To get the column, the official then has to compute the prisoner’s parole success score, which reflects age, prior offenses, and other traits thought to predict recidivism. This too is bracketed into categories, such as poor and average. Then the official finds the number of months in the grid entry. For example, for someone who had a poor parole success score and was convicted of a Level 1 crime, the 1983 edition of the grid recommended release after 18 months. You can find that number in the upper left of this table of two historical editions of the Georgia “grid” of recommendations for months in prison before parole:

In one of the Georgia studies, Ilyana Kuziemko asks: within the first four rows of the later grid, how did crossing the threshold from the Poor to the Average column (cutting recommended time served by six months) affect recidivism? In the other study, Peter Ganong asks: since the later edition boosted recommended time served for almost everyone, did its arrival on April 1, 1993, raise or lower recidivism? (In fact, the Kuziemko paper discerns and exploits four quasi-experiments in the Georgia data. But the grid-based one is most relevant here. See the full review for more.)

Kuziemko finds powerful, beneficial aftereffects. Each additional month served because of being on the “poor” side of the poor-average cut-off led to 1.3 percentage points less chance of return to prison within three years. Multiplying that by 12 roughly implies that each year of extra time cuts return-to-prison by 15.6 percentage points, nearly half the average rate of 34%. Ganong concurs on sign, if not quite on size. Each extra month served because of coming up for parole just after the new guidelines took effect cut three-year return-to-prison by 0.5 percentage points, or about 6.0 points per year. And while both studies start their follow-up clocks at release, opening the door to aging bias, I think even Ganong’s impact number is too big for aging to explain. Criminality doesn’t fall that fast.
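The per-year figures are simple linear extrapolations of the per-month estimates. A short check of the arithmetic, using only the numbers quoted above (the variable names are mine, and the assumption that a month's effect scales linearly to a year is the rough one made in the text):

```python
# Per-month reductions in three-year return-to-prison, in percentage points.
kuziemko_per_month = 1.3
ganong_per_month = 0.5
average_return_rate = 34.0  # percent returning to prison within three years

# Rough linear scaling from one month to twelve.
kuziemko_per_year = kuziemko_per_month * 12
ganong_per_year = ganong_per_month * 12

# Kuziemko's annualized effect as a share of the average return rate.
kuziemko_share = kuziemko_per_year / average_return_rate

print(f"Kuziemko: {kuziemko_per_year:.1f} points/year "
      f"({kuziemko_share:.0%} of the average rate)")
print(f"Ganong:   {ganong_per_year:.1f} points/year")
```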

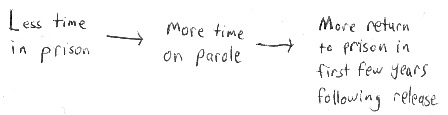

These results suggest that Georgia prisons rehabilitate their inmates. But another mechanism could generate the results, and I haven’t seen it written about before. I call it “parole bias.” In both Georgia studies, all else equal, the sooner people were paroled, the more of their three-year follow-up periods they spent on parole. And parole is a time of heightened risk of return to prison. The term derives from the French “parole d’honneur,” meaning “word of honor,” which captures the idea that the freedom is conditional. Unlike people fully at liberty, parolees can be yanked back to prison, or “revoked,” for violating their parole rules, such as by failing a drug test. They also can be revoked for misdemeanors that don’t normally carry prison sanctions. And if arrested on a serious, new charge, they may be reimprisoned more swiftly and surely, there being no need for a trial.

Consider this snippet from the recent New York Times account of the struggles of Connecticut parolee Erroll Brantley and his girlfriend Katherine Eaton:

On Easter Sunday, Mr. Brantley asked Ms. Eaton for money to buy drugs. They argued. Mr. Brantley, who had been drinking, backed Ms. Eaton against a wall, kicked her foot, pushed her into her bedroom and turned his back on her, saying, “I just want to punch you.” Instead, he kicked a door so hard it left a hole. The next day, Ms. Eaton went to the police.

By evening, Mr. Brantley was back where he started — behind bars.

Probably if he had been off parole, Brantley would not have wound up in jail or prison that fast.

The following causal chain could thus operate in the Georgia studies:

This could make it falsely appear that early release increased crime. The Georgia results are especially susceptible to this competing explanation because they largely measure recidivism as return to prison rather than rearrest or reconviction or reappearance in court. And return to prison is what parole makes more probable.
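A toy calculation shows how parole bias could arise even if time served had no effect at all on behavior. The sentence length, the monthly hazards of return to prison, and the function name below are all hypothetical choices of mine, not estimates from the Georgia data. The only assumption doing the work is that the monthly chance of return to prison is higher while on parole than after the sentence expires.

```python
def return_prob(months_served, sentence=48, follow_up=36,
                hazard_free=0.008, hazard_parole=0.016):
    """Chance of returning to prison within `follow_up` months of release.

    Hypothetical assumption: underlying behavior is identical for everyone;
    the monthly hazard of return is simply higher while on parole.
    """
    # Parole lasts from release until the original sentence expires.
    months_on_parole = min(follow_up, max(0, sentence - months_served))
    months_free = follow_up - months_on_parole
    survive = ((1 - hazard_parole) ** months_on_parole
               * (1 - hazard_free) ** months_free)
    return 1 - survive


early = return_prob(months_served=18)
late = return_prob(months_served=24)
print(f"released at 18 months: {early:.3f}")
print(f"released at 24 months: {late:.3f}")
```

In this sketch the person released earlier returns to prison more often purely because more of the follow-up window falls under parole supervision. Setting `hazard_parole` equal to `hazard_free` makes the gap vanish.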

As I describe in the full review, I replicated both Georgia studies, then attempted to expunge parole bias from them. My changes did indeed erase the finding that speedier release was followed by quicker return to prison. But for complex and unavoidable reasons, I may have over-corrected. So I can’t be sure that parole bias entirely accounts for Kuziemko and Ganong’s findings. But nor can I be sure that it doesn’t.

In the same spirit, I found and worked to correct other technical concerns in the studies. And I tested for robustness. For example, since Kuziemko worked across the Poor-Average divide in the newer grid, I did the same across the Average-Excellent divide, and across all the thresholds in the older grid. And what Ganong did with the adoption of the newer grid in 1993, I repeated with the adoption of the older one in 1983.

I also recast the Ganong analysis from before-after to discontinuity-focused. An analogy: instead of asking whether 18-year-olds as a group get arrested more than 17-year-olds as a group, asking whether the arrest rate jumps right at the 18th birthday, as in the Florida study in my deterrence post. The first might just be picking up a gradual aging effect, whereas if the second finds a jump right at the 18th birthday, that is compelling.
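The difference between the two approaches can be illustrated with made-up data. In this sketch, which is not Ganong's specification or mine, the cutoff sits at time zero, one series follows a smooth trend with no jump, and the other has the same trend plus a genuine drop at the cutoff. The data values and function names are hypothetical.

```python
def fit_line(xs, ys):
    """Least-squares intercept and slope of ys on xs."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    return my - slope * mx, slope


def before_after(ts, ys):
    """Difference in mean outcome after versus before the cutoff at t = 0."""
    before = [y for t, y in zip(ts, ys) if t < 0]
    after = [y for t, y in zip(ts, ys) if t >= 0]
    return sum(after) / len(after) - sum(before) / len(before)


def discontinuity(ts, ys):
    """Jump at t = 0: gap between separate linear fits on each side,
    both evaluated at the cutoff."""
    left = [(t, y) for t, y in zip(ts, ys) if t < 0]
    right = [(t, y) for t, y in zip(ts, ys) if t >= 0]
    a_left, _ = fit_line([t for t, _ in left], [y for _, y in left])
    a_right, _ = fit_line([t for t, _ in right], [y for _, y in right])
    return a_right - a_left


ts = list(range(-24, 24))                # months relative to the cutoff
smooth = [0.34 - 0.001 * t for t in ts]  # gradual trend, no jump
jumped = [y - 0.03 if t >= 0 else y for t, y in zip(ts, smooth)]

print("smooth:", before_after(ts, smooth), discontinuity(ts, smooth))
print("jumped:", before_after(ts, jumped), discontinuity(ts, jumped))
```

The before-after comparison reports a change in both series, because it cannot tell a trend from a jump. The discontinuity estimate is essentially zero for the smooth series and recovers the drop in the other.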

Ganong’s findings proved more robust than Kuziemko’s. That put the Ganong study in a special position as I turned to cost-benefit analysis. It embodies what for Open Philanthropy is the devil’s advocate view that in American settings, more time in prison reduces recidivism. And because Peter Ganong had shared the data with me, I could break out his findings by crime type, in order to feed into a devil’s advocate cost-benefit analysis. Indeed, I extended Ganong’s methods to ask if, in Georgia, longer prison spells reduced returns to prison for burglary, say, or drug charges. Just as with Helland and Tabarrok’s deterrence study, I needed the breakout because the cost-benefit analysis should reflect that some crimes do more harm than others.

The answers tended to reinforce the theory of parole bias. The only category of offense clearly reduced was not murder, or burglary, or car theft, but…parole revocations for new felony charges. People paroled later had fewer of those. This helps explain why in Ganong’s own costing estimate, an additional year in Georgia prison cut a person’s total crime in the 10 years after release by an amount worth just $50.

Summary & cost-benefit analysis

I imagine that it is a profound experience to be imprisoned. Because not all prisons are the same and not all prisoners are the same, the lifelong reverberations must be diverse. We should expect that doing time will drive some people away from crime, draw others toward it, and leave still others back where they started. In this light, we should not expect prison’s average effects to be the same in all places and times. And we should not be surprised to find studies that disagree.

Yet, it is often useful to generalize, to the extent one reasonably can. It helps us estimate, for instance, the likely impacts of decarceration in Mississippi when the closest good studies were done in Georgia. It also helps a national actor such as Open Philanthropy think about whether and how to engage with nationwide criminal justice reform movements.

I read the evidence on the aftereffects of incarceration to say that:

- Run right, prisons can rehabilitate, by preparing people to enter the legal job market. Witness Norway.

- After tough review, the bulk of the evidence says that in the United States today, prison is making people more criminal. The short-term crime reduction from incarcerating more people gets cancelled out in the long run.

- Most of the studies opposing this generalization have serious problems or come from contexts far removed from present-day America.

- Many of the studies supporting this generalization have problems of their own—if not all fatal, still adequate to generate some doubt. I don’t have 100% confidence in the cleanliness of the Green and Winik quasi-experiment, for example, even if my work-arounds did not change the answer much. Call it 80%.

- We are left with a half dozen or more contemporary, U.S.-based studies that point with substantial credibility to harmful aftereffects, and only one, involving a month’s detention for juveniles, pointing the other way.

If the aftereffects of incarceration at least cancel out the during effects, and the before effects are practically zero, as the intro post essentially concludes, then at current margins, building and filling prisons is not making people safer. It may even be endangering the public. In that case, the cost-benefit case for decarceration is a no-brainer: all benefit and no cost.

But since I may have allowed bias to creep into my scrutiny and filtration of the evidence, I close my review with a cost-benefit analysis under a devil’s advocate interpretation of the evidence. The devil’s advocate says that prisons still reduce crime, citing Helland and Tabarrok on the deterrence of Three Strikes, Lofstrom and Raphael on the reverse-incapacitation of Public Safety Realignment, and Ganong on the lower recidivism of prisoners held longer.

If the devil’s advocate is right, that forces a shift in question, from whether to how much. Perhaps the typical crime increase from decarceration would still be modest enough that most people would accept it in return for the taxpayer savings, the curtailment of government encroachment on liberty, and the reduced disruption of prisoners’ families.

To explore this possibility (somewhat) systematically, I close my report with a cost-benefit analysis. As one should expect, this requires making many debatable calls. I take the value of human life to be $100,000/year, and halve that for the value of freedom. Adding in taxpayer savings brings the benefit of decarceration to some $92,000/person-year.

On the cost side, a big swing factor turns out to be how one puts dollar values on crimes. Two main approaches have emerged among academics. Bottom-up analyses tally concrete expenditures on prevention (e.g., on burglar alarms) and treatment (e.g., for post-assault medical care) and even add in intangible harms inferred from jury awards to crime victims. Crunching such numbers under the devil’s advocate reading of the evidence produces a cost figure of $27,000, most of that for rape and motor vehicle theft. That is less than a third of the $92,000 benefit figure.

Alternatively, willingness-to-pay studies run surveys that directly ask people how much they would be willing to pay to prevent crimes—or think their community ought to be willing to pay. From those, I derive a higher number, $92,000, more than half of which represents increased burglaries. Despite the seeming precision in the match to the benefit of decarceration, which I also put at $92,000, this estimate should only be taken to say that when the devil’s-advocate reading of the evidence is fed into the least favorable crime costing method, decarceration comes out as roughly break-even for society.
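The comparison can be laid out as a few lines of arithmetic. This sketch uses only the rounded dollar figures quoted in this post. The taxpayer-savings number is not stated here, so the code backs it out from the other figures, and it should be read as an implied approximation rather than a number from the report. Variable names are my own.

```python
# Figures quoted in the text, in dollars per person-year.
value_of_life_year = 100_000
value_of_freedom = value_of_life_year / 2
total_benefit = 92_000

# Not stated in the text: implied by the rounded figures above.
implied_taxpayer_savings = total_benefit - value_of_freedom

# Crime cost of decarceration under the devil's advocate reading,
# by crime-costing method.
crime_cost = {"bottom-up": 27_000, "willingness-to-pay": 92_000}
cost_to_benefit = {method: cost / total_benefit
                   for method, cost in crime_cost.items()}

for method, ratio in cost_to_benefit.items():
    print(f"{method}: crime cost is {ratio:.0%} of the benefit")
```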

In fact, I argue that the willingness-to-pay surveys that undergird that higher cost figure are especially shaky. People have little idea of how much crime happens around them. In almost every year since 1993, a majority of Americans have believed that crime was rising, even as it almost never was. And if Americans don’t know how much crime there is, it’s not clear what their answers mean when asked what they would be willing to pay to cut crime 10%.

Of course, under the reading of the evidence that looks more accurate to me, decarceration would not generally increase, and might even reduce, the rate of crime in the United States. In this view, decarceration comes at no aggregate cost, only benefit.

Code and data for all replications are here (800 MB, right-click and select “save link as”). The cost-benefit spreadsheet is here.