Updated January 2018.

We aspire to extend empathy to every being that warrants moral concern, including animals. And while many experts, government agencies, and advocacy groups agree that some animals live lives worthy of moral concern,1 there seems to be little agreement on which animals warrant moral concern.2 Hence, to inform our long-term giving strategy, I (Luke Muehlhauser) investigated the following question: “In general, which types of beings merit moral concern?” Or, to phrase the question as some philosophers do, “Which beings are moral patients?”3

For this preliminary investigation, I focused on just one commonly endorsed criterion for moral patienthood: phenomenal consciousness, a.k.a. “subjective experience.” I have not come to any strong conclusions about which (non-human) beings are conscious, but I think some beings are more likely to be conscious than others, and I make several suggestions for how we might make progress on the question.

In the long run, to form well-grounded impressions about how much we should value grants aimed at (e.g.) chicken or fish welfare, we need to form initial impressions not just about which creatures are more and less likely to be conscious, but also about (a) other plausible criteria for moral patienthood besides consciousness, and (b) the question of “moral weight” (see below). However, those two questions are beyond the scope of this initial report on consciousness. In the future I hope to build on the initial framework and findings of this report, and come to some initial impressions about other criteria for moral patienthood and about moral weight.

This report is unusually personal in nature, as it necessarily draws heavily from the empirical and moral intuitions of the investigator. Thus, the rest of this report does not necessarily reflect the intuitions and judgments of the Open Philanthropy Project in general. I explain my views in this report merely so they can serve as one input among many as the Open Philanthropy Project considers how to clarify its values and make its grantmaking choices.

1 How to read this report

The length of this report, compared to the length of my other reports for the Open Philanthropy Project, might suggest to the reader that I am a specialist on consciousness and moral patienthood. Let me be clear, then, that I am not a specialist on these topics. This report is long not because it engages its subject with the depth of an expert, but because it engages an unusual breadth of material — with the shallowness of a non-expert.

The report’s unusual breadth is a consequence of the fact that, when it comes to examining the likely distribution of consciousness (what I call “the distribution question”), we barely even know which kinds of evidence are relevant (besides human self-report), and thus I must survey an unusually broad variety of types of evidence that might be relevant. Compare to my report on behavioral treatments for insomnia: in that case, it was quite clear which studies would be most informative, so I summarized only a tiny portion of the available literature.4 But when it comes to the distribution-of-consciousness question, there is extreme expert disagreement about which types of evidence are most informative. Hence, this report draws from a very large set of studies across a wide variety of domains — comparative ethology, comparative neuroanatomy, cognitive neuroscience, neurology, moral philosophy, philosophy of mind, etc. — and I am not an expert in any of those fields.5

Given all this, my goal for this report cannot be to argue for my conclusions, in the style of a scholarly monograph on consciousness, written by a domain expert.6 Nor is it my goal to survey the evidence which plausibly bears on the distribution question, as such a survey would likely run thousands of pages, and require the input of dozens of domain experts. Instead, my more modest goals for this report are to:

- survey the types of evidence and argument that have been brought to bear on the distribution question,

- briefly describe example pieces of evidence of each type,7 without attempting to summarize the vast majority of the evidence (of each type) that is currently available,

- report what my own intuitions and conclusions are as a result of my shallow survey of those data and arguments,

- try to give some indication of why I have those intuitions, without investing the months of research that would be required to rigorously argue for each of my many reported intuitions, and

- list some research projects that seem (to me) like they could make progress on the key questions of this report, given the current state of evidence and argument.

Given these limited goals, I don’t expect to convince career consciousness researchers of any non-obvious substantive claims about the distribution of consciousness. Instead, I focused on finding out whether I could convince myself of any non-obvious substantive claims about the distribution of consciousness. As you’ll see, even this goal proved challenging enough.

Despite this report’s length, I have attempted to keep the “main text” (sections 2-4) modular and short (roughly 20,000 words).8 I provide many clarifications, elaborations, and links to related readings (that I don’t necessarily endorse) in the appendices and footnotes.

In my review of the relevant literature, I noticed that it’s often hard to interpret claims about consciousness because they are often grounded in unarticulated assumptions, and (perhaps unavoidably) stated vaguely. To mitigate this problem somewhat for this report, section 2 provides some background on “where I’m coming from,” and can be summarized in a single jargon-filled paragraph:

This report examines which beings and processes might be moral patients given their phenomenal consciousness, but does not examine other possible criteria for moral patienthood, and does not examine the question of moral weight. I define phenomenal consciousness extensionally, with as much metaphysical innocence and theoretical neutrality as I can. My broad philosophical approach is naturalistic (a la Dennett or Wimsatt) rather than rationalistic (a la Chalmers or Chisholm),9 and I assume physicalism, functionalism, and illusionism about consciousness. I also assume the boundary around “consciousness” is fuzzy (a la “life”) rather than sharp (a la “water” = H2O), both between and within individuals. My meta-ethical approach employs an anti-realist kind of ideal advisor theory.

If that paragraph made sense to you, then you might want to jump ahead to section 3, where I survey the types of evidence and arguments that have been brought to bear on the distribution question. Otherwise, you might want to read the full report.

In section 3, I conclude that no existing theory of consciousness (that I’ve seen) is satisfying, and thus I investigate the distribution question via relatively theory-agnostic means, examining analogy-driven arguments, potential necessary or sufficient conditions for consciousness, and some big-picture considerations that pull toward or away from a “consciousness is rare” conclusion.

To read only my overall tentative conclusions, see section 4. In short, I think mammals, birds, and fishes,10 are more likely than not to be conscious, while (e.g.) insects are unlikely to be conscious. However, my probabilities are very “made-up” and difficult to justify, and it’s not clear to us what actions should be taken on the basis of such made-up probabilities.

I also prepared a list of potential future investigations that I think could further clarify some of these issues for us — at least, given my approach to the problem.

This report includes several appendices:

- Appendix A explains how I use my moral intuitions, reports some of my moral intuitions about particular cases, and illustrates how existing theories of consciousness and moral patienthood could be clarified by frequent reference to code snippets or existing computer programs.

- Appendix B explains what I find unsatisfying about current theories of consciousness, and says a bit about what a more satisfying theory of consciousness could look like.

- Appendix C summarizes the evidence concerning unconscious vision and the “two streams of visual processing” theory that is discussed briefly in my section on whether a cortex is required for consciousness.

- Appendix D makes several clarifications concerning the distinction between nociception (which can be unconscious) and pain (which cannot).

- Appendix E makes some clarifications about how I’m currently estimating “neuroanatomical similarity,” which plays a role in my “theory-agnostic estimation process” for guessing whether a being is conscious (described here).

- Appendix F explains illusionism in a bit more detail, and makes some brief comments about how illusionism interacts with my intuitions about moral patienthood.

- Appendix G elaborates my views on the “fuzziness” of consciousness.

- Appendix H examines how the possibility of hidden qualia might undermine the central argument for higher-order theories.

- Appendix Z collects a variety of less-important sub-appendices, for example a list of theories of consciousness, a list of varieties of conscious experience, a list of questions about which consciousness scholars exhibit extreme disagreement, a list of candidate dimensions of moral concern (for estimating moral weight), some brief comments on unconscious emotions, some reasons for my default skepticism about published studies, and some recommended readings.

Acknowledgements: Many thanks to those who gave me substantial feedback on earlier drafts of this report: Scott Aaronson, David Chalmers, Daniel Dewey, Julia Galef, Jared Kaplan, Holden Karnofsky, Michael Levine, Buck Shlegeris, Carl Shulman, Taylor Smith, Brian Tomasik, and those who participated in a series of GiveWell discussions on this topic. I am also grateful to several people for helping me find some of the data related to potentially consciousness-indicating features presented below: Julie Chen, Robin Dey, Laura Ong, and Laura Muñoz. My thanks also to Oxford University Press and MIT Press for granting permission to reproduce some images to which they own the copyright.

2 Explaining my approach to the question

2.1 Why we care about the question of moral patienthood

How does the question of moral patienthood fit into our framework for thinking about effective giving?

The Open Philanthropy Project focuses on causes that score well on our three criteria — importance, neglectedness, and tractability. Our “importance” criterion is: “How many individuals does this issue affect, and how deeply?” Elaborating on this, we might say our importance criterion is: “How many moral patients does this issue affect, and how much could we benefit them, with respect to appropriate dimensions of moral concern (e.g. pain, pleasure, desire fulfillment, self-actualization)?”

As with many framing choices in this report, this is far from the only way to approach the question,11 but we find it to be a framing that is pragmatically useful to us as we try to execute our mission to “accomplish as much good as possible with our giving” without waiting to first resolve all major debates in moral philosophy.12 (See also our blog post on radical empathy.)

In the long run, we’d like to have better-developed views not just about which beings are moral patients, but also about how to weigh the interests of different kinds of moral patients against each other. For example: suppose we conclude that fishes, pigs, and humans are all moral patients, and we estimate that, for a fixed amount of money, we can (in expectation) dramatically improve the welfare of (a) 10,000 rainbow trout, (b) 1,000 pigs, or (c) 100 adult humans. In that situation, how should we compare the different options? This depends (among other things) on how much “moral weight” we give to the well-being of different kinds of moral patients. Or, more granularly, it depends on how much moral weight we give to various “appropriate dimensions of moral concern,” which then collectively determine the moral weight of each particular moral patient.13
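To make the dependence on moral weight concrete, here is a minimal sketch of the comparison above. The counts (10,000 trout, 1,000 pigs, 100 humans) come from the example; everything else is an assumption made purely for illustration: the linear "count times weight times welfare gain" model, the function names, and both sets of moral weights are hypothetical placeholders, not estimates from this report.

```python
from typing import Dict

# Counts taken from the example in the text.
INDIVIDUALS_HELPED: Dict[str, int] = {
    "rainbow trout": 10_000,
    "pigs": 1_000,
    "adult humans": 100,
}


def weighted_benefit(count: int, moral_weight: float, welfare_gain: float = 1.0) -> float:
    """Naive linear model (an assumption): count x moral weight x per-individual gain."""
    return count * moral_weight * welfare_gain


def best_option(moral_weights: Dict[str, float]) -> str:
    """Return the option with the highest weighted benefit under the given weights."""
    scores = {
        name: weighted_benefit(count, moral_weights[name])
        for name, count in INDIVIDUALS_HELPED.items()
    }
    return max(scores, key=scores.get)


# HYPOTHETICAL weight sets, chosen only to show that the ranking can flip.
EQUAL_WEIGHTS = {"rainbow trout": 1.0, "pigs": 1.0, "adult humans": 1.0}
STEEP_WEIGHTS = {"rainbow trout": 0.001, "pigs": 0.05, "adult humans": 1.0}

if __name__ == "__main__":
    print(best_option(EQUAL_WEIGHTS))  # the largest group wins when weights are equal
    print(best_option(STEEP_WEIGHTS))  # a steep weighting favors the smallest group
```

Under equal weights the option helping the most individuals ranks first, while under the steeper hypothetical weights the ordering reverses. The sketch shows only that the answer is sensitive to the weights; it says nothing about which weights are appropriate.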

This report, however, focuses on articulating my early thinking about which beings are moral patients at all.14 We hope to investigate plausibly appropriate dimensions of moral concern — i.e., the question of “moral weight” — in the future. For now, I merely list some candidate dimensions in Appendix Z.7.

2.2 Moral patienthood and consciousness

2.2.1 My metaethical approach

Philosophers, along with everyone else, have very different views about “metaethics,” i.e. about the foundations of morality and the meaning of moral terms.15 In this section, I explain my own metaethical approach — not because my moral judgments depend on this metaethical approach (they don’t), but merely to give my readers a sense of “where I’m coming from.”

Terms like “moral patient” and “moral judgment” can mean different things depending on one’s metaethical views, for example whether one is a “moral realist.” I have tried to phrase this report in a relatively “metaethically neutral” way, so that e.g. if you are a moral realist you can interpret “moral judgment” to mean “my best judgment as to what the moral facts are,” whereas if you are a certain kind of moral anti-realist you can interpret “moral judgment” to mean “my best guess as to what I would personally value if I knew more and had more time to think about my values,” and if you have different metaethical views, you might mean something else by “moral judgment.” But of course, my own metaethical views unavoidably lead my report down some paths and not others.

Personally, I use moral terms in such a way that my “moral judgments” are not about objective moral facts, but instead about my own values, idealized in various ways, such as by being better-informed (more on this in Appendix A).16 Under such a view, the question of (e.g.) whether some particular fish is a moral patient, given my values, is a question about whether that fish has certain properties (e.g. conscious experience, or desires that can be satisfied or frustrated) about which I have idealized preferences. For example: if the fish isn’t conscious, I’m not sure I care whether its preferences are satisfied or not, any more than I care whether a (presumably non-conscious) chess-playing computer wins its chess matches or not. But if the fish is conscious (in a certain way), then I probably do care about how much pleasure and how little pain it experiences, for the same reason I care about the pleasure and pain of my fellow humans.

Thus, my aim is not to conduct a conceptual analysis17 of “moral patient,” nor is my aim to discover what the objective moral facts are about which beings are moral patients. Instead, my aim is merely to examine which beings I should consider to be moral patients, given what I predict my values would be if they were better-informed, and idealized in other ways.

I suspect my metaethical approach and my moral judgments overlap substantially with those of at least some other Open Philanthropy Project staff members, and also with those of many likely readers, but I also assume there will be a great deal of non-overlap with my colleagues at the Open Philanthropy Project and especially with other readers. My only means for dealing with that fact is to explain as clearly as I can which judgments I am making and why, so that others can consider what the findings of this report might imply given their own metaethical approach and their own moral judgments.

For example, in Appendix A I discuss my moral intuitions with respect to the following cases:

- An ankle injury that I don’t notice right away.

- A fictional character named Phenumb who is conscious in general but has no conscious feelings associated with the satisfaction or frustration of his desires.

- A short computer program that continuously increments a variable called `my_pain`.

- A Mario-playing program that engages in fairly sophisticated goal-directed behavior using a simple search algorithm called A* search.

- A briefly-sketched AI program that controls the player character in a puzzle game in a way that (seemingly/arguably) exhibits some commonly-endorsed indicators of consciousness, and that (seemingly/arguably) satisfies some theories of moral patienthood.

I suspect most readers share my moral intuitions about some of these cases, and have differing moral intuitions with respect to others.
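For readers who want something concrete to test their intuitions against, the third case above might look roughly like the sketch below. This is an assumed reconstruction, not the snippet discussed in Appendix A: only the variable name `my_pain` comes from the text, while the function name and the `steps` parameter are hypothetical, added so that the loop terminates (the case as described increments continuously).

```python
def run_pain_counter(steps: int) -> int:
    """Increment a variable named my_pain `steps` times and return its final value.

    The case in the text increments forever; the bound here is an assumption
    made only so that the example can be run and checked.
    """
    my_pain = 0
    for _ in range(steps):
        my_pain += 1
    return my_pain


if __name__ == "__main__":
    print(run_pain_counter(1000))
```

Nothing in the program distinguishes `my_pain` from any other counter, and renaming the variable would leave its behavior unchanged. That is presumably part of why it serves as a useful test case for intuitions.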

2.2.2 Proposed criteria for moral patienthood

Presumably a cognitively unimpaired adult human is a moral patient, and a rock is not.18 But what about someone in a persistent vegetative state? What about an anencephalic infant? What about a third-trimester human fetus?19 What about future humans? What about chimpanzees, dogs, cows, chickens, fishes, squid, lobsters, beetles, bees, Venus flytraps, and bacteria? What about sophisticated artificial intelligence systems, such as Facebook’s face recognition system or a self-driving car?20 What about a (so-called) self-aware, self-expressive, and self-adaptive camera network?21 What about a non-player character in a first-person shooter video game, which makes plans and carries them out, ducks for cover when the player shoots virtual bullets at it, and cries out when hit?22 What about the enteric nervous system in your gut, which employs about 5 times as many neurons as the brain of a rat, and would continue to autonomously coordinate your digestion even if its main connection with your brain was severed?23 Is each brain hemisphere in a split-brain patient a separate moral patient?24 Can ecosystems or companies or nations be moral patients?25

Such questions are usually addressed by asking whether a potential moral patient satisfies some criteria for moral patienthood. Criteria I have seen proposed in the academic literature include:

- Personhood or interests. (I won’t discuss these criteria separately, as they are usually composed of one or more of the criteria listed below.26)

- Phenomenal consciousness, a.k.a. “subjective experience.” See the detailed discussion below.27

- Valenced experience: This criterion presumes not just phenomenal consciousness but also some sense in which phenomenal consciousness can be “valenced” (e.g. pleasure vs. pain).

- Various sophisticated cognitive capacities such as rational agency, self-awareness, desires about the future, ability to abide by moral responsibilities, ability to engage in certain kinds of reciprocal relationships, etc.28

- Capacity to develop these sophisticated cognitive capacities, e.g. as is true of human fetuses.29

- Less sophisticated cognitive capacities, or the capacity to develop them, e.g. learning, nociception, memory, selective attention, etc.30

- Group membership: e.g. all members of the human species, or all living things.31

Note that moral patienthood can be seen as binary or scalar,32 and the boundary between beings that are and are not moral patients might be “fuzzy” (see below).

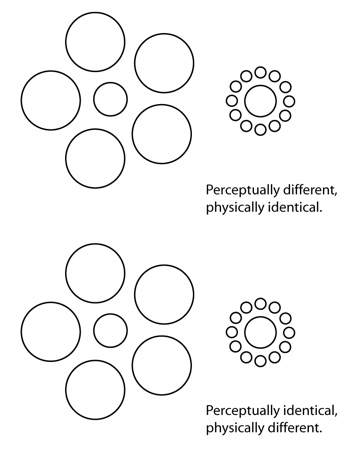

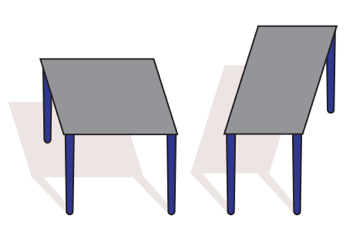

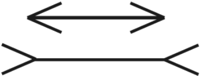

It is also important to remember that, whatever criteria for moral patienthood we endorse upon reflection, our intuitive attributions of moral patienthood are probably unconsciously affected33 by factors that we would not endorse if we understood how they were affecting us. For example, we might be more likely to attribute moral patienthood to something if it has a roughly human-like face, even though few if any of us would endorse “possession of a human-like face” as a legitimate criterion of moral patienthood. A similar warning can be made about factors which might affect our attributions of phenomenal consciousness and other proposed criteria for moral patienthood.34

An interesting test case is this video of a crab tearing off its own claw. To me, the crab looks “nonchalant” while doing this, which gives me the initial intuition that the crab must not be conscious, or else it would be “writhing in agony.” But crabs are different from humans in many ways. Perhaps this is just what a crab in conscious agony looks like. Or perhaps not.35

2.2.3 Why I investigated phenomenal consciousness first

The only proposed criterion of moral patienthood I have investigated in any depth thus far is phenomenal consciousness. I chose to examine phenomenal consciousness first because:

- My impression is that phenomenal consciousness is perhaps the most commonly-endorsed criterion of moral patienthood, and that it is often considered to be the most important such criterion (by those who use multiple criteria). Self-awareness (sometimes called “self-consciousness”) and valenced experience are other contenders for being the most commonly-endorsed criterion of moral patienthood, but in most cases it is assumed that the kinds of self-awareness or valenced experience that confer moral patienthood necessarily involve phenomenal consciousness as well.

- My impression is that phenomenal consciousness, or a sort of valenced experience that presumes phenomenal consciousness, are especially commonly-endorsed criteria of moral patienthood among consequentialists, whose normative theories most easily map onto our mission to “accomplish as much good as possible with our giving.”

- Personally, I’m not sure whether consciousness is the only thing I care about, but it is the criterion of moral patienthood I feel most confident about (for my own values, anyway).

However, it’s worth bearing in mind that most of us probably intuitively morally care about other things besides consciousness. I focus on consciousness in this report not because I’m confident it’s the only thing that matters, but because my report on consciousness alone is long enough already! I hope to investigate other potential criteria for moral patienthood in the future.

I’m especially eager to think more about valenced experiences such as pain and pleasure. As I explain below, my own intuitions are that if a being had conscious experience, but literally none of it was “valenced” in any way, then I might not have any moral concern for such a creature. But in this report, I focus on the issue of phenomenal consciousness itself, and say very little about the issue of valenced experience.

2.3 My approach to thinking about consciousness

In consciousness studies there is so little consensus on anything — what’s meant by “consciousness,” what it’s made of, what kinds of methods are useful for studying it, how widely it is distributed, which theories of consciousness are most promising, etc. (see Appendix Z.5) — that there are no safe guesses about “where someone is coming from” when they write about consciousness. This often made it difficult for me to understand what writers on consciousness were trying to say, as I read through the literature.

To mitigate this problem somewhat for this explanation of my own tentative views about consciousness, I’ll try to explain “where I’m coming from” on consciousness, even if I can’t afford the time to explain in much detail why I make the assumptions I do.

2.3.1 Consciousness, innocently defined

Van Gulick (2014) describes six different senses in which “an animal, person, or other cognitive system” can be regarded as “conscious,” and the four I can explain most quickly are:

- Sentience: capable of sensing and responding to its environment

- Wakefulness: awake (e.g. not asleep or in a coma)

- Self-consciousness: aware of itself as being aware

- What it is like: subjectively experiencing36 a certain “something it is like to be” (Nagel 1974), a.k.a. “phenomenal consciousness” (Block 1995), a.k.a. “raw feels” (Tolman 1932)

When I say “consciousness,” I have in mind the fourth concept.37

In particular, I have in mind a relatively “metaphysically and epistemically innocent” definition, a la Schwitzgebel (2016):38

Phenomenal consciousness can be conceptualized innocently enough that its existence should be accepted even by philosophers who wish to avoid dubious epistemic and metaphysical commitments such as dualism, infallibilism, privacy, inexplicability, or intrinsic simplicity. Definition by example allows us this innocence. Positive examples include sensory experiences, imagery experiences, vivid emotions, and dreams. Negative examples include growth hormone release, dispositional knowledge, standing intentions, and sensory reactivity to masked visual displays. Phenomenal consciousness is the most folk psychologically obvious thing or feature that the positive examples possess and that the negative examples lack…

There are many other examples we can point to.39 For example, when I played sports as a teenager, I would occasionally twist my ankle or acquire some other minor injury while chasing after (e.g.) the basketball, and I didn’t realize I had hurt myself until after the play ended and I exited my flow state. In these cases, a “rush of pain” suddenly “flooded” my conscious experience — not because I had just then twisted my ankle, but because I had twisted it 5 seconds earlier, and was only just then becoming aware of it. The pain I felt 5 seconds after I twisted my ankle is a positive example of conscious experience, and whatever injury-related processing occurred in my nervous system during those initial 5 seconds is, as far as I know, a negative example.

However, I would qualify Schwitzgebel’s extensional definition of consciousness by noting that the negative examples, in particular, are at least somewhat contested. A rock is an obvious negative example for most people, but panpsychists disagree, and it is easy to identify other contested examples.40

More plausible than rock consciousness, I think, is the possibility that somewhere in my brain, there was a conscious experience of my injured ankle before “I” became aware of it. Indeed, there may be many conscious cognitive processes that “I” never have cognitive access to. If this is the case, it can in principle be weakly suggested by certain kinds of studies (see Appendix H), and could in principle be strongly suggested once we have a compelling theory of consciousness.41 But for now, I’ll count the injury-related cognitive processing that happened “before I noticed it” as a likely negative example of conscious experience, while allowing that it could be discovered to be a positive example due to future scientific progress.

So perhaps we should say that “Phenomenal consciousness is the most folk psychologically obvious thing (or set of things) that the uncontested positive examples possess, and that the least-contested negative examples plausibly lack,”42 or something along those lines. Similarly, when I use related terms like “qualia” and “phenomenal properties,” I intend them to be defined by example as above, with as much metaphysical innocence as possible. Ideally, one would “flesh out” these definitions with many more examples and clarifications, but I shall leave that exercise to others.43

Importantly, this definition is as “innocent” and theory-neutral as I know how to make it. On this definition, consciousness could still be physical or non-physical, scientifically tractable or intractable, ubiquitous or rare, ineffable or not-ineffable, “real” or “illusory” (see next section), and so on. And in my revised version of Schwitzgebel’s definition, we are not committed to absolute certainty that purported negative examples will turn out to actually be negative examples as we learn more.

Furthermore, I do not define consciousness as “cognitive processes I morally care about,” as that blends together scientific explanation and moral judgment (see Appendix A) in a way that can be confusing to disentangle and interpret.

No doubt our concept of “consciousness” and related concepts will evolve over time in response to new discoveries, and our evolving concepts will influence which empirical inquiries we prioritize, and those inquiries will suggest further revisions to our concepts, as is typically the case.44 But in our current state of ignorance, I prefer to use a notion of “consciousness” that is defined as innocently as I can manage.

I must also stress that my aim here is not to figure out what we “mean” by “consciousness,” any more than Antonie van Leeuwenhoek (1632-1723) was, in studying microbiology, trying to figure out what people meant by “life.”45 Rather, my aim is to understand how the cluster of stuff we now naively call “consciousness” works. Once we understand how those things work, we’ll be in a better position to make moral judgments about which beings are and aren’t moral patients (insofar as consciousness-related properties affect those judgments, anyway). Whether we continue to use the concept of “consciousness” at that point is of little consequence. But for now, since we don’t yet know the details of how consciousness works, I will use terms like “consciousness” and “subjective experience” to point at the ill-defined cluster of stuff I’m talking about, as defined by example above.

2.3.2 My assumptions about the nature of consciousness

Despite preferring a metaphysically innocent definition of consciousness, I will, for this report, make four key assumptions about the nature of consciousness. It is beyond the scope of this report to survey and engage with the arguments for or against these assumptions; instead, I merely report what my assumptions are, and provide links to the relevant scholarly debates. My purpose here isn’t to contribute to these debates, but merely to explain “where I’m coming from.”

First, I assume physicalism. I assume consciousness will turn out to be fully explained by physical processes.46 Specifically, I lean toward a variety of physicalism called “type A materialism,” or perhaps toward the varieties of “type Q” or “type C” materialism that threaten to collapse into “type A” materialism anyway (see footnote47).

Second, I assume functionalism. I assume that anything which “does the right thing” — e.g., anything which implements a certain kind of information processing — is an example of consciousness, regardless of what that thing is made of.48 Compare to various kinds of memory, attention, learning, and so on: these processes are found not just in humans and animals, but also in, for example, some artificial intelligence systems.49 These kinds of memory, attention, and learning are implemented by a wide variety of substrates (but, they are all physical substrates). On the case for functionalism, see footnote.50

Third, I assume illusionism, at least about human consciousness. What this means is that I assume that some seemingly-core features of human conscious experience are illusions, and thus need to be “explained away” rather than “explained.” Consider your blind spot: your vision appears to you as continuous, without any spatial “gaps,” but physiological inspection shows us that there aren’t any rods and cones where your optic nerve exits the eyeball, so you can’t possibly be transducing light from a certain part of your (apparent) visual field. Knowing this, the job of cognitive scientists studying vision is not to explain how it is that your vision is really continuous despite the existence of your physiological blind spot, but instead to explain why your visual field seems to you to be continuous even though it’s not.51 We might say we are “illusionists” about continuous visual fields in humans.

Similarly, I think some core features of consciousness are illusions, and the job of cognitive scientists is not to explain how those features are “real,” but rather to explain why they seem to us to be real (even though they’re not). For example, it seems to us that our conscious experiences have “intrinsic” properties beyond that which could ever be captured by a functional, mechanistic account of consciousness. I agree that our experiences seem to us to have this property, but I think this “seeming” is simply mistaken. Consciousness (as defined above) is real, of course. There is “something it is like” to be us, and I doubt there is “something it is like” to be a chess-playing computer, and I think the difference is morally important. I just think our intuitions mislead us about some of the properties of this “something it’s like”-ness. (For elaborations on these points, see Appendix F.)

Fourth, I assume fuzziness about consciousness, both between and within individuals.52 In other words, I suspect that once we understand how “consciousness” works, there will be no clear dividing line between individuals that have no conscious experience at all and individuals that have any conscious experience whatsoever (I’ll call this “inter-individual fuzziness”),53 and I also suspect there will be no clear dividing line, within a single individual, between mental states or processes that are “conscious” and those which are “not conscious” (I’ll call this “intra-individual fuzziness”).54

Unfortunately, assuming fuzziness means that “wondering whether it is ‘probable’ that all mammals have [consciousness] thus begins to look like wondering whether or not any birds are wise or reptiles have gumption: a case of overworking a term from folk psychology that has [lost] its utility along with its hard edges.”55 One could say the same of questions about which cognitive processes within an individual are “conscious” vs. “unconscious,” which play a key role in arguments about which beings are conscious.

As the scientific study of “consciousness” proceeds, I expect our naive concept of consciousness to break down into a variety of different capacities, dispositions, representations, and so on, each of which will vary along many different dimensions in different beings. As that happens, we’ll be better able to talk about which features we morally care about and why, and there won’t be much utility to arguing about “where to draw the line” between which beings and processes are and aren’t “conscious.” But, given that we currently lack such a detailed decomposition of “consciousness,” I reluctantly organize this report around the notion of “consciousness,” and I write about “which beings are conscious” and “which cognitive processes are conscious” and “when such-and-such cognitive processing becomes conscious,” while pleading with the reader to remember that I think the line between what is and isn’t “conscious” is extremely “fuzzy” (and as a consequence I also reject any clear-cut “Cartesian theater.”)56 For more on the fuzziness of consciousness, see Appendix G.

My assumptions of physicalism and functionalism are quite confident, but probably don’t affect my conclusions about the distribution of consciousness very much anyway, except to make some common pathways to radical panpsychism less plausible.57 My assumption of illusionism is also quite confident, at least about human consciousness, but I’m not sure it implies much about the distribution question (see Appendix F). My assumption of fuzziness is moderately confident, and implies that the distribution question is difficult even to formulate, let alone answer, though I’m not sure it directly implies much about how extensive we should expect “consciousness” to be.

As with any similarly-sized set of assumptions about consciousness (see Appendix Z.5), my own set of assumptions is highly debatable. Physicalism and functionalism are fairly widely held among consciousness researchers, but are often debated and far from universal.58 Illusionism seems to be an uncommon position.59 I don’t know how widespread or controversial “fuzziness” is.

I’m not sure what to make of the fact that illusionism seems to be endorsed by a small number of theorists, given that illusionism seems to me to be “the obvious default theory of consciousness,” as Daniel Dennett argues.60 In any case, the debates about the fundamental nature of consciousness are well-covered elsewhere,61 and I won’t repeat them here.

A quick note about “eliminativism”: the physical processes which instantiate consciousness could turn out to be so different from our naive guesses about their nature that, for pragmatic reasons, we might choose to stop using the concept of “consciousness,” just as we stopped using the concept of “phlogiston.” Or, we might find a collection of processes that are similar enough to those presumed by our naive concept of consciousness that we choose to preserve the concept of “consciousness” and simply revise our definition of it, as happened when we eventually decided to identify “life” with a particular set of low-level biological features (homeostasis, cellular organization, metabolism, reproduction, etc.) even though life turned out not to be explained by any élan vital or supernatural soul, as many people throughout history62 had assumed.63 But I consider this only a possibility, not an inevitability. In other words, I’m not trying to take a strong position on “eliminativism” about consciousness here; I see that as a pragmatic issue to be decided later (see Appendix Z.6). For now, I think it’s easiest to talk about “consciousness,” “qualia,” and so on as truly existing phenomena that can be defined by example as above, despite those concepts having very “fuzzy” boundaries.

3 Specific efforts to sharpen my views about the distribution question

Now we turn to the key question: What is the likely distribution of phenomenal consciousness — as defined by example — across different taxa? (I call this the “distribution question.”)

Note that in this report, I’ll use “taxa” very broadly to mean “classes of systems,” including:

- Phylogenetic taxa, such as “primates,” “fishes,” “rainbow trout,” “plants,” and “bacteria.”

- Subsets of phylogenetic taxa, such as “humans in a persistent vegetative state” and “anencephalic infants.”

- Biological sub-systems, such as “human enteric nervous systems” and “non-dominant brain hemispheres of split-brain patients.”

- Classes of computer software and/or hardware, such as “deep reinforcement learning agents,” “industrial robots,” “versions of Microsoft Windows,” and “such-and-such application-specific integrated circuit.”

In the academic literature on the distribution question, the three most common argumentative strategies I’ve seen are:64

- Theory: Assume a particular theory of consciousness, then consider whether a specific taxon is likely to be conscious if that theory is true.

- Potentially consciousness-indicating features: Rather than relying on a specific theory of consciousness, instead suggest a list of behavioral and neurobiological/architectural features which intuitively suggest a taxon might be conscious. Then, check how many of those potentially consciousness-indicating features (PCIFs) are possessed by a given taxon. If the taxon possesses all or nearly all the PCIFs, conclude that its members are probably conscious. If the taxon possesses very few of the proposed PCIFs, conclude that its members probably are not conscious.

- Necessary or sufficient conditions: Another approach is to argue that some feature is likely necessary for consciousness (e.g. a neocortex), or that some feature is likely sufficient for consciousness (e.g. mirror self-recognition), without relying on any particular theory of consciousness. If successful, such arguments might not give us a detailed picture of which systems are and aren’t conscious, but they might allow us to conclude that some particular taxa either are or aren’t conscious.

Below, I consider each of these approaches in turn, and then I consider various big-picture considerations that “pull” toward or away from a “consciousness is rare” conclusion (here).

3.1 Theories of consciousness

I briefly familiarized myself with several physicalist functionalist theories of consciousness, listed in Appendix Z.1. Overall, my sense is that the current state of our scientific knowledge is such that it is difficult to tell whether any currently proposed theory of consciousness is promising. My impression from the literature I’ve read, and from the conversations I’ve had, is that many (perhaps most) consciousness researchers agree,65 even though some of the most well-known consciousness researchers are well-known precisely because they have put forward specific theories they see as promising. But if most researchers agreed with their optimism, I would expect theories of consciousness to have been winnowed over the last couple of decades, rather than continuing to proliferate,66 under a huge variety of metaphysical and methodological assumptions, as they currently do. (In other words, consciousness studies seems to be in what Thomas Kuhn called a “pre-paradigmatic stage of development.”67)

One might also argue about the distribution question not from the perspective of theories of how consciousness works, but from theories of how consciousness evolved (see the list in Appendix Z.5). Unfortunately, I didn’t find any of these theories any more convincing than currently available theories of how consciousness works.68

Given the unconvincing-to-me nature of current theories of consciousness (see also Appendix B), I decided to pursue investigation strategies that do not require me to put much emphasis on any specific theories of consciousness, starting with the “potentially consciousness-indicating features” strategy described in the next section.

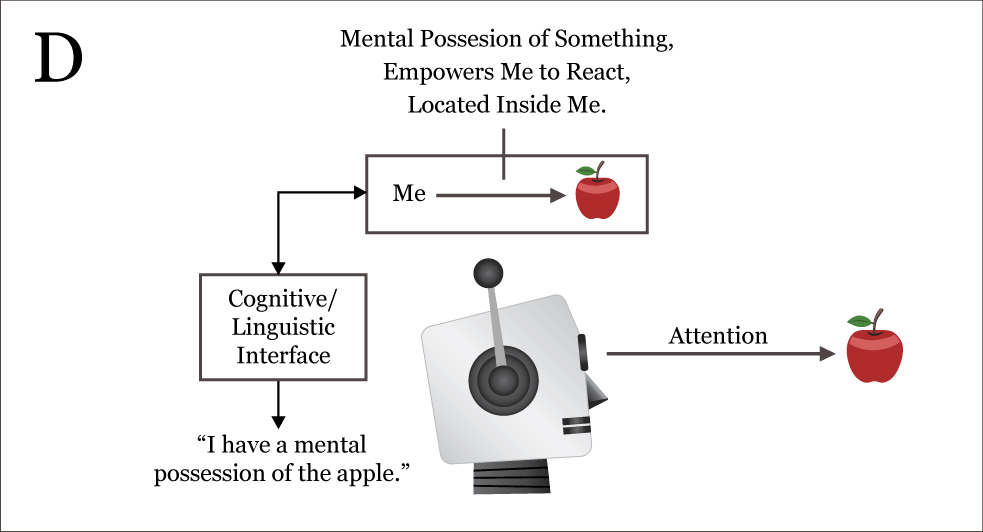

First, though, I’ll outline one example theory of consciousness, so that I can explain what I find unsatisfying about currently-available theories of consciousness. In particular, I’ll describe Michael Tye’s PANIC theory,69 which is an example of “first-order representationalism” (FOR) about consciousness.

3.1.1 PANIC as an example theory of consciousness

To explain Tye’s PANIC theory, I need to explain what philosophers mean by “representation.”70 For philosophers, a representation is a thing that carries information about something else. An image of a flower carries information about a flower. The sentence “The flower smells good” carries information about a flower — specifically, the information that it smells good. Perhaps a nociceptive signal represents, to some brain module, that there is tissue damage of a certain sort occurring at some location on the body. There can also be representations that carry information about things that don’t exist, such as Luke Skywalker. If a representation mischaracterizes the thing it is about in some important way, we say that it is misrepresenting its target. Representational theories of consciousness, then, say that if a system does the right kind of representing, then that system is conscious.

Michael Tye’s PANIC theory, for example, claims that a mental state is phenomenally conscious if it has some Poised, Abstract, Nonconceptual, Intentional Content (PANIC). I’ll briefly summarize what that means.

To simplify just a bit, “intentional content” is just a phrase that (in philosophy) means “representational content” or “representational information.” What about the other three terms?

- Poised: Conscious representational contents, unlike unconscious representational contents, must be suitably “poised” to play a certain kind of functional role. Specifically, they are poised to impact beliefs and desires. E.g. conscious perception of an apple can change your belief about whether there are apples in your house, and the conscious feeling of hunger can create a desire to eat.

- Abstract: Conscious representational contents are representations of “general features or properties” rather than “concrete objects or surfaces,” e.g. because in hallucinatory experiences, “no concrete objects need be present at all,” and because under some circumstances two different objects can “look exactly alike phenomenally.”71

- Nonconceptual: The representational contents of consciousness are more detailed than anything we have words or concepts for. E.g. you can consciously perceive millions of distinct colors, but you don’t have separate concepts for red₁₇ and red₁₈, even though you can tell them apart when they are placed next to each other.

So according to Tye, conscious experiences have poised, abstract, nonconceptual, representational contents. If a representation is missing one of these properties, then it isn’t conscious. For example, consider how Tye explains the consistency of his PANIC theory of consciousness with the phenomenon of blindsight:72

…given a suitable elucidation of the “poised” condition, blindsight poses no threat to [my theory]. Blindsight subjects are people who have large blind areas or scotoma in their visual fields due to brain damage… They deny that they can see anything at all in their blind areas, and yet, when forced to guess, they produce correct responses with respect to a range of simple stimuli (for example, whether an X or an O is present, whether the stimulus is moving, where the stimulus is in the blind field).

If their reports are to be taken at face value, blindsight subjects… have no phenomenal consciousness in the blind region. What is missing, on the PANIC theory, is the presence of appropriately poised, nonconceptual, representational states. There are nonconceptual states, no doubt representationally impoverished, that make a cognitive difference in blindsight subjects. For some information from the blind field does reach the cognitive centers and controls their guessing behavior. But there is no complete, unified representation of the visual field, the content of which is poised to make a direct difference in beliefs. Blindsight subjects do not believe their guesses. The cognitive processes at play in these subjects are not belief-forming at all.

Now that I’ve explained Tye’s theory, I’ll use it to illustrate why I find it (and other theories of consciousness) unsatisfying.

In my view, a successful explanation of consciousness would show how the details of some theory (such as Tye’s) predict, with a fair amount of precision, the explananda of consciousness — i.e., the specific features of consciousness that we know about from our own phenomenal experience and from (reliable, validated) cases of self-reported conscious experience (e.g. in experiments, or in brain lesion studies). For example, how does Tye’s theory of consciousness explain the details of the reports we make about conscious experience? What concept of “belief” does the theory refer to, such that the guesses of blindsight subjects do not count as “beliefs,” but (presumably) some other weakly-held impressions do count? Does PANIC theory make any testable, fairly precise predictions akin to the testable prediction Daniel Dennett made on the final page of Consciousness Explained?73

In short, I think that current theories of consciousness (such as Tye’s) simply do not “go far enough” — i.e., they don’t explain enough consciousness explananda, with enough precision — to be compelling (yet). In Appendix B, I discuss this issue in more detail, and describe how one might construct a theory of consciousness that explains more consciousness explananda, with more precision, than Tye’s theory (or any other theory I’m aware of) does.

3.2 Potentially consciousness-indicating features (PCIFs)

3.2.1 How PCIF arguments work

The first theory-agnostic approach to the distribution question that I examined was the approach of “arguments by analogy” or, as I call them, “arguments about potentially consciousness-indicating features (PCIFs).”74

As Varner (2012) explains, analogy-driven arguments appeal to the principle that because things P and Q share many “seemingly relevant” features (a, b, c, …n), and we know that P has some additional property x, we should infer that Q probably has property x, too.

After all, it is by such an analogy that I believe other humans are conscious. I cannot directly observe that my mother is conscious, but she talks about consciousness like I do, she reacts to stimuli like I do, she has a brain that is virtually identical to my own in form and function and evolutionary history, and so on. And since I know I am conscious, I conclude that my mother is conscious as well.75 The analogy between myself and a chimpanzee is weaker than that between myself and my mother, but it is, we might say, “fairly strong.” The analogy between myself and a pig is weaker still. The analogies between myself and a fish are even weaker but, some argue, still strong enough that we should put some substantial probability on fish consciousness.

One problem with analogy-driven arguments, and one reason they are difficult to fully separate from theory-driven arguments, is this: to decide how salient a given analogy between two organisms is, we need some “guiding theory” about what consciousness is, or what its function is. Varner (2012) explains:76

[The] point about needing such a “guiding theory” can be illustrated with this obviously bad argument by analogy:

- Both turkeys (P) and cattle (Q) are animals, they are warm blooded, they have limited stereoscopic vision, and they are eaten by humans (a, b, c, …, and n).

- Turkeys are known to hatch from eggs (x).

- So probably cattle hatch from eggs, too.

One could come up with more and more analogies to list (e.g., turkeys and cattle both have hearts, they have lungs, they have bones, etc., etc.). The above argument is weak, not because of the number of analogies considered, but because it ignores a crucial disanalogy: that cattle are mammals, whereas turkeys are birds, and we have very different theories about how the two are conceived, and how they develop through to birth and hatching, respectively. Another way of putting the point would be to say that the listed analogies are irrelevant because we have a “guiding theory” about the various ways in which reproduction occurs, and within that theory the analogies listed above are all irrelevant…

So in assessing an argument by analogy, we do not just look at the raw number of analogies cited. Rather, we look at both how salient are the various analogies cited and whether there are any relevant disanalogies, and we determine how salient various comparisons are by reference to a “guiding theory.”

Unfortunately, as explained above, it isn’t clear to me what our guiding theory about consciousness should be. Because of this, I present below a table that includes an unusually wide variety of PCIFs that I have seen suggested in the literature, along with a few of my own. From this initial table, one can use one’s own guiding theories to discard or de-emphasize various PCIFs (rows), perhaps temporarily, to see what doing so seems to suggest about the likely distribution of consciousness.

Another worry is that one’s choices about which PCIFs to include, and which taxa to check for those PCIFs, can bias the conclusions of such an exercise.77 To mitigate this problem, my table below is unusually comprehensive with respect to both PCIFs and taxa. As a result, I could not afford the time to fill out most cells in the table, and thus my conclusions about the utility and implications of this approach (below) are limited.

3.2.2 A large (and incomplete) table of PCIFs and taxa

The taxa represented in the table below were selected either (a) for comparison purposes (e.g. human, bacteria), or (b) because they are killed or harmed in great numbers by human activity and are thus plausible targets of welfare interventions if they are thought of as moral patients (e.g. chickens, fishes), or (c) a mix of both. To represent each very broad taxon of interest (e.g. fishes, insects), I chose a representative sub-taxon that has been especially well-studied (e.g. rainbow trout, common fruit fly). More details on the taxa and PCIFs I chose, and why I chose them, are provided in a footnote.78

One column below — “Function sometimes executed non-consciously in humans?” — requires special explanation. Many behavioral and neurofunctional PCIFs can be executed by humans either consciously or non-consciously. In fact, most cognitive processing in humans seems to occur non-consciously, and humans sometimes engage in fairly sophisticated behaviors without conscious awareness of them, as in (it is often argued) cases of sleepwalking, or when someone daydreams while driving a familiar route, or in cases of absence seizures involving various “automatisms” like this one described by Antonio Damasio:79

…a man sat across from me… [and] we talked quietly. Suddenly the man stopped, in midsentence, and his face lost animation; his mouth froze, still open, and his eyes became vacuously fixed on some point on the wall behind me. For a few seconds he remained motionless. I spoke his name but there was no reply. Then he began to move a little, he smacked his lips, his eyes shifted to the table between us, he seemed to see a cup of coffee and a small metal vase of flowers; he must have, because he picked up the cup and drank from it. I spoke to him again and again he did not reply. He touched the vase. I asked him what was going on, and he did not reply, his face had no expression. He did not look at me. Now, he rose to his feet and I was nervous; I did not know what to expect. I called his name and he did not reply. When would this end? Now he turned around and walked slowly to the door. I got up and called him again. He stopped, he looked at me, and some expression returned to his face — he looked perplexed. I called him again, and he said, “What?”

For a brief period, which seemed like ages, this man suffered from an impairment of consciousness. Neurologically speaking, he had an absence seizure followed by an absence automatism, two among the many manifestations of epilepsy…

If such PCIFs are observed in humans either with or without consciousness, then perhaps the case for them as being indicative of consciousness in other taxa is less strong than one might think:80 e.g. are fishes conscious of their behavior, or are they continuously “sleepwalking”?81

In the table below, a cell is left blank if I didn’t take the time to investigate, or in some cases even think about, what its value should be, or if I investigated briefly but couldn’t find a clear value for the cell. A cell’s value is “n/a” when a PCIF is not applicable to that taxon, and it is “unavailable” when I’m fairly confident the relevant data has not (as of December 2016) been collected. In some cases, data are not available for my taxon of choice, but I guess or estimate the value of that cell from data available for a related taxon (e.g. a closely related species), and in cases where this leaves me with substantial uncertainty about the appropriate value for that cell, I indicate my extra uncertainty with a question mark, an “approximately” tilde symbol (“~”) for scalar data, or both. To be clear: a question mark does not necessarily indicate that domain experts are uncertain about the appropriate value for that cell of the table; it merely means that I am substantially uncertain, given the very few sources I happened to skim. Sources and reasoning for the value in each cell are given in the footnote immediately following each row’s PCIF.
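The cell conventions just described can be made concrete with a small parser. This is only a minimal sketch, assuming each cell arrives as a raw string; the names `Cell` and `parse_cell` are hypothetical and are not part of the report. It separates the four kinds of cell (blank, “n/a,” “unavailable,” or an actual value) from the two uncertainty markers (“?” and “~”).

```python
from dataclasses import dataclass
from typing import Optional, Union


@dataclass(frozen=True)
class Cell:
    """One table cell, split into its status and its uncertainty markers."""
    status: str                                  # "blank", "n/a", "unavailable", or "value"
    value: Optional[Union[float, str]] = None    # number or label, when status == "value"
    uncertain: bool = False                      # trailing "?" marker
    approximate: bool = False                    # leading "~" marker (scalar data)


def parse_cell(raw: str) -> Cell:
    """Parse a raw cell string using the conventions described in the text."""
    text = raw.strip()
    if not text:
        return Cell("blank")                     # not investigated, or no clear value found
    uncertain = text.endswith("?")
    if uncertain:
        text = text[:-1].strip()
    approximate = text.startswith("~")
    if approximate:
        text = text[1:].strip()
    lowered = text.lower()
    if lowered in ("n/a", "unavailable"):
        return Cell(lowered, None, uncertain, approximate)
    try:
        value: Union[float, str] = float(text)   # scalar data such as "385" or "0.12"
    except ValueError:
        value = text                             # labels such as "Yes" or "No"
    return Cell("value", value, uncertain, approximate)
```

Keeping the markers separate from the value means that “Yes?” and “Yes” share the same value but can still be filtered by how much uncertainty one is willing to accept.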

The values of the cells in this table have not been vetted by any domain experts. In many cases, I populated a cell with a value drawn from a single study, without reading the study carefully or trying hard to ensure I was interpreting it correctly. Moreover, I suspect many of the studies used to populate the cells in this table would not hold up under deeper scrutiny or upon a rigorous replication attempt (see below). Hence, the contents of this table should be interpreted as a set of tentative estimates and guesses, collected hastily by a non-expert.

Because the table doesn’t fit on the page, the table must be scrolled horizontally and vertically to view all its contents.

| POTENTIALLY CONSCIOUSNESS-INDICATING FEATURE | HUMAN | CHIMPANZEE | COW | CHICKEN | RAINBOW TROUT | GAZAMI CRAB | COMMON FRUIT FLY | E. COLI | FUNCTION SOMETIMES EXECUTED NON-CONSCIOUSLY IN HUMANS? | HUMAN ENTERIC NERVOUS SYSTEM |

|---|---|---|---|---|---|---|---|---|---|---|

| Last common ancestor with humans (Mya)82 | n/a | 6.7 | 96.5 | 311.9 | 453.3 | 796.6 | 796.6 | 4290 | n/a | n/a |

| Category: Neurobiological features | ||||||||||

| Adult average brain mass (g)83 | 1509 | 385 | 480.5 | 3.5 | 0.2 | n/a | n/a | |||

| Neurons in brain (millions)84 | 86060 | unavailable | unavailable | ~221 | unavailable | unavailable | 0.12 | n/a | n/a | 400 |

| Neurons in pallium (millions)85 | 16340 | unavailable | unavailable | ~60.7 | unavailable | n/a | n/a | n/a | n/a | n/a |

| Encephalization quotient86 | 7.6 | 2.35 | n/a | n/a | n/a | |||||

| Has a neocortex87 | Yes | Yes | Yes | No | No | n/a | n/a | n/a | ||

| Has a central nervous system88 | Yes | Yes | Yes | Yes | Yes | Yes | Yes | No | n/a | n/a |

| Category: Nociceptive features | ||||||||||

| Has nociceptors89 | Yes | Yes | Yes | Yes | Yes | Yes? | Yes | Yes? | n/a | Yes? |

| Has neural nociceptors90 | Yes | Yes | Yes | Yes | Yes | Yes? | Yes | No | n/a | Yes? |

| Nociceptive reflexes91 | Yes | Yes | Yes | Yes | Yes | Yes? | Yes? | Yes? | Yes | |

| Physiological responses to nociception or handling92 | Yes | Yes | Yes | Yes | Yes | n/a | n/a | |||

| Long-term alteration in behavior to avoid noxious stimuli93 | Yes | |||||||||

| Taste aversion learning94 | Yes | |||||||||

| Protective behavior (e.g. wound guarding, limping, rubbing, licking)95 | Yes | Yes | Yes? | Yes? | Yes? | |||||

| Nociceptive reflexes or avoidant behaviors reduced by analgesics96 | Yes | Yes | Yes | Yes | Yes | |||||

| Self-administration of analgesia97 | Yes | Yes | Yes | |||||||

| Will pay a cost to access analgesia98 | Yes | |||||||||

| Selective attention to noxious stimuli over other concurrent events99 | Yes | Yes | ||||||||

| Pain-relief learning100 | Yes | Yes | ||||||||

| Category: Other behavioral/cognitive features | ||||||||||

| Reports details of conscious experiences to scientists101 | Yes | No | No | No | No | No | No | No | No | No |

| Cross-species measures of general cognitive ability102 | ||||||||||

| Plastic behavior103 | Yes | |||||||||

| Detour behaviors104 | Yes | Yes | ||||||||

| Play behaviors105 | Yes | Yes | Yes | Yes? | Yes? | n/a | ||||

| Grief behaviors106 | Yes | |||||||||

| Expertise107 | Yes | |||||||||

| Goal-directed behavior108 | Yes | |||||||||

| Mirror self-recognition109 | Yes | Yes | unavailable? | unavailable? | unavailable? | unavailable? | unavailable? | n/a | n/a | |

| Mental time travel110 | Yes | |||||||||

| Distinct sleep/wake states111 | Yes | |||||||||

| Advanced social politics112 | Yes | |||||||||

| Uncertainty monitoring113 | Yes | probably? | unavailable? | unavailable? | unavailable? | unavailable? | unavailable? | |||

| Intentional deception114 | Yes | |||||||||

| Teaching others115 | ||||||||||

| Abstract language capabilities116 | Yes | |||||||||

| Intentional agency117 | Yes | |||||||||

| Understands pointing at distant objects118 | Yes | |||||||||

| Non-associative learning119 | Yes | |||||||||

| Tool use120 | Yes | |||||||||

| Can spontaneously plan for future days without reference to current motivational state121 | Yes | unavailable? | unavailable? | unavailable? | unavailable? | unavailable? | ||||

| Can take into account another’s spatial perspective122 | Yes | Yes? | unavailable? | unavailable? | unavailable? | unavailable? | n/a | n/a | ||

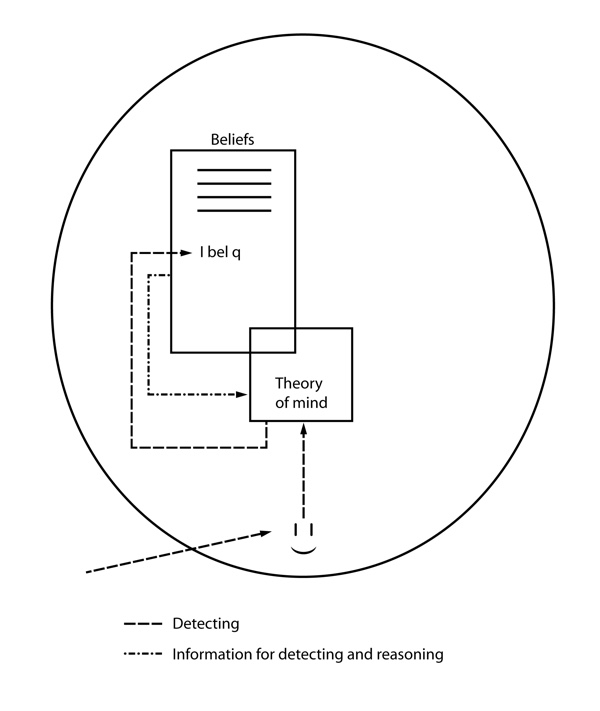

| Theory of mind123 | Yes |

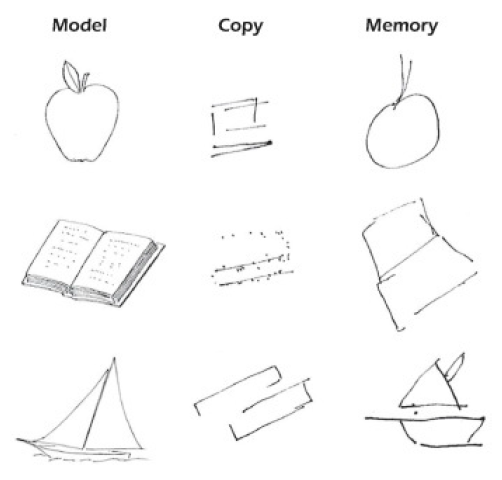

A fuller examination of the PCIFs approach, which I don’t conduct here, would involve (1) explaining these PCIFs in some detail, (2) cataloging and explaining the strength of the evidence for their presence or absence (or scalar value) for a wide variety of taxa, (3) arguing for some set of “weights” representing how strongly each of these PCIFs indicates consciousness and why, with some PCIFs perhaps being assigned ~0 weight, and (4) arguing for some resulting substantive conclusions about the likely distribution of consciousness.
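Steps (3) and (4) can be illustrated with a short Python sketch. Everything in it is an assumption made for illustration: the function name `pcif_score`, the feature keys, and especially the weights are placeholders, not values this report argues for. The sketch computes the weighted share of PCIFs that are present among those with a known value, and separately reports how much of the total weight is covered by known values, so that a sparse row is not mistaken for a confident result.

```python
from typing import Dict, Optional


def pcif_score(weights: Dict[str, float],
               observed: Dict[str, Optional[bool]]) -> Dict[str, float]:
    """Weighted share of present PCIFs among those with a known value.

    `observed` maps a feature to True (present), False (absent),
    or None (blank or unavailable, so it is skipped).
    """
    total_weight = sum(weights.values())
    known_weight = 0.0
    present_weight = 0.0
    for feature, weight in weights.items():
        value = observed.get(feature)
        if value is None:
            continue                      # unknown cells contribute nothing
        known_weight += weight
        if value:
            present_weight += weight
    score = present_weight / known_weight if known_weight else float("nan")
    coverage = known_weight / total_weight if total_weight else 0.0
    return {"score": score, "coverage": coverage}


# Placeholder weights, chosen only to keep the arithmetic easy to follow.
weights = {
    "has_neocortex": 1.0,
    "has_nociceptors": 1.0,
    "mirror_self_recognition": 2.0,
}
# Hypothetical row for one taxon; None marks a cell with no usable value.
taxon = {
    "has_neocortex": False,
    "has_nociceptors": True,
    "mirror_self_recognition": None,
}
result = pcif_score(weights, taxon)  # score 0.5, coverage 0.5
```

Setting a weight to 0 removes that PCIF from the calculation, which corresponds to discarding a row on the basis of one’s guiding theory.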

3.2.3 My overall thoughts on PCIF arguments

Given that my table of PCIFs and taxa is so incomplete, not much can be concluded from it concerning the distribution question. However, my investigation into analogy-driven arguments, and my incomplete attempt to construct my own table of analogies, left me with some impressions I will now share (but not defend).

First, I think that analogy-driven arguments about the distribution of consciousness typically draw from far too narrow a range of taxa and PCIFs. In particular, it seems to me that analogy-driven arguments, as they are typically used, do not take seriously enough the following points:

- Many commonly-used PCIFs are executed both with and without conscious awareness in humans (e.g. at different times), and are thus not particularly compelling evidence for the presence of consciousness in non-humans (without further argument).124

- Many commonly-used PCIFs are possessed by biological subsystems which are typically thought to be non-conscious, for example the enteric nervous system and the spinal cord.125

- Many commonly-used PCIFs are possessed by simple, short computer programs, or in other cases by more complicated programs in widespread use (such as Microsoft Windows or policy gradients). Yet, these programs are typically thought to be non-conscious, even by functionalists.126

- Many commonly-used PCIFs are possessed by plants and bacteria and other very “simple” organisms, which are typically thought to be non-conscious.127 For example, a neuron-less slime mold can store memories, transfer learned behaviors to conspecifics, escape traps, and solve mazes.128

- Analogy-driven arguments typically make use of a very short list of PCIFs, and a very short list of taxa. Including more taxa and PCIFs would, I think, give a more balanced picture of the situation.

Second, I think analogy-driven arguments about consciousness too rarely stress the general point that “functionally similar behavior, such as communicating, recognizing neighbors, or way finding, may be accomplished in different ways by different kinds of animals.”129 This holds true for software as well130 — consider the many different algorithms that can be used to sort information, or implement a shared memory system, or make complex decisions,131 or learn from data.132 Clearly, many behavioral PCIFs can be accomplished by many different means, and for any given behavioral PCIF, it may be the case that it is achieved with the help of conscious awareness in some cases, and without conscious awareness in other cases.
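The sorting case is easy to show directly. The following minimal sketch (an illustration, not drawn from the sources cited above) implements two standard algorithms, insertion sort and merge sort. From the outside they are indistinguishable, since they return the same sorted list for the same input, but internally they proceed by quite different means, which the returned comparison counts make visible.

```python
from typing import List, Tuple


def insertion_sort(items: List[int]) -> Tuple[List[int], int]:
    """Sort by shifting each element left into place; returns (sorted, comparisons)."""
    out = list(items)
    comparisons = 0
    for i in range(1, len(out)):
        key = out[i]
        j = i - 1
        while j >= 0:
            comparisons += 1
            if out[j] <= key:
                break
            out[j + 1] = out[j]       # shift the larger element right
            j -= 1
        out[j + 1] = key
    return out, comparisons


def merge_sort(items: List[int]) -> Tuple[List[int], int]:
    """Sort by recursively splitting and merging; returns (sorted, comparisons)."""
    if len(items) <= 1:
        return list(items), 0
    mid = len(items) // 2
    left, left_count = merge_sort(items[:mid])
    right, right_count = merge_sort(items[mid:])
    merged: List[int] = []
    comparisons = left_count + right_count
    i = j = 0
    while i < len(left) and j < len(right):
        comparisons += 1
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])           # at most one of these is non-empty
    merged.extend(right[j:])
    return merged, comparisons


data = [5, 2, 4, 6, 1, 3]
by_insertion, insertion_steps = insertion_sort(data)
by_merging, merge_steps = merge_sort(data)
```

An observer who sees only `by_insertion` and `by_merging` cannot tell which procedure produced which output; only the internal traces differ.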

Third, analogy-driven arguments typically do not (in my opinion) take seriously enough the possibility of hidden qualia (i.e. qualia inaccessible to introspection; see Appendix H), which has substantial implications for how one should weight the evidential importance of various PCIFs against each other. For example, one might argue that the presence of feature A should be seen as providing stronger evidence of consciousness than the presence of feature B does, because in humans we only observe feature A with conscious accompaniments, but we do sometimes (in humans) observe feature B without conscious accompaniments. But if hidden qualia exist, then perhaps our observations of B “without conscious accompaniments” are mistaken, and these are merely observations of B without conscious accompaniments accessible to introspection.

Fourth, analogy-driven arguments typically do not (in my opinion) take seriously enough the possibility that the ethology literature would suffer from its own “replication crisis” if rigorous attempts at mass replication were undertaken (see here).

3.3 Searching for necessary or sufficient conditions

How else might we learn something about the distribution question, without putting much weight on any single, specific theory of consciousness?

One possibility is to argue that some structure or capacity is likely necessary for consciousness — without relying much on any particular theory of consciousness — and then show that this structure or capacity is present for some taxa and not others. This wouldn’t necessarily prove which taxa are conscious, but it would tell us something about which ones aren’t.

Another possibility is to argue that some structure or capacity is likely sufficient for consciousness, and then show that this structure or capacity is present for some taxa and not others. This wouldn’t say much about which taxa aren’t conscious, but it would tell us something about which ones are.

(Technically, all potential necessary or sufficient conditions are just PCIFs, but with a different “strength” to their indication of consciousness.133 I discuss them separately in this report mainly for organizational reasons.)

Of course, we’d want such necessary or sufficient conditions to be “substantive.” For example, I think there’s a pretty strong case that information processing of some sort is a necessary condition for consciousness, but this doesn’t tell me much about the distribution of consciousness: even bacteria process information. I also think there’s a pretty strong case that “human neurobiology plus detailed self-report of conscious experience” should be seen as sufficient evidence for consciousness, but again this doesn’t tell me anything novel or interesting about the distribution question.

I assume that at this stage of scientific progress we cannot definitely prove a “substantive” necessary or sufficient condition for consciousness, but can we make a “moderately strong argument” for some such necessary or sufficient condition?

Below I consider the case for just one proposed necessary or sufficient condition for consciousness.134 There are other candidates I could have investigated,135 but I decided not to at this time.

3.3.1 Is a cortex required for consciousness?

One commonly proposed necessary condition for phenomenal consciousness is possession of a cortex, or sometimes possession of a neocortex, or possession of a specific part of the neocortex such as the association cortex. Collectively, I’ll refer to these as “cortex-required views” (CRVs). Below, I report my findings about the credibility of CRVs.

Even sources arguing against CRVs often acknowledge that, for many years, it has been commonly believed by cognitive scientists and medical doctors that the cortex is the organ of consciousness in humans,136 though it’s not clear whether they would have also endorsed the much stronger claim that a cortex is required for consciousness in general. However, some experts have recently lost confidence that the cortex is required for consciousness (even just in humans), for several reasons (which I discuss below).137

One caveat about the claims above, and throughout the rest of this section, is that different authors appeal to slightly different definitions of “consciousness,” and so it is not always the case that the authors I cite or quote explicitly argued for or against the view that a cortex is required for “consciousness” as defined above. Still, these arguments are sometimes used by others to make claims about the dependence or non-dependence of consciousness (as defined above) on a cortex, and certainly the arguments could easily be adapted to make such claims.

In this section, I describe some of the evidence used to argue for and against a variety of cortex-required views. As with the table of PCIFs and taxa above, please keep in mind that I am not an expert on the topics reviewed below, and my own understanding of these topics is based on a quick and shallow reading of various overview books and articles, plus a small number of primary studies.138

3.3.1.1 Arguments for cortex-required views

For decades, much (but not all) of the medical literature and the bioethics literature more-or-less assumed one or another CRV, at least in the case of humans, without much argument.139 In those sources which argue for a CRV,140 I typically see two types of arguments:

1. For multiple types of cognitive processing (visual processing, emotional processing, etc.), we have neuroimaging evidence and other evidence showing that we are consciously aware of activity occurring in (some regions of) the cortex, but we are not aware of activity occurring outside (those regions of) the cortex.

2. In cases where (certain kinds of) cortical operations are destroyed or severely disrupted, conscious experience seems to be abolished. When those cortical operations are restored, conscious experience returns.

According to James Rose141 and some other proponents of one or another CRV, the case for CRVs about consciousness comes not from any one line of evidence, but from several converging lines of evidence of types (1) and (2), all of which somewhat-independently suggest that conscious processing must be subserved by certain regions of the cortex, whereas unconscious processing can be subserved by other regions. If this is true, this could provide a very suggestive case in favor of some kind of CRV about consciousness. (Though, this suggestive case would still be undercut somewhat by the possibility of hidden qualia.)

Unfortunately, I did not have the time to survey several different lines of evidence to check whether they converged in favor of some kind of CRV. Instead, I examine below just one of these lines of evidence — concerning conscious and unconscious vision — in order to illustrate how the case for a CRV could be constructed, if other lines of evidence showed a similar pattern of results.

3.3.1.2 Unconscious vision

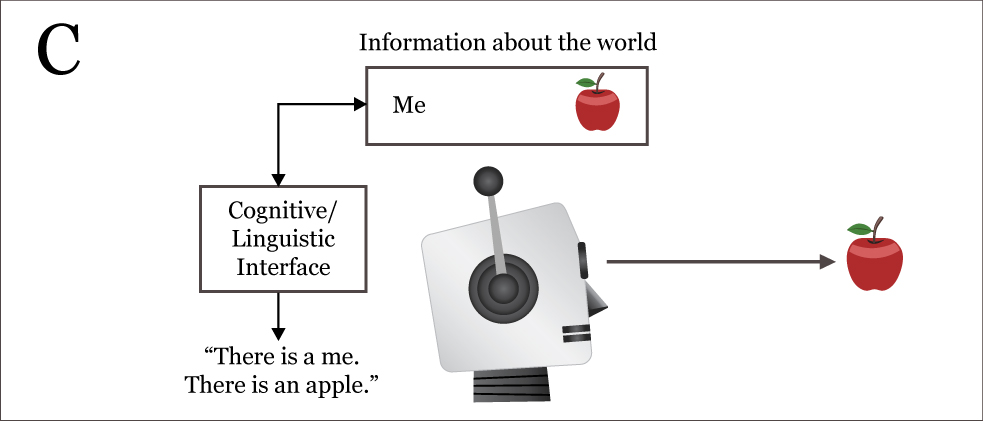

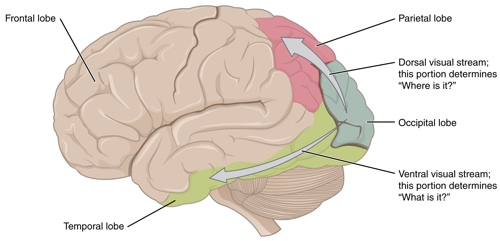

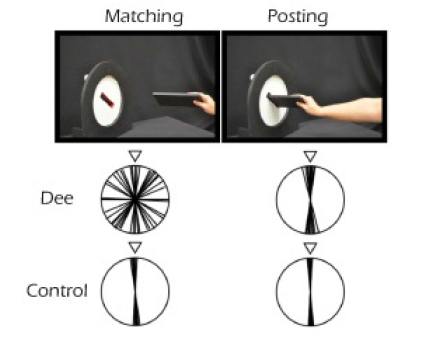

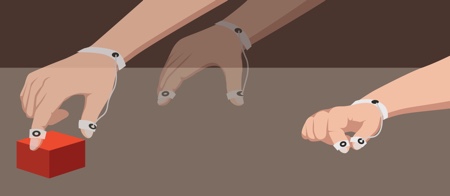

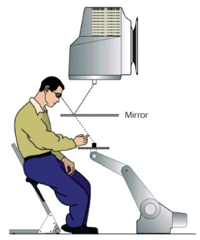

Probably the dominant142 (but still contested) theory of human visual processing holds that most human visual processing occurs in two largely (but not entirely) separate streams of processing. According to this theory, the ventral stream, also known as “vision for perception,” serves to recognize and identify objects and people, and typically leads to conscious visual experience. The dorsal stream, also known as “vision for action,” serves to locate objects precisely and interact with them, but is not part of conscious experience.143 Below, I summarize what this theory says, but I don’t summarize the evidence in favor of the theory. I summarize that evidence in Appendix C.

These two streams are thought to be supported by different regions of the cortex, as shown below:

In primates, most visual information from the retina passes through the lateral geniculate nucleus (LGN) in the thalamus on its way to the primary visual cortex (V1) in the occipital lobe at the back of the skull.144 From there, visual information is passed from V1 to two separate streams of processing. The ventral stream leads into the inferotemporal cortex in the temporal lobe, while the dorsal stream leads into the posterior parietal cortex in the parietal lobe. (The dorsal stream also receives substantial input from several subcortical structures in addition to its inputs from V1, whereas the ventral stream depends almost entirely on inputs from V1.145)

To illustrate how these systems are thought to interact, consider an analogy to the remote control of a robot in a distant or hostile environment (e.g. Mars):146

In tele-assistance, a human operator identifies and “flags” the goal object, such as an interesting rock on the surface of Mars, and then uses a symbolic language to communicate with a semi-autonomous robot that actually picks up the rock.

A robot working with tele-assistance is much more flexible than a completely autonomous robot… Autonomous robots work well in situations such as an automobile assembly line, where the tasks they have to perform are highly constrained and well specified… But autonomous robots… [cannot] cope with events that its programmers [have] not anticipated…

At present, the only way to make sure that the robot does the right thing in unforeseen circumstances is to have a human operator somewhere in the loop. One way to do this is to have the movements or instructions of the human operator… simply reproduced in a one-to-one fashion by the robot… [but this setup] cannot cope well with sudden changes in scale (on the video monitor) or with a significant delay between the communicated action and feedback from that action [as with a Mars robot]. This is where tele-assistance comes into its own.

In tele-assistance the human operator doesn’t have to worry about the real metrics of the workspace or the timing of the movements made by the robot; instead, the human operator has the job of identifying a goal and specifying an action toward that goal in general terms. Once this information is communicated to the semi-autonomous robot, the robot can use its on-board range finders and other sensing devices to work out the required movements for achieving the specified goal. In short, tele-assistance combines the flexibility of tele-operation with the precision of autonomous robotic control.

…[By analogy,] the perceptual systems in the ventral stream, along with their associated memory and other higher-level cognitive systems in the brain, do a job rather like that of the human operator in tele-assistance. They identify different objects in the scene, using a representational system that is rich and detailed but not metrically precise. When a particular goal object has been flagged, dedicated visuomotor networks in the dorsal stream, in conjunction with output systems elsewhere in the brain… are activated to perform the desired motor act. In other words, dorsal stream networks, with their precise egocentric coding of the location, size, orientation, and shape of the goal object, are like the robotic component of tele-assistance. Both systems have to work together in the production of purposive behavior — one system to help select the goal object from the visual array, the other to carry out the required metrical computations for the goal-directed action.

If something like this account is true — and it might not be; see Appendix C — then it could be argued to fit with a certain kind of CRV, according to which some parts of the cortex — those which include the ventral stream but not the dorsal stream — are required for conscious experience (at least in humans).147

3.3.1.3 Suggested other lines of evidence for CRVs

On its own, this theory of conscious and unconscious vision is not very suggestive, but if several different types of cognitive processing tell a similar story — with all of them seeming to depend on certain areas of the cortex for conscious processing, with unconscious processing occurring elsewhere in the brain — then this could add up to a suggestive argument for some kind of CRV.

Here are some other bodies of evidence that could (but very well might not) turn out to collectively suggest some sort of CRV about consciousness:

- Preliminary evidence suggests there may be multiple processing streams for other sense modalities, too, but I haven’t checked whether this evidence is compatible with CRVs.148

- There is definitely “unconscious pain” (technically, unconscious nociception), but I haven’t checked whether the evidence is CRVs-compatible. (See my linked sources on this in Appendix D.)

- There are both conscious and unconscious aspects to our emotional responses, but I haven’t checked whether the relevant evidence is CRVs-compatible. (See Appendix Z.4.)

- Likewise, there are both conscious and unconscious aspects of (human) learning and memory,149 but I haven’t checked whether the relevant evidence is CRVs-compatible.

- According to Laureys (2005), patients in a persistent vegetative state (PVS), who are presumed to be unconscious, show greatly reduced activity in the associative cortices, and also show disrupted cortico-cortical and thalamo-cortical connectivity. Laureys also says that recovery from PVS is accompanied by restored connectivity of some of these thalamo-cortical pathways.150

- The mechanism by which general anesthetics abolish consciousness in humans isn’t well-understood, but at least one live hypothesis is that (at least some) general anesthetics abolish consciousness primarily by disrupting cortical functioning. If true, perhaps this account would lend some support to some CRVs.151

- Coma states, in which consciousness is typically assumed to be absent, seem to be especially associated with extensive cortical damage.152

3.3.1.4 Overall thoughts on arguments for CRVs

I have not taken the time to assess the case for CRVs about consciousness. I can see how such a case could be made, if multiple lines of evidence about a variety of cognitive functions aligned with the suggestive evidence concerning the neural substrates of conscious vs. unconscious vision. On the other hand, my guess is that if I investigated these additional lines of evidence, I would find the following:

- I expect I would find that the evidence base on these other topics is less well-developed than the evidence base concerning conscious vs. unconscious vision, since vision neuroscience seems to be the most “developed” area within cognitive neuroscience.

- I expect I would find that the evidence concerning which areas of the brain subserve specifically conscious processing of each type would be unclear, and subject to considerable expert debate.

- I expect I would find that the underlying studies often suffer from the weaknesses described in Appendix Z.8.

And, as I mentioned earlier, the possibility of hidden qualia undermines the strength of any pro-CRVs argument one could make from empirical evidence about which processes are conscious vs. unconscious, since the “unconscious” processes might actually be conscious, but in a way that is not accessible to introspection.

Overall, then, my sense is that the case for CRVs about consciousness is currently weak or at least equivocal, though I can imagine how the case could turn out to be quite suggestive in the future, after much more evidence is collected in several different subdomains of neurology and cognitive neuroscience.

3.3.1.5 Arguments against cortex-required views

One influential paper, Merker (2007), pointed to several pieces of evidence that seem, to some people, to argue against CRVs. One piece of evidence is the seemingly-conscious behavior of hydranencephalic children,153 whose cerebral hemispheres are almost entirely missing and replaced by cerebrospinal fluid filling that part of the skull: