This report evaluates the likelihood of ‘explosive growth’, meaning > 30% annual growth of gross world product (GWP), occurring by 2100. Although frontier GDP/capita growth has been constant for 150 years, over the last 10,000 years GWP growth has accelerated significantly. Endogenous growth theory, together with the empirical fact of the demographic transition, can explain both trends. Labor, capital and technology were accumulable over the last 10,000 years, meaning that their stocks all increased as a result of rising output. Increasing returns to these accumulable factors accelerated GWP growth. But in the late 19th century, the demographic transition broke the causal link from output to the quantity of labor. There were not increasing returns to capital and technology alone and so growth did not accelerate; instead frontier economies settled into an equilibrium growth path defined by a balance between a growing number of researchers and diminishing returns to research.

This theory implies that explosive growth could occur by 2100. If automation proceeded sufficiently rapidly (e.g. due to progress in AI) there would be increasing returns to capital and technology alone. I assess this theory and consider counter-arguments stemming from alternative theories; expert opinion; the fact that 30% annual growth is wholly unprecedented; evidence of diminishing returns to R&D; the possibility that a few non-automated tasks bottleneck growth; and others. Ultimately, I find that explosive growth by 2100 is plausible but far from certain.

1. How to read this report

Read the summary (~1 page). Then read the main report (~30 pages).

The rest of the report contains extended appendices to the main report. Each appendix expands upon specific parts of the main report. Read an appendix if you’re interested in exploring its contents in greater depth.

I describe the contents of each appendix here. The best appendix to read is probably the first, Objections to explosive growth. Readers may also be interested to read reviews of the report.

Though the report is intended to be accessible to non-economists, readers without an economics background may prefer to read the accompanying blog post.

2. Why we are interested in explosive growth

Open Philanthropy wants to understand how far away we are from developing transformative artificial intelligence(TAI). Difficult as it is, a working timeline for TAI helps us prioritize between our cause areas, including potential risks from advanced AI.

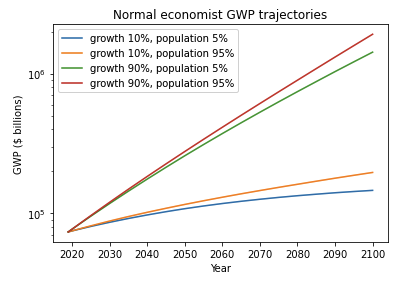

In her draft report, my colleague Ajeya Cotra uses TAI to mean ‘AI which drives Gross World Product (GWP) to grow at ~20-30% per year’ – roughly ten times faster than it is growing currently. She estimates a high probability of TAI by 2100 (~80%), and a substantial probability of TAI by 2050 (~50%). These probabilities are broadly consistent with the results from expert surveys,1 and with plausible priors for when TAI might be developed.2

Nonetheless, intuitively speaking these are high probabilities to assign to an ‘extraordinary claim’. Are there strong reasons to dismiss these estimates as too high? One possibility is economic forecasting. If economic extrapolations gave us strong reasons to think GWP will grow at ~3% a year until 2100, this would rule out explosive growth and so rule out TAI being developed this century.

I find that economic considerations don’t provide a good reason to dismiss the possibility of TAI being developed in this century. In fact, there is a plausible economic perspective from which sufficiently advanced AI systems are expected to cause explosive growth.

3. Summary

If you’re not familiar with growth economics, I recommend you start by reading this glossary or my blog post about the report.

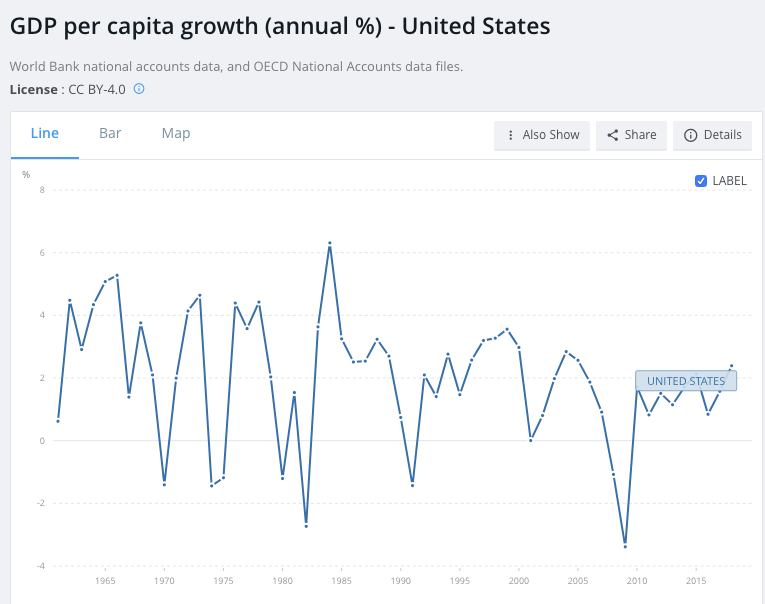

Since 1900, frontier GDP/capita has grown at about 2% annually.3 There is no sign that growth is speeding up; if anything recent data suggests that growth is slowing down. So why think that > 30% annual growth of GWP (‘explosive growth’) is plausible this century?

I identify three arguments to think that sufficiently advanced AI could drive explosive growth:

- Idea-based models of very long-run growth imply AI could drive explosive growth.

- Growth rates have significantly increased (super-exponential growth) over the past 10,000 years, and even over the past 300 years. This is true both for GWP growth, and frontier GDP/capita growth.

- Idea-based models explain increasing growth with an ideas feedback loop: more ideas → more output → more people → more ideas… Idea-based models seem to have a good fit to the long-run GWP data, and offer a plausible explanation for increasing growth.

- After the demographic transition in ~1880, more output did not lead to more people; instead people had fewer children as output increased. This broke the ideas feedback loop, and so idea-based theories expect growth to stop increasing shortly after the time. Indeed, this is what happened. Since ~1900 growth has not increased but has been roughly constant.

- Suppose we develop AI systems that can substitute very effectively for human labor in producing output and in R&D. The following ideas feedback loop could occur: more ideas → more output → more AI systems → more ideas… Before 1880, the ideas feedback loop led to super-exponential growth. So our default expectation should be that this new ideas feedback loop will again lead to super-exponential growth.

- A wide range of growth models predict explosive growth if capital can substitute for labor. Here I draw on models designed to study the recent period of exponential growth. If you alter these models with the assumption that capital can substitute very effectively for labor, e.g. due to the development of advanced AI systems, they typically predict explosive growth. The mechanism is similar to that discussed above. Capital accumulation produces a powerful feedback loop that drives faster growth: more capital → more output → more capital …. These first two arguments both reflect an insight of endogenous growth theory: increasing returns to accumulable inputs can drive accelerating growth.

- An ignorance perspective assigns some probability to explosive growth. We may not trust highly-specific models that attempt to explain why growth has increased over the long-term, or why it has been roughly constant since 1900. But we do know that the pace of growth has increased significantly over the course of history. Absent deeper understanding of the mechanics driving growth, it would be strange to rule out growth increasing again. 120 years of steady growth is not enough evidence to rule out a future increase.

I discuss a number of objections to explosive growth:

- 30% growth is very far out of the observed range.

- Models predicting explosive growth have implausible implications – like output going to infinity in finite time.

- There’s no evidence of explosive growth in any subsector of the economy.

- Limits to automation are likely to prevent explosive growth.

- Won’t diminishing marginal returns to R&D prevent explosive growth?

- And many others.

Although some of these objections are partially convincing, I ultimately conclude that explosive growth driven by advanced AI is a plausible scenario.

In addition, the report covers themes relating to the possibility of stagnating growth; I find that it is a highly plausible scenario. Exponential growth in the number of researchers has been accompanied by merely constant GDP/capita growth over the last 80 years. This trend is well explained by semi-endogenous growth models in which ideas are getting harder to find.4 As population growth slows over the century, number of researchers will likely grow more slowly; semi-endogenous growth models predict that GDP/capita growth will slow as a result.

Thus I conclude that the possibilities for long-run growth are wide open. Both explosive growth and stagnation are plausible.

Acknowledgements: My thanks to Holden Karnofsky for prompting this investigation; to Ajeya Cotra for extensive guidance and support throughout; to Ben Jones, Dietrich Vollrath, Paul Gaggl, and Chad Jones for helpful comments on the report; to Anton Korinek, Jakub Growiec, Phil Trammel, Ben Garfinkel, David Roodman, and Carl Shulman for reviewing drafts of the report in depth; to Harry Mallinson for reviewing code I wrote for this report and helpful discussion; to Joseph Carlsmith, Nick Beckstead, Alexander Berger, Peter Favaloro, Jacob Trefethen, Zachary Robinson, Luke Muehlhauser, and Luisa Rodriguez for valuable comments and suggestions; and to Eli Nathan for extensive help with citations and the website.

4. Main report

If you’re not familiar with growth economics, I recommend you start by reading this glossary or my blog post about the report.

How might we assess the plausibility of explosive growth (>30% annual GWP) occurring by 2100? First, I consider the raw empirical data; then I address a number of additional considerations.

- What do experts think (here)?

- How does economic growth theory affect the case of explosive growth (here and here)?

- How strong are the objections to explosive growth (here)?

- Conclusion (here).

4.1 Empirical data without theoretical interpretation

When looking at the raw data, two conflicting trends jump out.

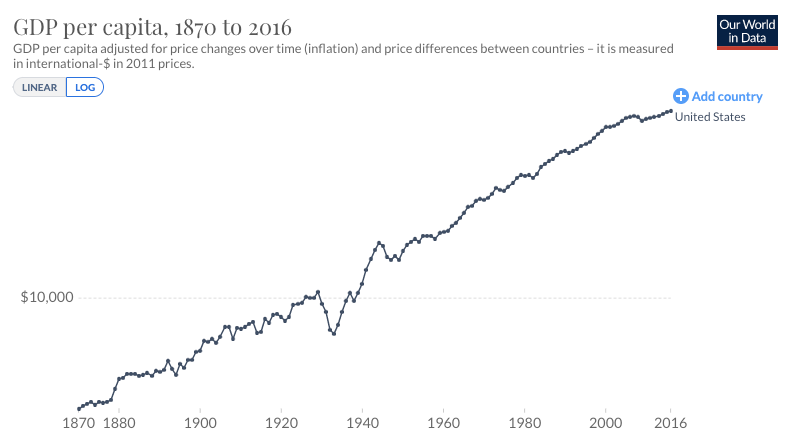

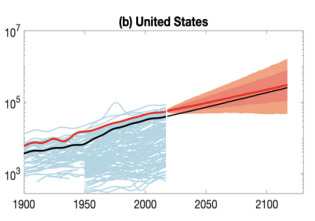

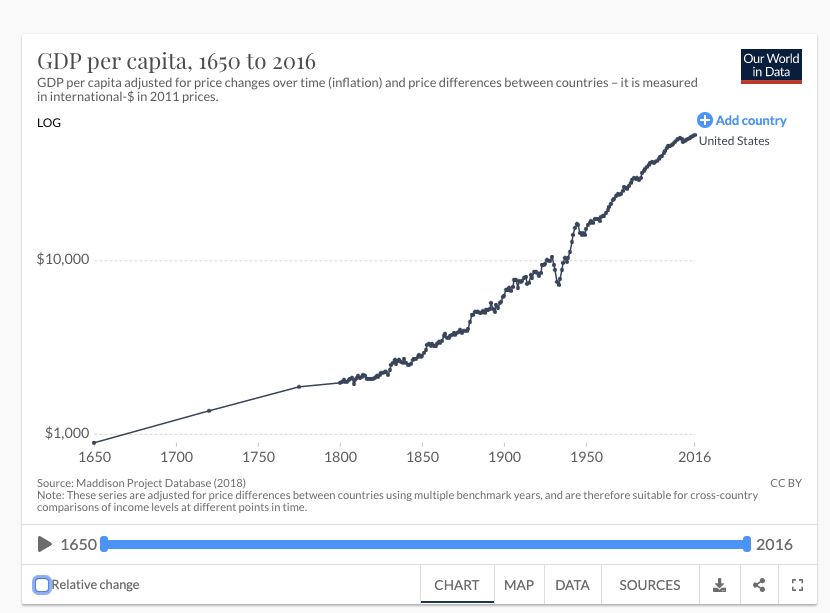

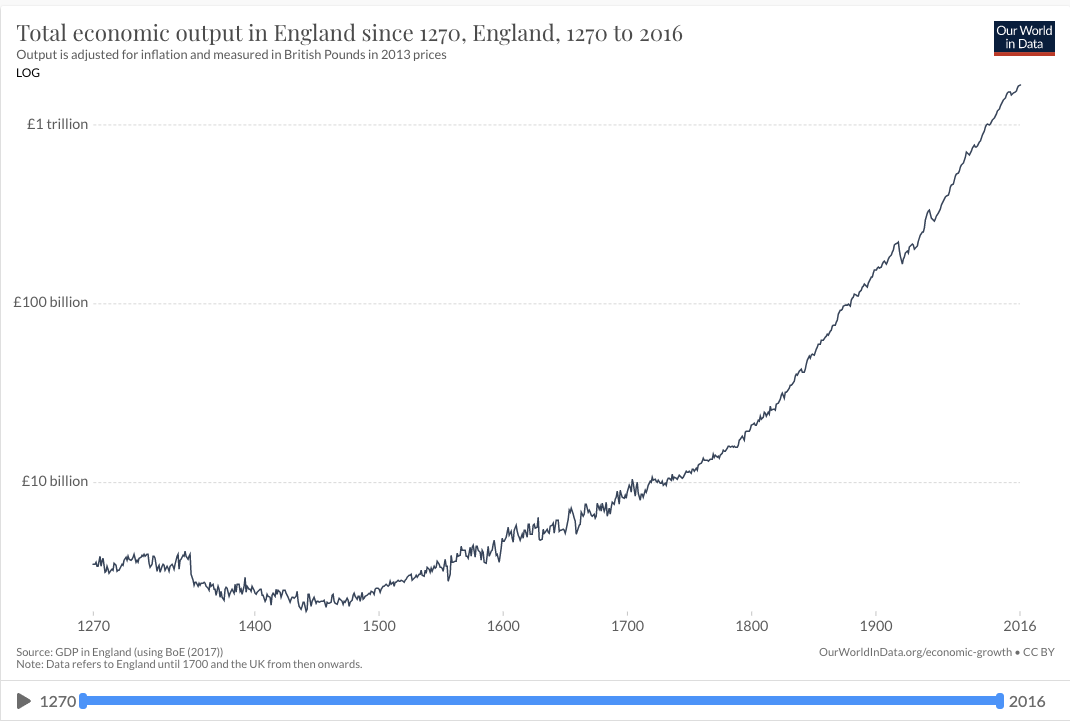

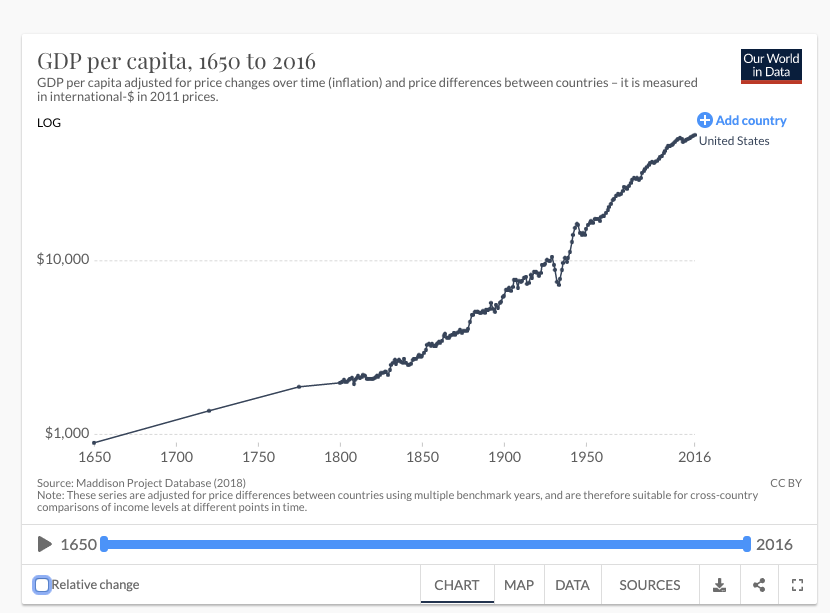

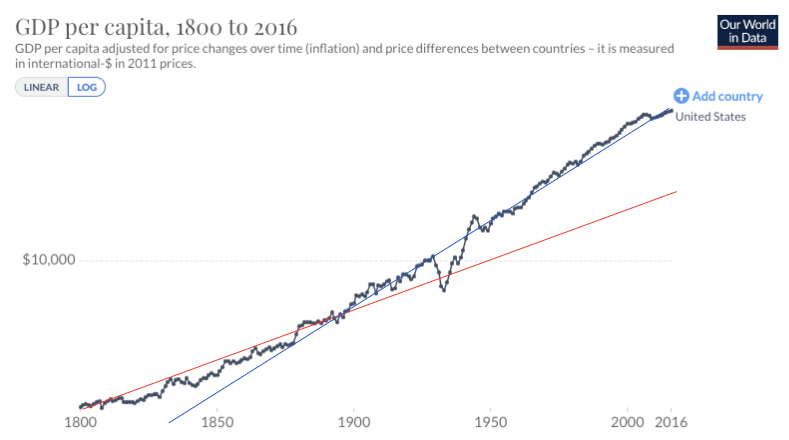

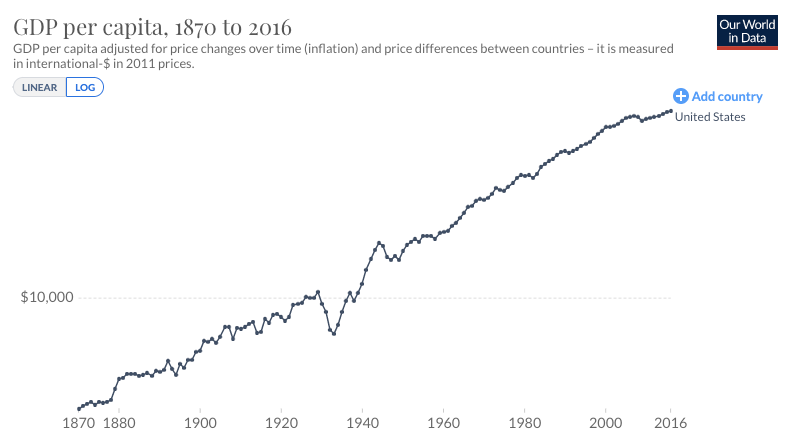

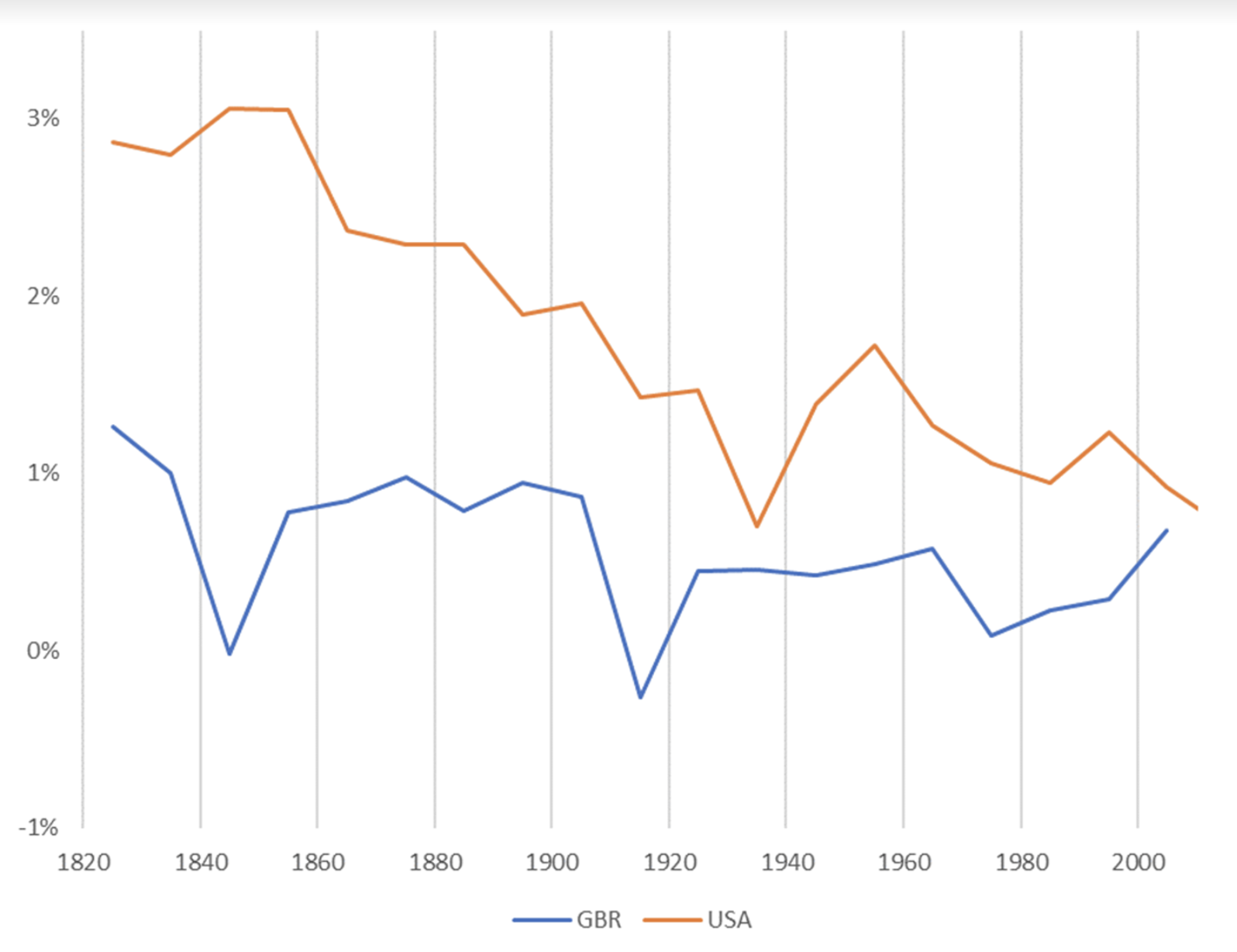

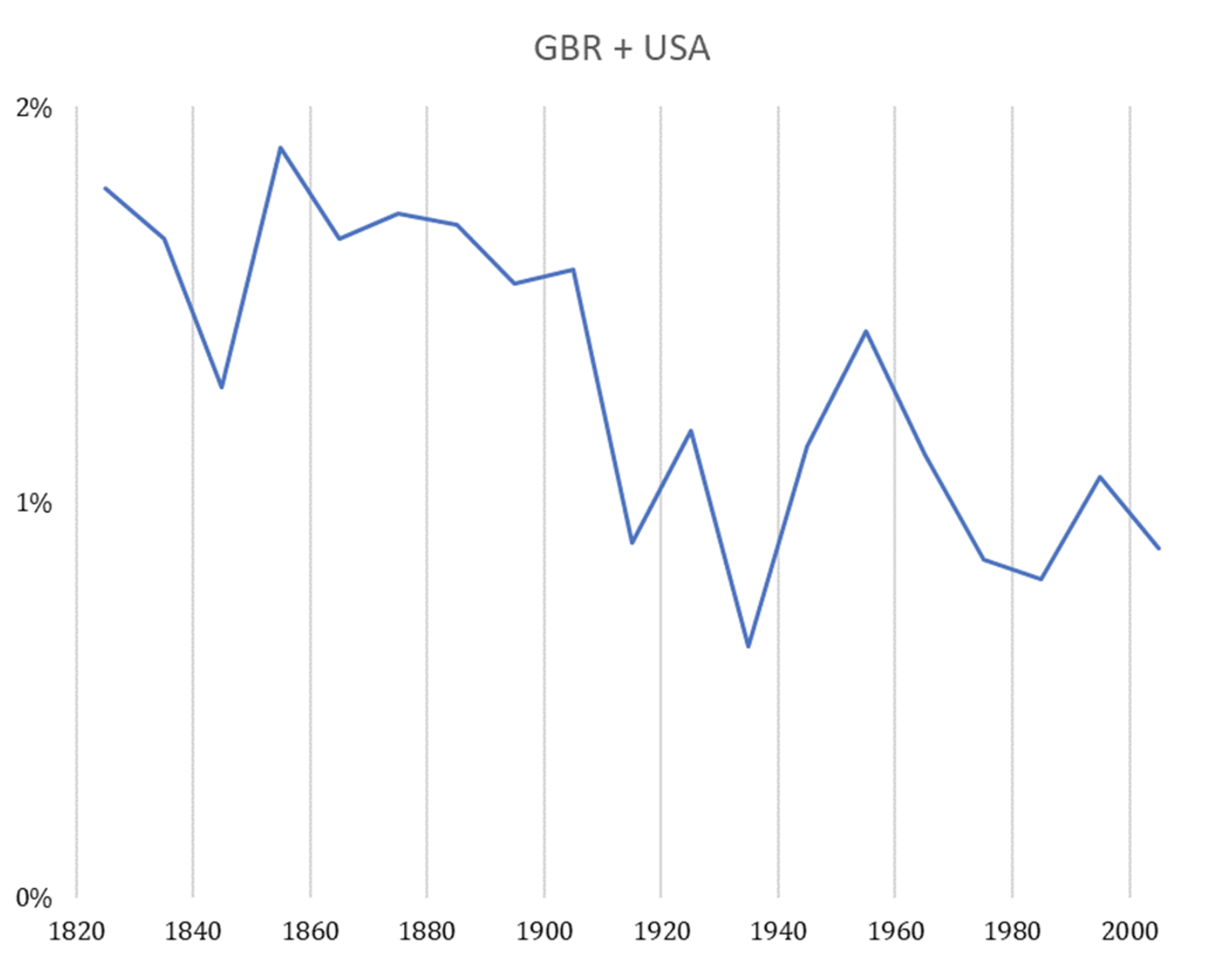

The first trend is the constancy of frontier GDP/capita growth over the last 150 years.5 The US is typically used to represent this frontier. The following graph from Our World in Data shows US GDP/capita since 1870.

The y-axis is logarithmic, so the straight line indicates that growth has happened at a constant exponential rate – ~2% per year on average.6 Extrapolating the trend, frontier GDP/capita will grow at ~2% per year until 2100. GWP growth will be slightly larger, also including a small boost from population growth and catch-up growth. Explosive growth would be a very large break from this trend.

I refer to forecasts along these lines as the standard story. Note, I intend the standard story to encompass a wide range of views, including the view that growth will slow down significantly by 2100 and the view that it will rise to (e.g.) 4% per year.

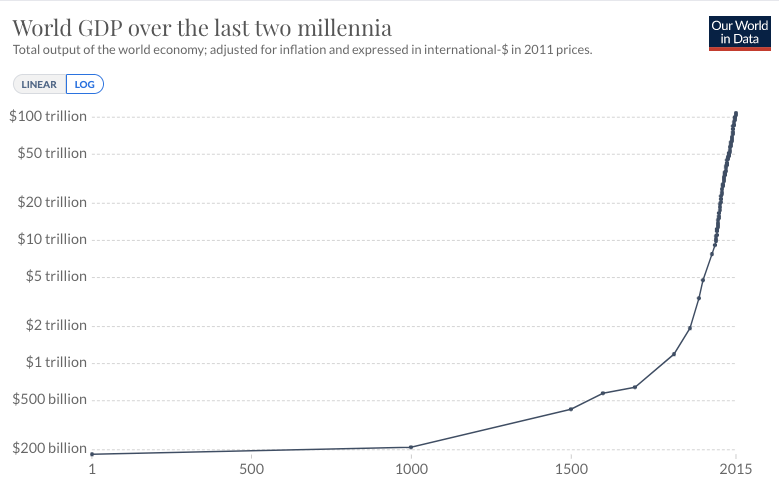

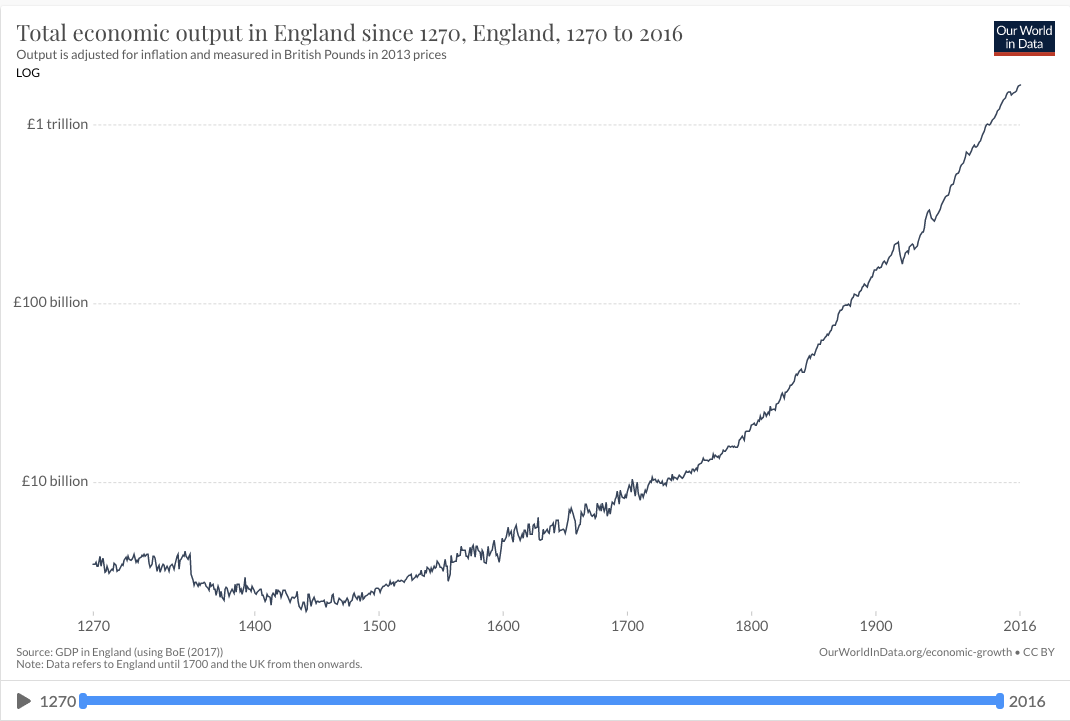

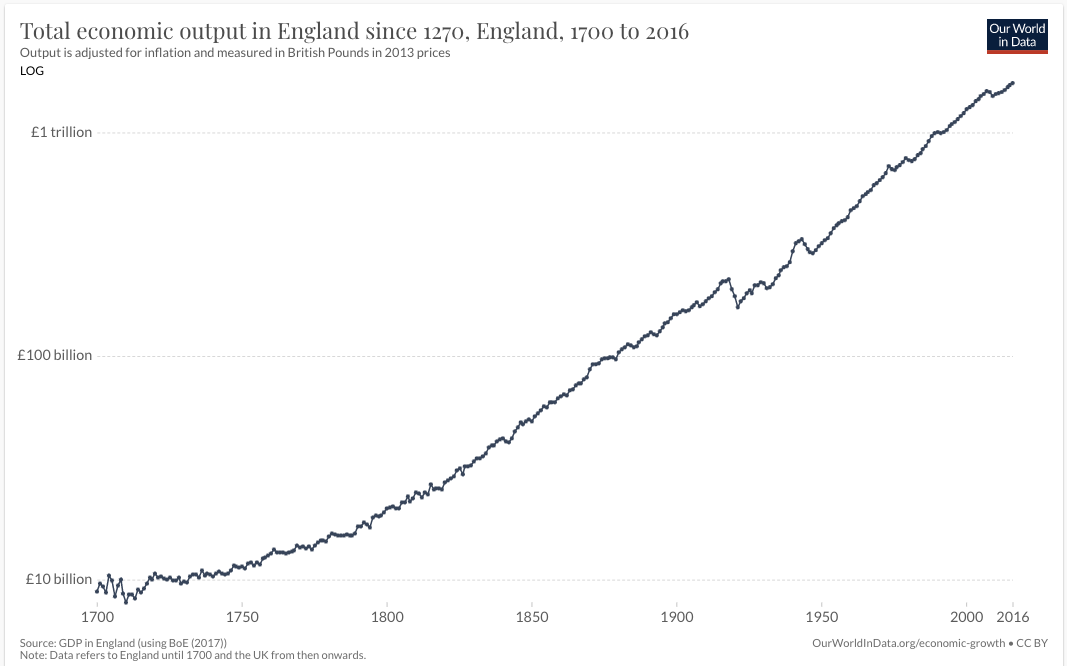

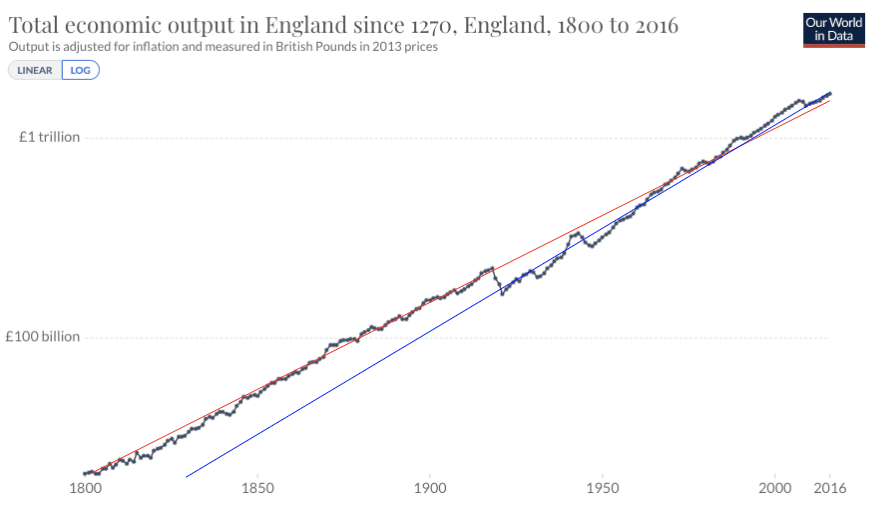

The second trend is the super-exponential growth of GWP over the last 10,000 years.7 (Super-exponential means the growth rate increases over time.) Another graph from Our World in Data shows GWP over the last 2,000 years:

Again, the y-axis is logarithmic, so the increasing steepness of the slope indicates that the growth rate has increased.

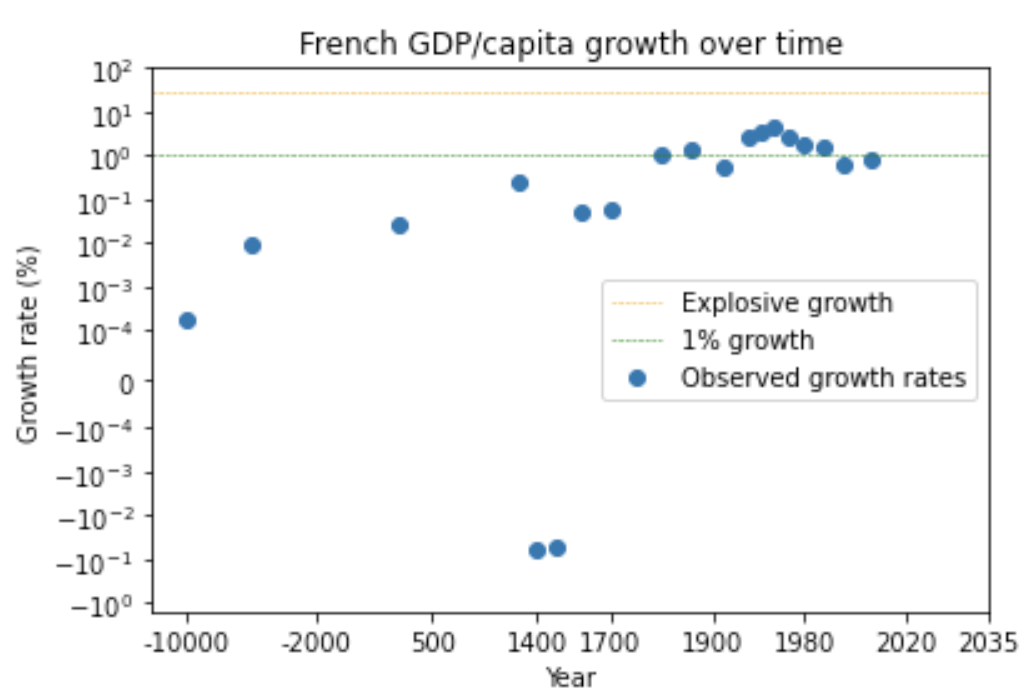

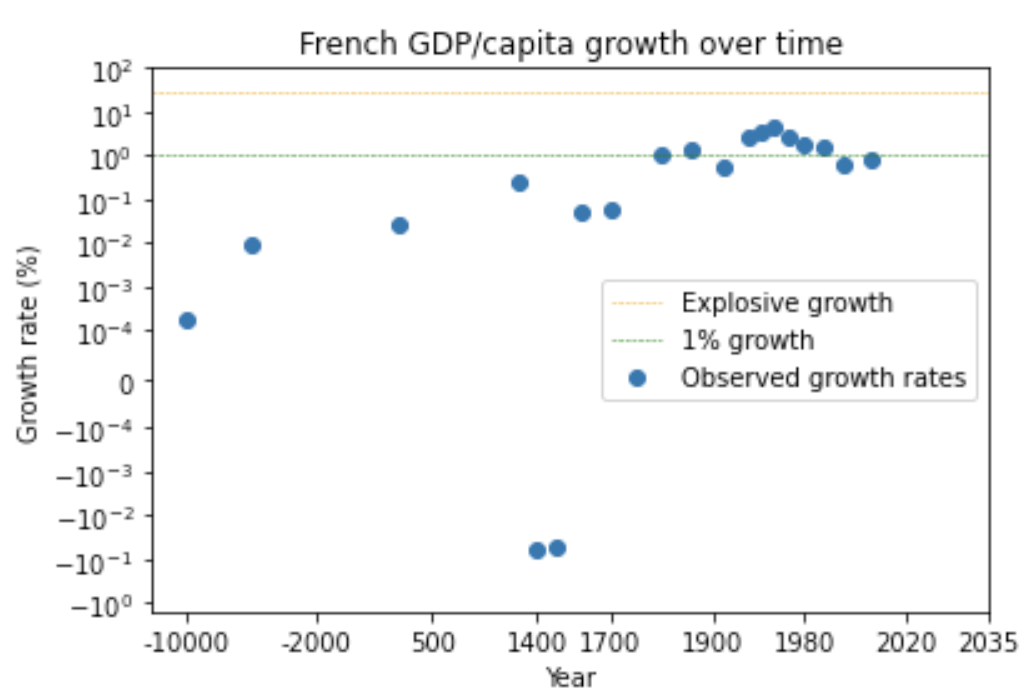

It’s not just GWP – there’s a similar super-exponential trend in long-run GDP/capita in many developed countries – see the graphs of US, English, and French GDP/capita in section 14.3.8 (Later I discuss whether we can trust these pre-modern data points.)

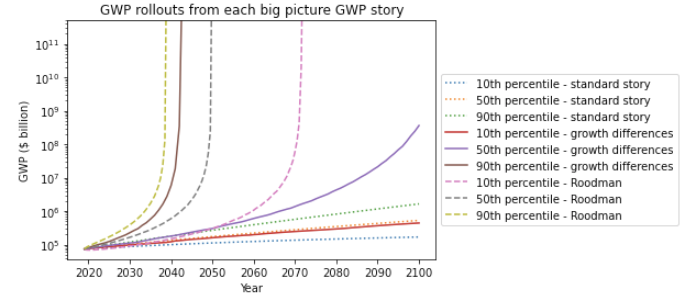

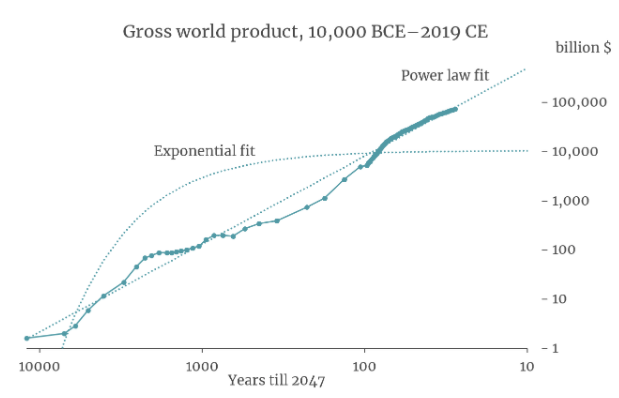

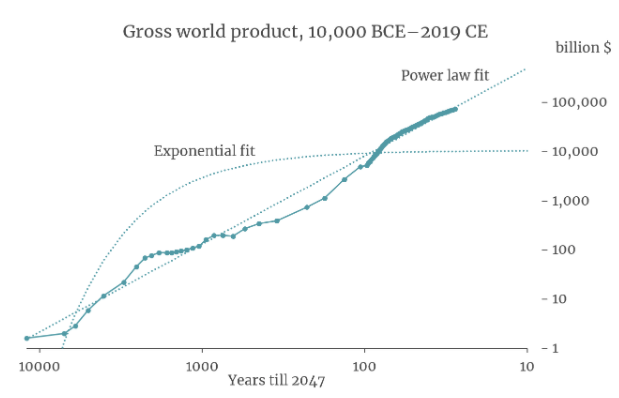

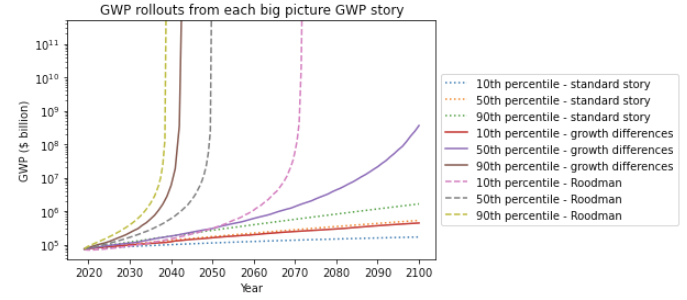

It turns out that a simple equation called a ‘power law’ is a good fit to GWP data going all the way back to 10,000 BCE. The following graph (from my colleague David Roodman) shows the fit of a power law (and of exponential growth) to the data. The axes of the graph are chosen so that the power law appears as a straight line.9

If you extrapolate this power law trend into the future, it implies that the growth rate will continue to increase into the future and that GWP will approach infinity by 2047!10

Many other simple curves fit to this data also predict explosive (>30%) growth will occur in the next few decades. Why is this? The core reason is that the data shows the growth rate increasing more and more quickly over time. It took thousands of years for growth to increase from 0.03% to 0.3%, but only a few hundred years for it to increase from 0.3% to 3%.11 If you naively extrapolate this trend, you predict that growth will increase again from 3% to 30% within a few decades.

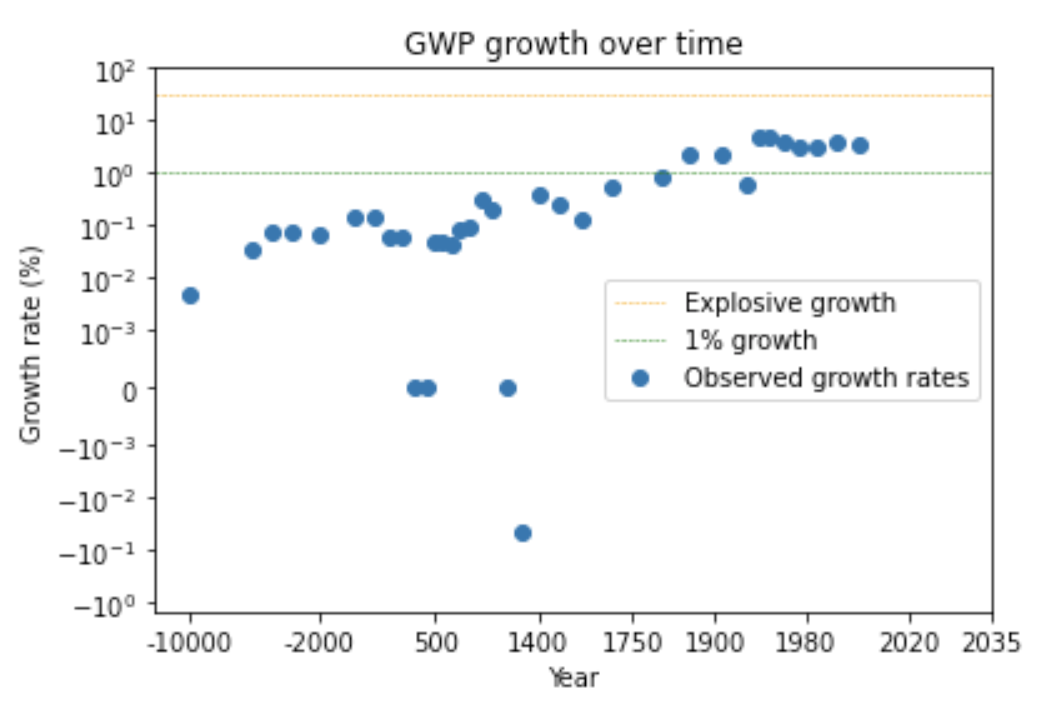

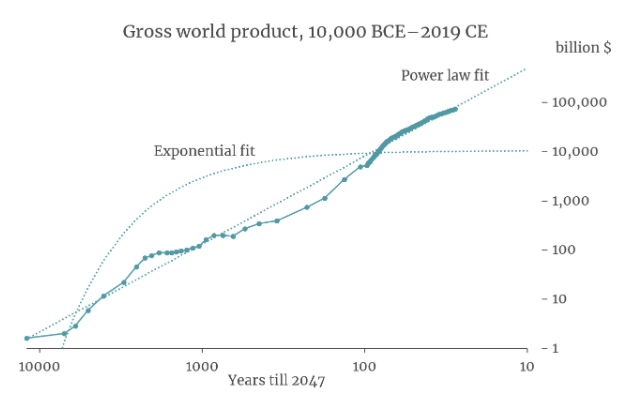

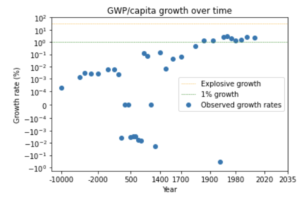

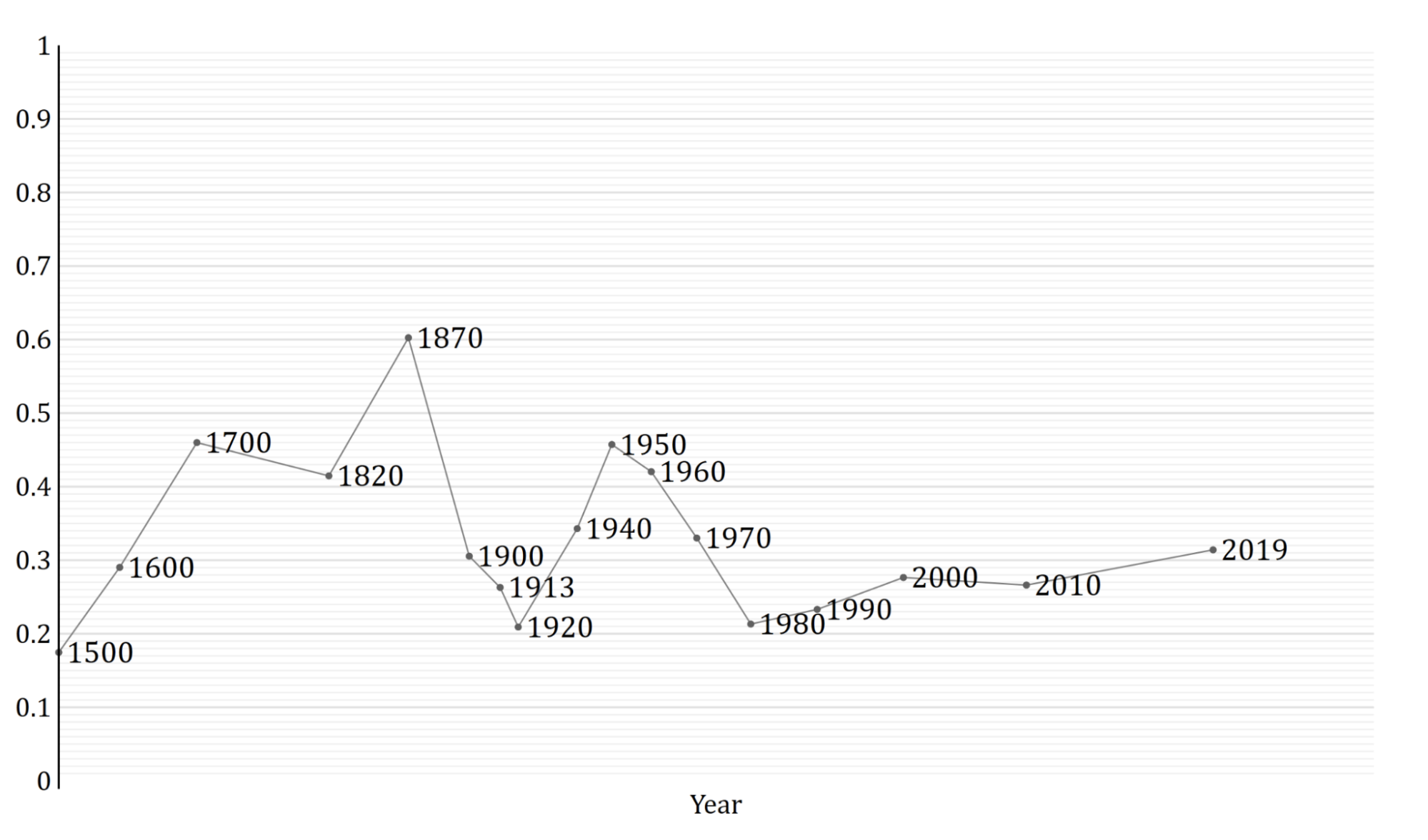

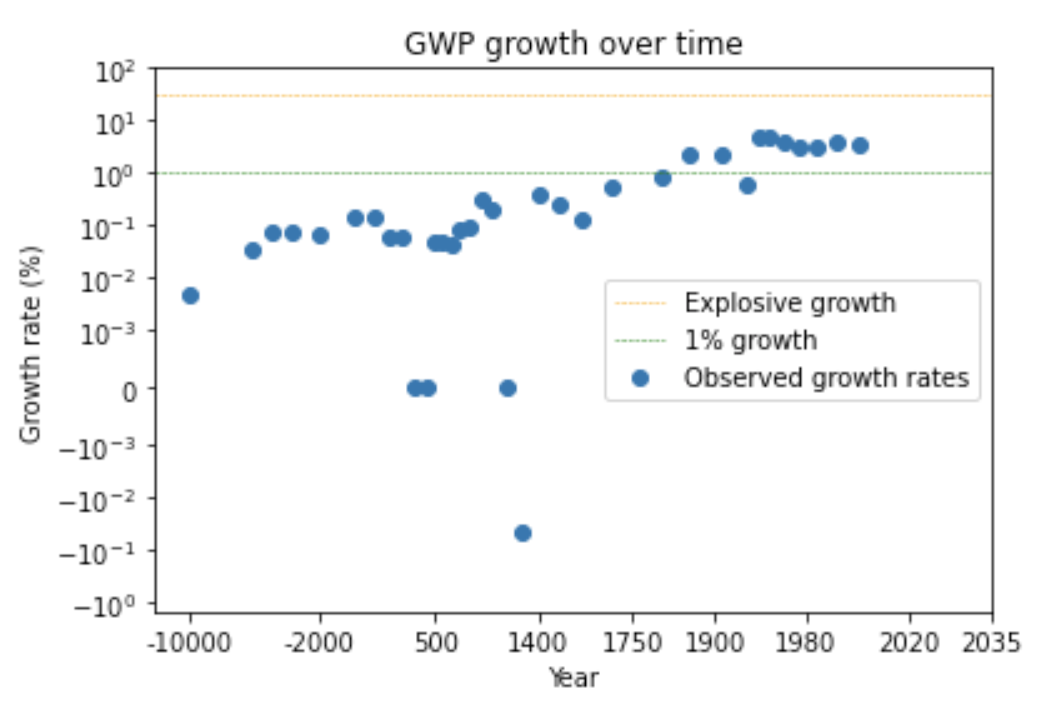

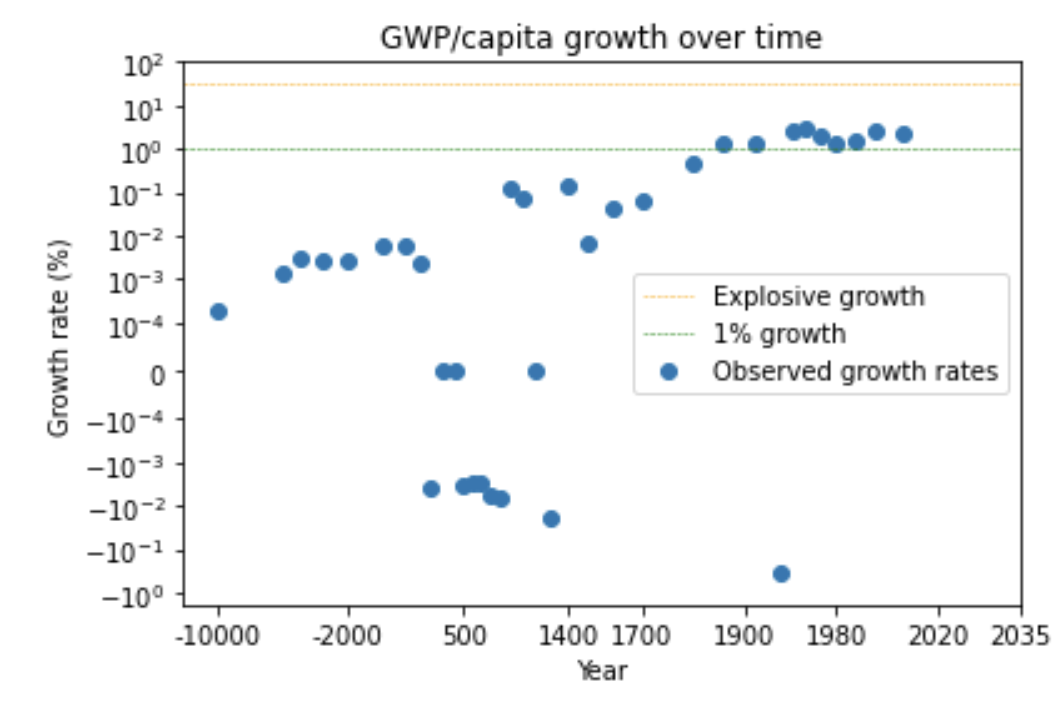

We can see this pattern more clearly by looking at a graph of how GWP growth has changed over time.12

The graph shows that the time needed for the growth rate to double has fallen over time. (Later I discuss whether this data can be trusted.) Naively extrapolating the trend, you’d predict explosive growth within a few decades.

I refer to forecasts along these lines, that predict explosive growth by 2100, as the explosive growth story.

So we have two conflicting stories. The standard story points to the steady ~2% growth in frontier GDP/capita over the last 150 years, and expects growth to follow a similar pattern out to 2100. The explosive growth story points to the super-exponential growth in GWP over the last 10,000 years and expects growth to increase further to 30% per year by 2100.

Which story should we trust? Before taking into account further considerations, I think we should put some weight on both. For predictions about the near future I would put more weight on the standard story because its data is more recent and higher quality. But for predictions over longer timescales I would place increasing weight on the explosive growth story as it draws on a longer data series.

Based on the two empirical trends alone, I would neither confidently rule out explosive growth by 2100 nor confidently expect it to happen. My attitude would be something like: ‘Historically, there have been significant increases in growth. Absent a deeper understanding of the mechanisms driving these increases, I shouldn’t rule out growth increasing again in the future.’ I call this attitude the ignorance story.13 The rest of the main report raises considerations that can move us away from this attitude (either towards the standard story or towards the explosive growth story).

4.2 Expert opinion

In the most recent and comprehensive expert survey on growth out to 2100 that I could find, all the experts assigned low probabilities to explosive growth.

All experts thought it 90% likely that the average annual GDP/capita growth out to 2100 would be below 5%.14 Strictly speaking, the survey data is compatible with experts thinking there is a 9% probability of explosive growth this century, but this seems unlikely in practice. The experts’ quantiles, both individually and in aggregate, were a good fit for normal distributions which would assign ≪ 1% probability to explosive growth.15

Experts’ mean estimate of annual GWP/capita growth was 2.1%, with standard deviation 1.1%.16 So their views support the standard story and are in tension with the explosive growth story.

There are three important caveats:

- Lack of specialization. My impression is that long-run GWP forecasts are not a major area of specialization, and that the experts surveyed weren’t experts specifically in this activity. Consonant with this, survey participants did not consider themselves to be particularly expert, self-reporting their level of expertise as 6 out of 10 on average.17

- Lack of appropriate prompts. Experts were provided with the data about the growth rates for the period 1900-2000, and primed with a ‘warm up question’ about the recent growth of US GDP/capita. But no information was provided about the longer-run super-exponential trend, or about possible mechanisms for producing explosive growth (like advanced AI). The respondents may have assigned higher probabilities to explosive growth by 2100 if they’d been presented with this information.

- No focus on tail outcomes. Experts were not asked explicitly about explosive growth, and were not given an opportunity to comment on outcomes they thought were < 10% likely to occur.

4.3 Theoretical models used to extrapolate GWP out to 2100

Perhaps economic growth theory can shed light on whether to extrapolate the exponential trend (standard story) or the super-exponential trend (explosive growth story).

In this section I ask:

- Do the growth models of the standard story give us reason beyond the empirical data to think 21st century growth will be exponential or sub-exponential?

- They could do this if they point to a mechanism explaining recent exponential growth, and this mechanism will continue to operate in the future.

- Do the growth models of the explosive growth story give us reason beyond the empirical data to think 21st century growth will be super-exponential?

- They could do this if they point to a mechanism explaining the long-run super-exponential growth, and this mechanism will continue to operate in the future.

My starting point is the models actually used to extrapolate GWP to 2100, although I draw upon economic growth theory more widely in making my final assessment. First, I give a brief explanation of how growth models work.

4.3.1 How do growth models work?

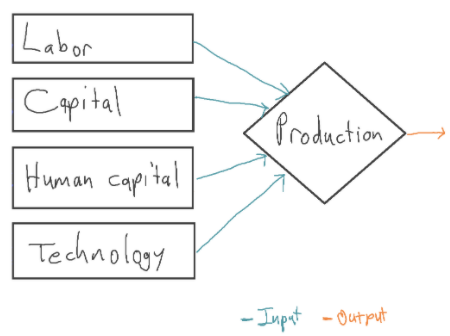

In economic growth models, a number of inputs are combined to produce output. Output is interpreted as GDP (or GWP). Typical inputs include capital (e.g. equipment, factories), labor (human workers), human capital (e.g. skills, work experience), and the current level of technology.18

Some of these inputs are endogenous,19 meaning that the model explains how the input changes over time. Capital is typically endogenous; output is invested to sustain or increase the amount of capital.20 In the following diagram, capital and human capital are endogenous:

Other inputs may be exogenous. This means their values are determined using methods external to the growth model. For example, you might make labor exogenous and choose its future values using UN population projections. The growth model does not (attempt to) explain how the exogenous inputs change over time.

When a growth model makes more inputs endogenous, it models more of the world. It becomes more ambitious, and so more debatable, but it also gains the potential to have greater explanatory power.

4.3.2 Growth models extrapolating the exponential trend to 2100

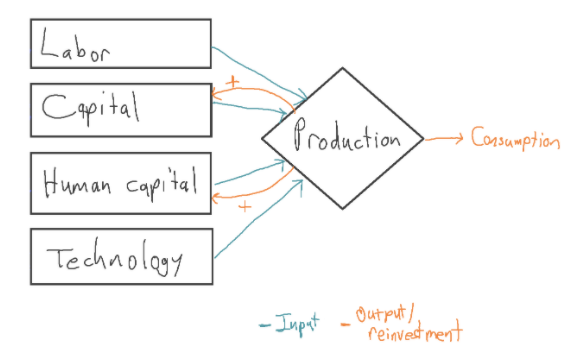

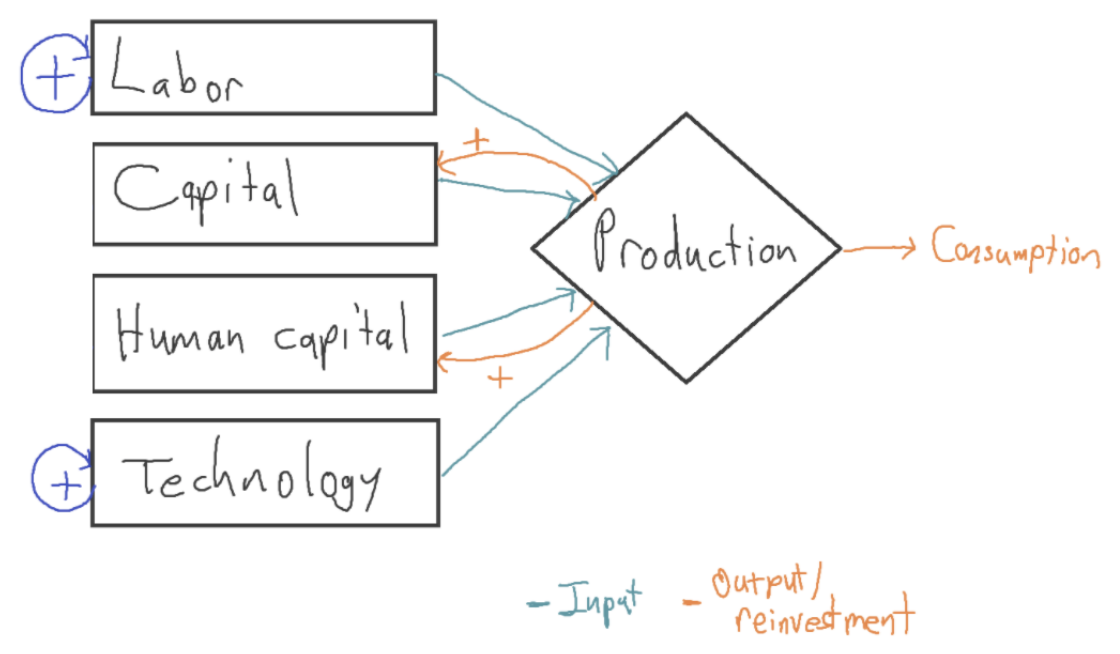

I looked at a number of papers in line with the standard story that extrapolate GWP out to 2100. Most of them treated technology as exogenous, typically assuming that technology will advance at a constant exponential rate.21In addition, they all treated labor as exogenous, often using UN projections. These growth models can be represented as follows:

The blue ‘+’ signs represent that the increases to labor and technology each year are exogenous, determined outside of the model.

In these models, the positive feedback loop between output and capital is not strong enough to produce sustained growth. This is due to diminishing marginal returns to capital. This means that each new machine adds less and less value to the economy, holding the other inputs fixed.22 Even the feedback loop between output and (capital + human capital) is not strong enough to sustain growth in these models, again due to diminishing returns.

Instead, long-run growth is driven by the growth of the exogenous inputs, labor and technology. For this reason, these models are called exogenous growth models: the ultimate source of growth lies outside of the model. (This is contrasted with endogenous growth models, which try to explain the ultimate source of growth.)

It turns out that long run growth of GDP/capita is determined solely by the growth of technology.23These models do not (try to) explain the pattern of technology growth, and so they don’t ultimately explain the pattern of GDP/capita growth.

4.3.2.1 Evaluating models extrapolating the exponential trend

The key question of this section is: Do the growth models of the standard story give us reason beyond the empirical data to think 21st century growth of frontier GDP/capita will be exponential or sub-exponential?

My answer is ‘yes’. Although the exogenous models used to extrapolate GWP to 2100 don’t ultimately explain why GDP/capita has grown exponentially, there are endogenous growth models that address this issue. Plausible endogenous models explain this pattern and imply that 21st century growth will be sub-exponential. This is consistent with the standard story. Interestingly, I wasn’t convinced by models implying that 21st century growth will be exponential.

The rest of this section explains my reasoning in more detail.

Endogenous growth theorists have for many decades sought theories where long-run growth is robustly exponential. However, they have found it strikingly difficult. In endogenous growth models, long-run growth is typically only exponential if some knife-edge condition holds. A parameter of the model must be exactly equal to some specific value; the smallest disturbance in this parameter leads to completely different long-run behavior, with growth either approaching infinity or falling to 0. Further, these knife-edges are typically problematic: there’s no particular reason to expect the parameter to have the precise value needed for exponential growth. This problem is often called the ‘linearity critique’ of endogenous growth models.

Appendix B argues that many endogenous growth models contain problematic knife-edges, drawing on discussions in Jones (1999), Jones (2005), Cesaratto (2008), and Bond-Smith (2019).

Growiec (2007) proves that a wide class of endogenous growth models require a knife-edge condition to achieve constant exponential growth, generalizing the proof of Christiaans (2004). The proof doesn’t show that all such conditions are problematic, as there could be mechanisms explaining why knife-edges hold. However, combined with the observation that many popular models contain problematic knife-edges, the proof suggests that it may be generically difficult to explain exponential growth without invoking problematic knife-edge conditions.

Two attempts to address this problem stand out:

- Claim that exponential population growth has driven exponential GDP/capita growth. This is an implication of semi-endogenous growth models (Jones 1995). These models are consistent with 20th century data: exponentially growing R&D effort has been accompanied by exponential GDP/capita growth. Appendix B argues that semi-endogenous growth models offer the best framework for explaining the recent period of exponential growth.24However, I do not think their ‘knife-edge’ assumption that population will grow at a constant exponential rate is likely to be accurate until 2100. In fact, the UN projects that population growth will slow significantly over the 21st century. With this projection, semi-endogenous growth models imply that GDP/capita growth will slow.25 So these models imply 21st century growth will be sub-exponential rather than exponential.26

- Claim that market equilibrium leads to exponential growth without knife-edge conditions.

- In a 2020 paper Robust Endogenous Growth, Peretto outlines a fully endogenous growth model that achieves constant exponential growth of GDP/capita without knife-edge conditions. The model displays increasing returns to R&D investment, which would normally lead to super-exponential growth. However, these increasing returns are ‘soaked up’ by the creation of new firms which dilute R&D investment. Market incentives ensure that new firms are created at exactly the rate needed to sustain exponential growth.

- The model seems to have some implausible implications. Firstly, it implies that there should be a huge amount of market fragmentation, with the number of firms growing more quickly than the population. This contrasts with the striking pattern of market concentration we see in many areas.27 Secondly, it implies that if no new firms were introduced – e.g. because this was made illegal – then output would reach infinity in finite time. This seems to imply that there is a huge market failure: private incentives to create new firms massively reduce long-run social welfare.

- Despite these problems, the model does raise the possibility that an apparent knife-edge holds in reality due to certain equilibrating pressures. Even if this model isn’t quite right, there may still be equilibrating pressures of some sort.28

- Overall, this model slightly raises my expectation that long-run growth will be exponential.29

This research shifted my beliefs in a few ways:

- I put more probability (~75%) on semi-endogenous growth models explaining the recent period of exponential growth.30

- So I put more weight on 21st century growth being sub-exponential.

- We’ll see later that these models imply that sufficiently advanced AI could drive explosive growth. So I put more weight on this possibility as well.

- It was harder than I expected to for growth theories to adequately explain why income growth should be exponential in a steady state (rather than sub- or super-exponential). So I put more probability on the recent period of exponential growth being transitory, rather than part of a steady state.

- For example, the recent period could be a transition between past super-exponential growth and future sub-exponential growth, or a temporary break in a longer pattern of super-exponential growth.

- This widens the range of future trajectories that I regard as being plausible.

4.3.3 Growth models extrapolating the super-exponential trend

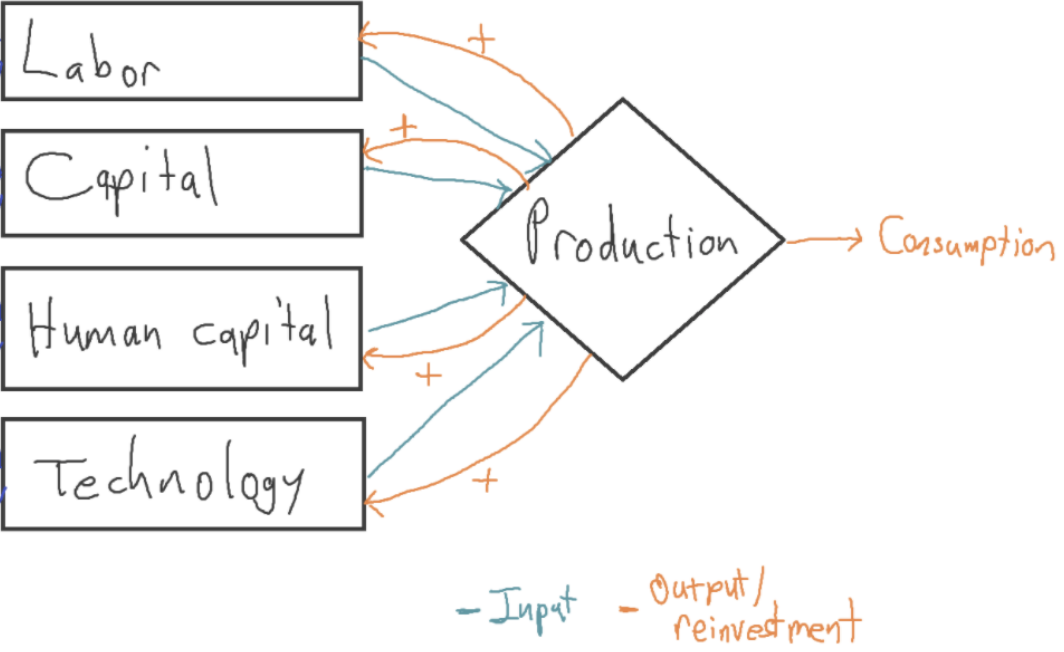

Some growth models extrapolate the long-run super-exponential trend to predict explosive growth in the future.31 Let’s call them long-run explosive models. The ones I’m aware of are ‘fully endogenous’, meaning all inputs are endogenous.32

Crucially, long-run explosive models claim that more output → more people. This makes sense (for example) when food is scarce: more output means more food, allowing the population to grow. This assumption is important, so it deserves a name. Let’s say these models make population accumulable. More generally, an input is accumulable just if more output → more input.33

The term ‘accumulable’ is from the growth literature; the intuition behind it is that the input can be accumulated by increasing output.

It’s significant for an input to be accumulable as it allows a feedback loop to occur: more output → more input → more output →… Population being accumulable is the most distinctive feature of long-run explosive models.

Long-run explosive models also make technology accumulable: more output → more people → more ideas (technological progress).

All growth models, even exogenous ones, imply that capital is accumulable: more output → more reinvestment → more capital.34 In this sense, long-run explosive models are a natural extension of the exogenous growth models discussed above: a similar mechanism typically used to explain capital accumulation is used to explain the accumulation of technology and labor.

We can roughly represent long-run explosive models as follows:35

The orange arrows show that all the inputs are accumulable: a marginal increase in output leads to an increase in the input. Fully endogenous growth models like these attempt to model more of the world than exogenous growth models, and so are more ambitious and debatable; but they potentially have greater explanatory power.

Why do these models predict super-exponential growth? The intuitive reason is that, with so many accumulable inputs, the feedback loop between the inputs and output is powerful enough that growth becomes faster and faster over time.

More precisely, the key is increasing returns to scale in accumulable inputs: when we double the level of every accumulable input, output more than doubles.36

Why are there increasing returns to scale? The key is the insight, from Romer (1990), that technology is non-rival. If you use a new solar panel design in your factory, that doesn’t prevent me from using that same design in my factory; whereas if you use a particular machine/worker, that does prevent me from using that same machine/worker.

Imagine doubling the quantity of labor and capital, holding technology fixed. You could literally replicate every factory and worker inside it, and make everything you currently make a second time. Output would double. Crucially, you wouldn’t need to double the level of technology because ideas are non-rival: twice as many factories could use the same stock of ideas without them ‘running out’.

Now imagine also doubling the level of technology. We’d still have twice as many factories and twice as many workers, but now each factory would now be more productive. Output would more than double. This is increasing returns to scale: double the inputs, more than double the output.37

Long-run explosive models assume that capital, labor and technology are all accumulable. Even if they include a fixed input like land, there are typically increasing returns to accumulable inputs. This leads to super-exponential growth as long unless the diminishing returns to technology R&D are very steep.38 For a wide of plausible parameter values, these models predict super-exponential growth.39

The key feedback loop driving increasing returns and super-exponential growth in these models can be summarized as more ideas (technological progress) → more output → more people → more ideas→…

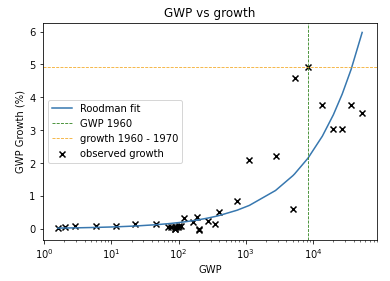

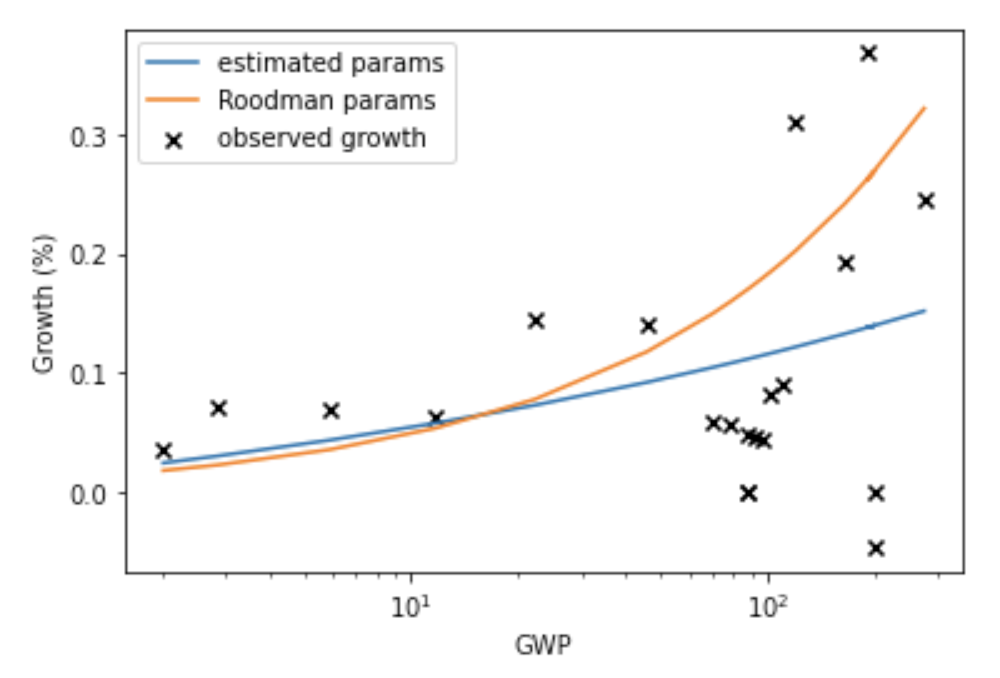

These models seem to be a good fit to the long-run GWP data. The model in Roodman (2020) implies that GWP follows a ‘power-law’, which seems to fit the data well.

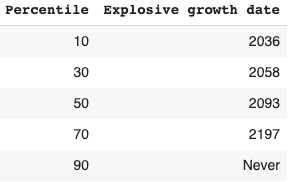

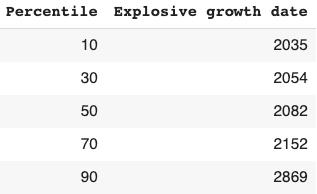

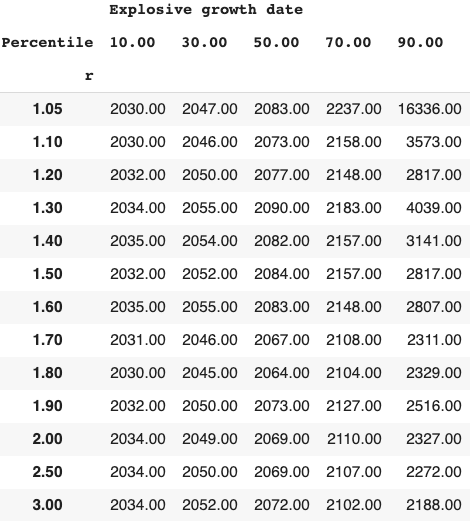

Long-run explosive models fitted to the long-run GWP data typically predict that explosive growth (>30% per year) is a few decades away. For example, you can ask the model in Roodman (2020) ‘When will be the first year of explosive growth?’ Its median prediction is 2043 and the 80% confidence range is [2034, 2065].

4.3.3.1 Evaluating models extrapolating the super-exponential trend

The key question of this section is: Do the growth models of the explosive growth story give us reason to think 21st century growth will be super-exponential? My answer in this section is ‘no’, because the models are not well suited to describing post-1900 growth. In addition, it’s unclear how much we should trust their description of pre-1900 growth. (However, the next section argues these models can be trusted if we develop sufficiently powerful AI systems.)

4.3.3.1.1.Problem 1: Long-run explosive models are not suitable for describing post-1900 growth

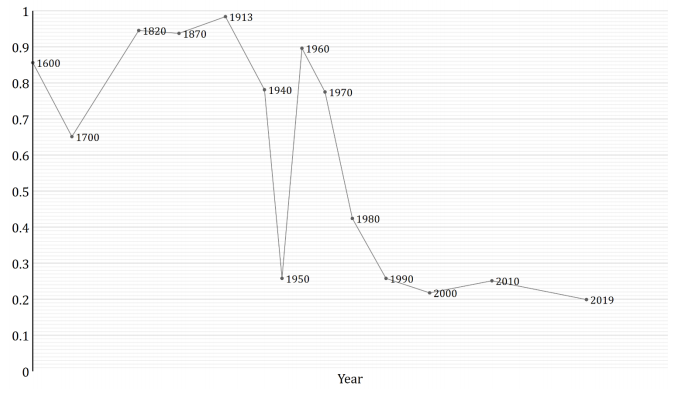

The central problem is that long-run explosive models assume population is accumulable.40 While it is plausible than in pre-modern times more output → more people, this hasn’t been true in developed countries over the last ~140 years. In particular, since ~1880 fertility rates have declined despite increasing GDP/capita.41 This is known as the demographic transition. Since then, more output has not led to more people, but to richer and better educated people: more output → more richer people. Population is no longer accumulable (in the sense that I’ve defined the term).42 The feedback loop driving super-exponential growth is broken: more ideas → more output → more richer people → more ideas.

How would this problem affect the models’ predictions? If population is not accumulable, then the returns to accumulable inputs are lower, and so growth is slower. We’d expect long-run explosive models to predict faster growth than we in fact observe after ~1880; in addition we wouldn’t expect to see super-exponential growth after ~1880.43

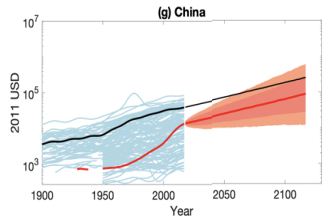

Indeed, this is what the data shows. Long-run explosive models are surprised at how slow GWP growth is since 1960 (more), and surprised at how slow frontier GDP/capita growth is since 1900 (more). It is not surprising that a structural change means a growth model is no longer predictively accurate: growth models are typically designed to work in bounded contexts, rather than being universal theories of growth.

A natural hypothesis is that the reason why long-run explosive models are a poor fit to the post-1900 data is that they make an assumption about population that has been inaccurate since ~1880. The recent data is not evidence against long-run explosive models per se, but confirmation that their predictions can only be trusted when population is accumulable.

This explanation is consistent with some prominent idea-based theories of very long-run growth.44 These theories use the same mechanism as long-run explosive models to explain pre-1900 super-exponential growth: labor and technology are accumulable, so there are increasing returns to accumulable inputs,45 so there’s super-exponential growth. They feature the same ideas feedback loop: more ideas → more output → more people → more ideas→…46

These idea-based theories are made consistent with recent exponential growth by adding an additional mechanism that makes the fertility rate drop once the economy reaches a mature stage of development,47 mimicking the effect of the demographic transition. After this point, population isn’t accumulable and the models predict exponential growth by approximating some standard endogenous or semi-endogenous model.48

These idea-based models provide a good explanation of very long-run growth and modern growth. They increase my confidence in the main claim of this section: long-run explosive models are a poor fit to the post-1900 data because they (unrealistically) assume population is accumulable. However, idea-based models are fairly complex and were designed to explain long-run patterns in GDP/capita and population; this should make us wary to trust them too much.49

4.3.3.1.2 Problem 2: It is unclear how much we should trust long-run explosive models’ explanation of pre-1900 growth

None of the problems discussed above dispute the explosive growth story’s explanation of pre-1900 growth. How much weight should we put on its account?

It emphasizes the non-rivalry of ideas and the mechanism of increasing returns to accumulable factors. This mechanism implies growth increased fairly smoothly over hundreds and thousands of years.50 We saw that the increasing-returns mechanism plays a central role in several prominent models of long-run growth.51

However, most papers on very long run growth emphasize a different explanation, where a structural transition occurs around the industrial revolution.52 Rather than a smooth increase, this suggests a single step-change in growth occurred around the industrial revolution, without growth increasing before or after the step-change.53

Though a ‘step-change’ view of long-run growth rates will have a lesser tendency to predict explosive growth by 2100, it would not rule it out. For this, you would have to explain why step change increases have occurred in the past, but no more will occur in the future.54

How much weight should we place in the increasing-returns mechanism versus the step-change view? The ancient data points are highly uncertain, making it difficult to adjudicate empirically.55 Though GWP growth seems to have increased across the whole period 1500 – 1900, this is compatible with there being one slow step-change.56

There is some informative evidence:

- Kremer (1993) gives evidence for the increasing-returns mechanism. He looks at the development of 5 isolated regions and finds that the technology levels of the regions in 1500 are perfectly rank-correlated with their initial populations in 10,000 BCE. This is just what the increasing returns mechanism would predict.57

- Roodman (2020) gives evidence for the step-change view. Roodman finds that his own model, which uses the increasing-returns mechanism, is surprised by the speed of growth around the industrial revolution (see more).

Overall, I think it’s likely that the increasing-returns mechanism plays an important role in explaining very long-run growth. As such I think we should take long-run explosive models seriously (if population is accumulable). That said, they are not the whole story; important structural changes happened around the industrial revolution.58

4.3.4 Summary of theoretical models used to extrapolate GWP out to 2100

I repeat the questions asked at the start of this section, now with their answers:

- Do the growth models of the standard story give us reason beyond the empirical data to think 21st century growth will be exponential or sub-exponential?

- Yes, plausible models imply that growth will be sub-exponential. Interestingly, I didn’t find convincing reasons to expect exponential growth.

- Do the growth models of the explosive growth story give us reason beyond the empirical data to think 21st century growth will be super-exponential?

- No, long-run explosive models assume population is accumulable, which isn’t accurate after ~1880.

- However, the next section argues that advanced AI could make this assumption accurate once more. So I think these models do give us reason to expect explosive growth if sufficiently advanced AI is developed.

| STANDARD STORY | EXPLOSIVE GROWTH STORY | |

| Preferred data set | Frontier GDP/capita since 1900 | GWP since 10,000 BCE |

| Predicted shape of long-run growth | Exponential or sub-exponential | Super-exponential (for a while, and then eventually sub-exponential) |

| Models used to extrapolate GWP to 2100 | Exogenous growth models | Endogenous growth model, where population and technology are accumulable. |

| Evaluation | Semi-endogenous growth models are plausible and predict 21st century growth will be sub-exponential. Theories predicting exponential growth rely on problematic knife-edge conditions. | Population is no longer accumulable, so we should not trust these models by default. However, advanced AI systems could make this assumption realistic again, in which case the prediction of super-exponential can be trusted. |

4.4 Advanced AI could drive explosive growth

It is possible that significant advances in AI could allow capital to much more effectively substitute for labor.59 Capital is accumulable, so this could lead to increasing returns to accumulable inputs, and so to super-exponential growth.60 I’ll illustrate this point from two complementary perspectives.

4.4.1 AI robots as a form of labor

First, consider a toy scenario in which Google announces tomorrow that it’s developed AI robots that can perform anytask that a human laborer can do for a smaller cost. In this (extreme!) fiction, AI robots can perfectly substitute for all human labor. We can write (total labor) = (human labor) + (AI labor). We can invest output to build more AI robots,61 and so increase the labor supply: more output → more labor (AI robots). In other words, labor is accumulable again. When this last happened there was super-exponential growth, so our default expectation should be that this scenario will lead to super-exponential growth.

To look at it another way, AI robots would reverse the effect of the demographic transition. Before that transition, the following feedback loop drove increasing returns to accumulable inputs and super-exponential growth:

More ideas → more output → more labor (people) → more ideas →…

With AI robots there would be a closely analogous feedback loop:

More ideas → more output → more labor (AI robots) → more ideas →…

| PERIOD | FEEDBACK LOOP? | IS TOTAL LABOR ACCUMULABLE? | PATTERN OF GROWTH |

| Pre-1880 | Yes: More ideas → more output → more people → more ideas →… | Yes | GWP grows at an increasing rate. |

| 1880 – present | No: More ideas → more output → more richer people → more ideas →… | No | GWP grows at a ~constant rate. |

| AI robot scenario | Yes: More ideas → more output → more AI systems → more ideas →… | Yes | GWP grows at an increasing rate. |

Indeed, plugging the AI robot scenario into a wide variety of growth models, including exogenous growth models, you find that increased returns to accumulable inputs drives super-exponential growth for plausible parameter values.62

This first perspective, analysing advanced AI as a form of labor, emphasizes the similarity of pre-1900 growth dynamics to those of a possible future world with advanced AI. If you think that the increasing-returns mechanism increased growth in the past, it’s natural to think that the AI robot scenario would increase growth again.63

4.4.2 AI as a form of capital

There are currently diminishing returns to accumulating more capital, holding the amount of labor fixed. For example, imagine creating more and more high-quality laptops and distributing them around the world. At first, economic output would plausibly increase as the laptops made people more productive at work. But eventually additional laptops would make no difference as there’d be no one to use them. The feedback loop ‘more output → more capital → more output →…’ peters out.

Advances in AI could potentially change this. By automating wide-ranging cognitive tasks, they could allow capital to substitute more effectively for labor. As a result, there may no longer be diminishing returns to capital accumulation. AI systems could replace both the laptops and the human workers, allowing capital accumulation to drive faster growth.64

Economic growth models used to explain growth since 1900 back up this point. In particular, if you adjust these models by assuming that capital substitutes more effectively for labor, they predict increases in growth.

The basic story is: capital substitutes more effectively for labor → capital’s share of output increases → larger returns to accumulable inputs → faster growth. In essence, the feedback loop ‘more output → more capital → more output → …’ becomes more powerful and drives faster growth.

What level of AI is required for explosive (>30%) growth in these models? The answer varies depending on the particular model:65

- Often the crucial condition is that the elasticity of substitution between capital and labor rises above 1. This means that some (perhaps very large) amount of capital can completely replace any human worker, though it is a weaker condition than perfect substitutability.66

- In the task-based model of Aghion et al. (2017), automating a fixed set of tasks leads to only a temporary boost in growth. A constant stream of automation (or full automation) is needed to maintain faster growth.67

- Appendix C discusses the conditions for super-exponential growth in a variety of such models (see here).

Overall, what level of AI would be sufficient for explosive growth? Based on a number of models, I think that explosive growth would require AI that substantially accelerates the automation of a very wide range of tasks in the production of goods and services, R&D, and the implementation of new technologies. The more rapid the automation, and the wider the range of tasks, the faster growth could become.68

It is worth emphasizing that these models are simple extensions of standard growth models; the only change is to assume that capital can substitute more effectively for labor. With this assumption, semi-endogenous models with reasonable parameter values predict explosive growth, as do exogenous growth models with constant returns to labor and capital.69

A draft literature review on the possible growth effects of advanced AI includes many models in which AI increases growth via this mechanism (capital substituting more effectively for labor). In addition, it discusses several other mechanisms by which AI could increase growth, e.g. changing the mechanics of idea discovery and changing the savings rate.70

4.4.3 Combining the two perspectives

Both the ‘AI robots’ perspective and the ‘AI as a form of capital’ perspective make a similar point: if advanced AI can substitute very effectively for human workers, it could precipitate explosive growth by increasing the returns to accumulable inputs. In many growth models with plausible parameter values this scenario leads to explosive growth.

Previously, we said we should not trust long-run explosive models as they unrealistically assume population is accumulable. We can now qualify this claim. We should not trust these models unless AI systems are developed that can replace human workers.

4.4.4 Could sufficiently advanced AI be developed in time for explosive growth to occur this century?

This is not a focus of this report, but other evidence suggests that this scenario is plausible:

- A survey of AI practitioners asked them about the probability of developing AI that would enable full automation.71 Averaging their responses, they assigned ~30% or ~60% probability to this possibility by 2080, depending on how the question is framed.72

- My colleague Joe Carlsmith’s report estimates the computational power needed to match the human brain. Based on this and other evidence, my colleague Ajeya Cotra’s draft report estimates when we’ll develop human-level AI; she finds we’re ~70% likely to do so by 2080.

- In a previous report I estimated the probability of developing human-level AI based on analogous historical developments. My framework finds a ~15% probability of human-level AI by 2080.

4.5 Objections to explosive growth

My responses are brief, and I encourage interested readers to read Appendix A, which discusses these and other objections in more detail.

4.5.1 What about diminishing returns to technological R&D?

Objection: There is good evidence that ideas are getting harder to find. In particular, it seems that exponential growth in the number of researchers is needed to sustain constant exponential growth in technology (TFP).

Response: The models I have been discussing take this dynamic into account. They find that, with realistic parameter values, increasing returns to accumulable inputs is powerful enough to overcome diminishing returns to technological progress if AI systems can replace human workers. This is because the feedback loop ‘more output → more labor (AI systems) → more output’ allows research effort to grow super-exponentially , leading to super-exponential TFP growth despite ideas becoming harder to find (see more).

Related objection: You claimed above that the demographic transition caused super-exponential growth to stop. This is why you think advanced AI could restart super-exponential growth. But perhaps the real cause was that we hit more sharply diminishing returns to R&D in the 20th century.

Response: This could be true. Even if true, though, this wouldn’t rule out explosive growth occurring this century: it would still be possible that returns to R&D will become less steep in the future and the historical pattern of super-exponential growth will resume.73

However, I investigated this possibility and came away thinking that diminishing returns probably didn’t explain the end of super-exponential growth.

- Various endogenous growth models suggest that, had population remained accumulable throughout the 20th century, growth would have been super-exponential despite the sharply diminishing returns to R&D that we have observed.

- Conversely, these models suggest that the demographic transition would have ended super-exponential growth even if diminishing returns to R&D had been much less steep.

- This all suggests that the demographic transition, not diminishing returns, is the crucial factor in explaining the end of super-exponential growth (see more).

That said, I do think it’s reasonable to be uncertain about why super-exponential growth came to an end. The following diagram summarizes some possible explanations for the end of super-exponential growth in the 20th century, and their implications for the plausibility of explosive growth this century.

4.5.2 30% growth is very far out of the observed range

Objection: Explosive growth is so far out of the observed range! Even when China was charging through catch-up growth it never sustained more than 10% growth. So 30% is out of the question.

Response: Ultimately, this is not a convincing objection. If you had applied this reasoning in the past, you would have been repeatedly led into error. The 0.3% GWP growth of 1400 was higher than the previously observed range, and the 3% GWP growth of 1900 was higher than the previously observed range. There is historical precedent for growth increasing to levels far outside of the previously observed range (see more).

4.5.3 Models predicting explosive growth have implausible implications

Objection: Endogenous growth models imply output becomes infinite in a finite time. This is impossible and we shouldn’t trust such unrealistic models.

Response: First, models are always intended to apply only within bounded regimes; this doesn’t mean they are bad models. Clearly these endogenous growth models will stop applying before we reach infinite output (e.g. when we reach physical limits); they might still be informative before we reach this point. Secondly, not all models predicting explosive growth have this implication; some models imply that growth will rise without limit but never go to infinity (see more).

4.5.4 There’s no evidence of explosive growth in any economic sub-sector

Objection: If GWP growth rates were soon going to rise to 30%, we’d see signs of this in the current economy. But we don’t – Nordhaus (2021) looks for such signs and doesn’t find them.

Response: The absence of these signs in macroeconomic data is reason to doubt explosive growth will occur within the next couple of decades. Beyond this time frame, it is hard to draw conclusions. Further, it’s possible that the recent fast growth of machine learning is an early sign of explosive growth (see more).

4.5.5 Why think AI automation will be different to past automation?

Objection: We have been automating parts of our production processes and our R&D processes for many decades, without growth increasing. Why think AI automation will be different?

Response: To cause explosive growth, AI would have to drive much faster and widespread automation than we have seen over the previous century. If AI ultimately enabled full automation, models of automation suggest that the consequences for growth would be much more radical than those from the partial automation we have had in the past (see more).

4.5.6 Automation limits

Objection: Aghion et al. (2017) considers a model where growth is bottlenecked by tasks that are essential but hard to improve. If we’re unable to automate just one essential task, this would prevent explosive growth.

Response: This correctly highlights that AI may lead to very widespread automation without explosive growth occurring. One possibility is that an essential task isn’t automated because we care intrinsically about having a human perform the task, e.g. a carer.

I don’t think this provides a decisive reason to rule out explosive growth. Firstly, it’s possible that we will ultimately automate all essential tasks, or restructure work-flows to do without them. Secondly, there could be a significant boost in growth rates, at least temporarily, even without full automation (see more).74

4.5.7 Limits to how fast a human economy can grow

Objection: The economic models predicting explosive growth ignore many possible bottlenecks that might slow growth. Examples include regulation of the use of AI systems, extracting and transporting important materials, conducting physical experiments on the world needed to make social and technological progress, delays for humans to adjust to new technological and social innovations, fundamental limits to how advanced technology can become, fundamental limits of how quickly complex systems can grow, and other unanticipated bottlenecks.75

Response: I do think that there is some chance that one of these bottlenecks will prevent explosive growth. On the other hand, no individual bottleneck is certain to apply and there are some reasons to think we could grow at 30% per year:

- There will be huge incentives to remove bottlenecks to growth, and if there’s just one country that does this it would be sufficient.

- Large human economies have already grown at 10% per year (admittedly via catch up growth), explosive growth would only be 3X as fast.76

- Humans oversee businesses growing at 30% per year, and individual humans can adjust to 30% annual increases in wealth and want more.

- AI workers could run much faster than human workers.77

- Biological populations can grow faster than 30% a year, suggesting that it is physically possible for complex systems to grow this quickly.78

The arguments on both sides are inconclusive and inevitably speculative. I feel deeply uncertain about how fast growth could become before some bottleneck comes into play, but personally place less than 50% probability on a bottleneck preventing 30% GWP growth. That said, I have spent very little time thinking about this issue, which would be a fascinating research project in its own right.

4.5.8 How strong are these objections overall?

I find some of the objections unconvincing:

- Diminishing returns. The models implying that full automation would lead to explosive growth take diminishing returns into account.

- 30% is far from the observed range. Ruling out 30% on this basis would have led us astray in the past by ruling out historical increases in growth.

- Models predicting explosive growth have implausible implications. We need not literally believe that output will go to infinity to trust these models, and there are models that predict explosive growth without this implication.

I find other objections partially convincing:

- No evidence of explosive growth in any economic sub-sector. Trends in macroeconomic variables suggest there won’t be explosive growth in the next 20 years.

- Automation limits. A few essential but unautomated tasks might bottleneck growth, even if AI drives widespread automation.

- Limits to how fast a human economy can grow. There are many possible bottlenecks on the growth of a human economy; we have limited evidence on whether any of these would prevent 30% growth in practice.

Personally, I assign substantial probability (> 1/3) that the AI robot scenario would lead to explosive growth despite these objections.

4.6 Conclusion

The standard story points to the constant exponential growth of frontier GDP/capita over the last 150 years. Theoretical considerations suggest 21st century growth is more likely to be sub-exponential than exponential, as slowing population growth leads to slowing technological progress. I find this version of the standard story highly plausible.

The explosive growth story points to the significant increases in GWP growth over the last 10,000 years. It identifies an important mechanism explaining super-exponential growth before 1900: increasing returns to accumulable inputs. If AI allows capital to substitute much more effectively for human labor, a wide variety of models predict that increasing returns to accumulable inputs will again drive super-exponential growth. On this basis, I think that ‘advanced AI drives explosive growth’ is a plausible scenario from the perspective of economics.

It is reasonable to be skeptical of all the growth models discussed in the report. It is hard to get high quality evidence for or against different growth models, and empirical efforts to adjudicate between them often give conflicting results.79 It is possible that we do not understand key drivers of growth. Someone with this view should probably adopt the ignorance story: growth has increased significantly in the past, we don’t understand why, and so we should not rule out significant increases in growth occurring in the future. If someone wishes to rule out explosive growth, they must positively reject any theory that implies it is plausible; this is hard to do from a position of ignorance.

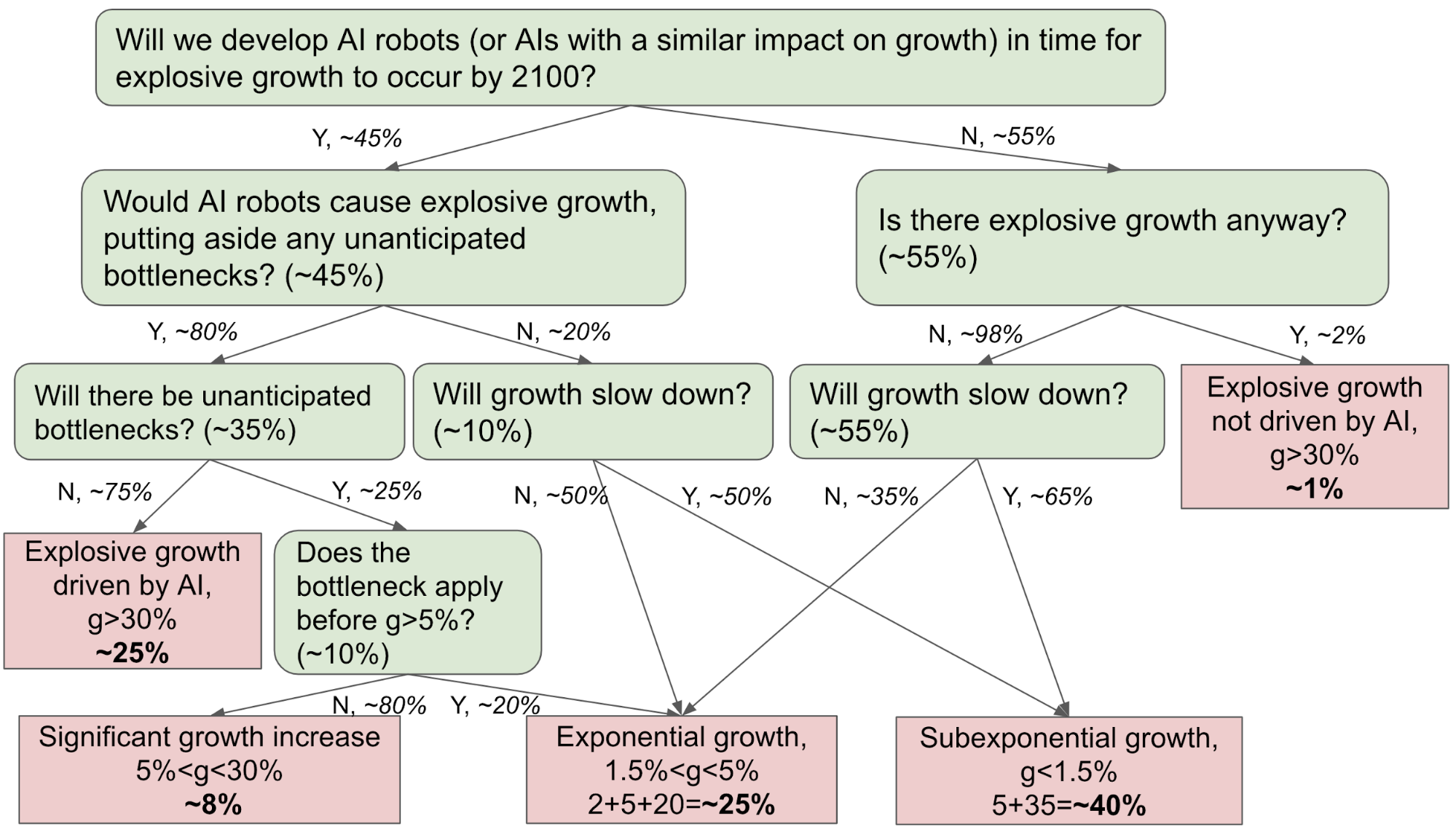

Overall, I assign > 10% probability to explosive growth occurring this century. This is based on > 30% that we develop sufficiently advanced AI in time, and > 1/3 that explosive growth actually occurs conditional on this level of AI being developed.80 Barring this kind of progress in AI, I’m most inclined to expect sub-exponential growth. In this case, projecting GWP is closely entangled with forecasting the development of advanced AI.

4.6.1 Are we claiming ‘this time is different’?

If you extrapolate the returns from R&D efforts over the last century, you will not predict that sustaining these efforts might lead to explosive growth this century. Achieving 3% growth in GDP/capita, let alone 30%, seems like it will be very difficult. When we forecast non-trivial probability of explosive growth, are we essentially claiming ‘this time will be different because AI is special’?

In a certain sense, the answer is ‘yes’. We’re claiming that economic returns to AI R&D will ultimately be much greater than the average R&D returns over the past century.

In another sense, the answer is ‘no’. We’re suggesting that sufficiently powerful AI would, by allowing capital to replace human labor, lead to a return to a dynamic present throughout much of human history where labor was accumulable. With this dynamic reestablished, we’re saying that ‘this time will be the same’: this time, as before, the economic consequence of an accumulable labor force will be super-exponential growth.

4.7 Further research

- Why do experts rule out explosive growth? This report argues that one should not confidently rule out explosive growth. In particular, I suggest assigning > 10% to explosive growth this century. Experts seem to assign much lower probabilities to explosive growth. Why is this? What do they make of the arguments of the report?

- Investigate evidence on endogenous growth theory.

- Assess Kremer’s rank-correlation argument. Does the ‘more people → more innovation’ story actually explain the rank correlation, or are there other better explanations?

- Investigate theories of long-run growth. How important is the increasing returns mechanism compared to other mechanisms in explaining the increase in long-run growth?

- Empirical evidence on different growth theories. What can 20th century empirical evidence tell us about the plausibility of various growth theories? I looked into this briefly and it seemed as if the evidence did not paint a clear picture.

- Are we currently seeing the early signs of explosive GDP growth?

- How long before explosive growth of GDP would we see signs of it in some sector of the economy?

- What exactly would these signs look like? What can we learn from the economic signs present in the UK before the onset of the industrial revolution?

- Does the fast growth of current machine learning resemble these signs?

- Do returns to technological R&D change over time? How uneven has the technological landscape been in the past? Is it common to have long periods where R&D progress is difficult punctuated by periods where it is easier? More technically, how much does the ‘fishing out’ parameter change over time?

- Are there plausible theories that predict exponential growth? Is there a satisfactory explanation for the constancy of frontier per capita growth in the 20th century that implies that this trend will continue even if population growth slows? Does this explanation avoid problematic knife-edge conditions?

- Is there evidence of super-exponential growth before the industrial revolution? My sensitivity analysis suggested that there is, but Ben Garfinkel did a longer analysis and reached a different conclusion. Dig into this apparent disagreement.

- Length of data series: How long must the data series be for there to be clear evidence of super-exponential growth?

- Type of data: How much difference does it make if you use population vs GWP data?

- How likely is a bottleneck to prevent an AI-driven growth explosion?

5. Structure of the rest of the report

The rest of the report is not designed to be read end to end. It consists of extended appendices that expand upon specific claims made in the main report. Each appendix is designed so that it can be read end to end.

The appendices are as follows:

- Objections to explosive growth (see here).

- This is a long section, which contains many of the novel contributions of this report.

- It’s probably the most important section to read after the main report, expanding upon objections to explosive growth in detail.

- Exponential growth is a knife-edge condition in many growth models (see here).

- I investigate one reason to think long-run growth won’t be exponential: exponential growth is a knife-edge condition in many economic growth models.

- This is not a core part of my argument for explosive growth.

- The section has three key takeaways:

- Sub-exponential is more plausible than exponential growth, out to 2100.

- There don’t seem to be especially strong reasons to expect exponential growth, raising the theoretical plausibility of stagnation and of explosive growth.

- Semi-endogenous models offer the best explanation of the exponential trend. When you add to these models the assumption that capital and substitute effectively for human labor, they predict explosive growth. This raises my probability that advanced AI could drive explosive growth.

- Conditions for super-exponential growth (see here).

- I report the conditions for super-exponential growth (and thus for explosive growth) in a variety of economic models.

- These include models of very long-run historical growth, and models designed to explain modern growth altered by the assumption that capital can substitute for labor.

- I draw some tentative conclusions about what kinds of AI systems may be necessary for explosive growth to occur.

- This section is math-heavy.

- Ignorance story (see here).

- I briefly explain what I call the ‘ignorance story’, how it might relate to the view that there was a step-change in growth around the industrial revolution, and how much weight I put on this story.

- Standard story (see here).

- I explain some of the models used to project long-run GWP by the standard story.

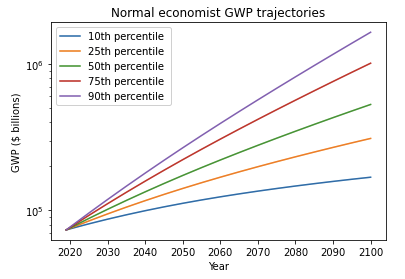

- These models forecast GWP/capita to grow at about 1-2% annually out to 2100.

- I find that the models typically only use post-1900 data and assume that technology will grow exponentially. However, the models provide no more support for this claim than is found in the uninterpreted empirical data.

- Other endogenous models do provide support for this claim. I explore such models in Appendix B.

- I conclude that these models are suitable for projecting growth to 2100 on the assumption that 21st growth resembles 20th century growth. They are not well equipped to assess the probability of a structural break occurring, after which the pattern of 20th growth no longer applies.

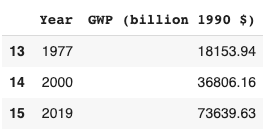

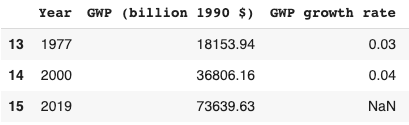

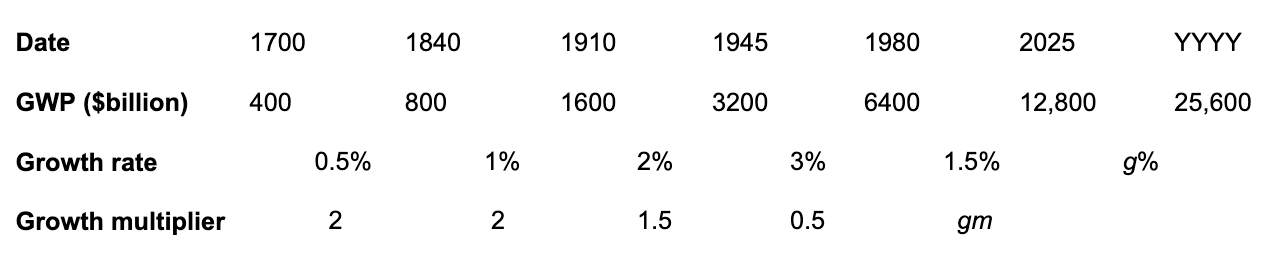

- Explosive growth before 2100 is robust to accounting for today’s slow GWP growth (see here)

- Long-run explosive models predict explosive growth within a few decades. From an outside view perspective81, it is reasonable to put some weight on such models. But these models typically imply growth should already be at ~7%, which we know is false.

- I adjust for this problem, developing a ‘growth multiplier’ model. It maintains the core mechanism driving increases in growth in the explosive growth story, but anchors its predictions to the fact that GWP growth over the last 20 years has been about 3.5%. As a result, its prediction of explosive growth is delayed by about 40 years.

- From an outside view perspective, I personally put more weight on the ‘growth multiplier model’ than Roodman’s long-run explosive model.

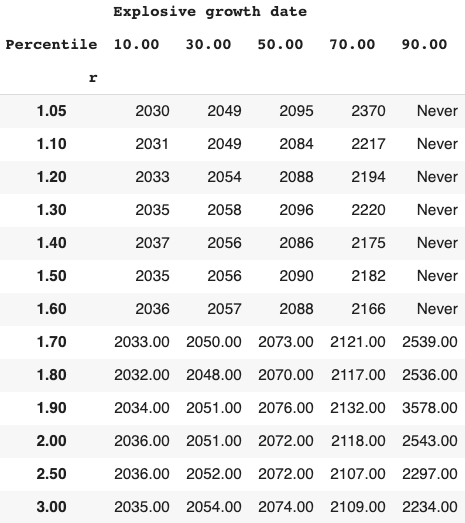

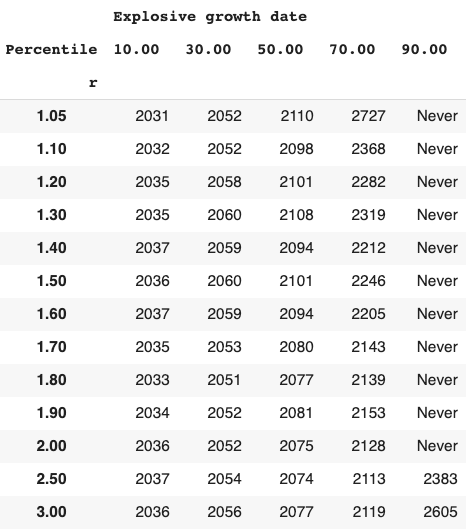

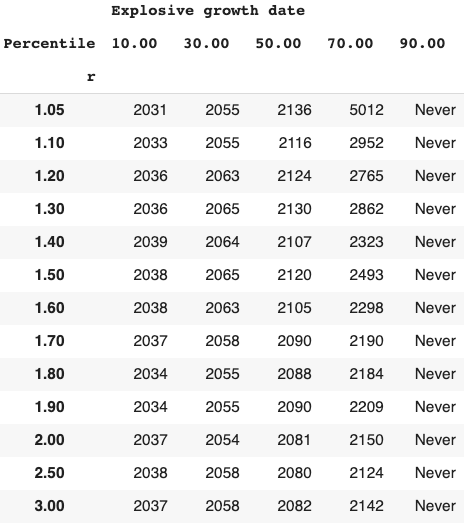

- In this section, I explain the growth multiplier model and conduct a sensitivity analysis on its results.

- How I decide my probability of explosive growth (see here).

- Currently I put ~30% on explosive growth occurring by 2100. This section explains my reasoning.

- Links to reviews of the report (see here).

- Technical appendices (see here).

- These contain a number of short technical analyses that support specific claims in the report.

- I only expect people to read these if they follow a link from another section.

6. Appendix A: Objections to explosive growth

Currently, I don’t find any of these objections entirely convincing. Nonetheless, taken together, the objections shift my confidence away from the explosive growth story and towards the ignorance story instead.

I initially discuss general objections to explosive growth, then objections targeted specifically at using long-run growth data to argue for explosive growth.

Here are the objections, in the order in which I address them:

General objections to explosive growth

Partially convincing objections

No evidence of explosive growth in any subsector of the economy

Growth models predicting explosive growth are unconfirmed

Why think AI automation will be different to past automation?

Automation limits

Diminishing returns to R&D (+ ‘search limits’)

Baumol tasks

Ultimately unconvincing objections

Explosive growth is so far out of the observed range

Models predicting explosive growth have unrealistic implications

Objections to using long-run growth to argue for explosive growth

Partially convincing objections

The ancient data points are unreliable

Recent data shows that super-exponential growth in GWP has come to an end

Frontier growth shows a clear slowdown

Slightly convincing objections

Long-run explosive models don’t anchor predictions to current growth levels

Long-run explosive models don’t discount pre-modern data

Long-run explosive models don’t seem to apply to time before the agricultural revolution; why expect them to apply to a new future growth regime?

6.1 General objections to explosive growth

6.1.1 No evidence of explosive growth in any sub sector of the economy

Summary of objection: If GWP growth rates were soon going to rise to 30%, we’d see signs of this in the current economy. We’d see 30% growth in sectors of the economy that have the potential to account for the majority of economic activity. For example, before the industrial revolution noticeably impacted GDP, the manufacturing sector was growing much faster than the rest of the economy. But no sector of the economy shows growth anywhere near 30%; so GWP won’t be growing at 30% any time soon.

Response: I think this objection might rule out explosive growth in the next few decades, but I’d need to see further investigation to be fully convinced of this.

I agree that there should be signs of explosive growth before it registers on any country’s GDP statistics. Currently, this makes me somewhat skeptical that there will be explosive growth in the next two decades. However, I’m very uncertain about this due to being ignorant about several key questions.

- How long before explosive growth of GDP would we see signs of it in some sector of the economy?

- What exactly would these signs look like?

- Are there early signs of explosive growth in the economy?

I’m currently very unsure about all three questions above, and so am unsure how far into the future this objection rules out explosive growth. The next two sections say a little more about the third question.

6.1.1.1.Does the fast growth of machine learning resemble the early signs of explosive growth?

With regards the penultimate question, Open Philanthropy believes that there is a non-negligible chance (> 15%) of very powerful AI systems being developed in the next three decades. The economic impact of machine learning is already growing fast with use in Google’s search algorithm, targeted ads, product recommendations, translation, and voice recognition. One recent report forecasts an average of 42% annual growth of the deep learning market between 2017 and 2023.

Of course, many small sectors show fast growth for a time and do not end up affecting the overall rate of GWP growth! It is the further fact that machine learning seems to be a general purpose technology, whose progress could ultimately lead to the automation of large amounts of cognitive labor, that raises the possibility that its fast growth might be a precursor of explosive growth.

6.1.1.2 Are there signs of explosive growth in US macroeconomic variables?

Nordhaus (2021) considers the hypothesis that explosive growth will be driven by fast productivity growth in the IT sector. He proposes seven empirical tests of this hypothesis. The tests make predictions about patterns in macroeconomic variables like TFP, real wages, capital’s share of total income, and the price and total amount of capital. He runs these tests with US data. Five of the tests suggest that we’re not moving towards explosive growth; the other two suggest we’re moving towards it only very slowly, such that a naive extrapolation implies explosive growth will happen around 2100.82

Nordhaus runs three of his tests with data specific to the IT sector.83 This data is more fine-grained than macroeconomic variables, but it’s still much broader than machine learning as a whole. The IT data is slightly more optimistic about explosive growth, but still suggests that it won’t happen within the next few decades.84

These empirical tests suggest that, as of 2014, the patterns in US macroeconomic variables are not what you’d expect if explosive growth driven by AI R&D was happening soon. But how much warning should we expect these tests to give? I’m not sure. Nordhaus himself says that his ‘conclusion is tentative and is based on economic trends to date’. I would expect patterns in macroeconomic variables to give more warning than trends in GWP or GDP, but less warning than trends in the economic value of machine learning. Similarly, I’d expect IT-specific data to give more warning than macroeconomic variables, but less than data specific to machine learning.

Brynjolfsson (2017)85 suggests economic effects will lag decades behind the potential of the technology’s cutting edge, and that national statistics could underestimate the longer term economic impact of technologies. As a consequence, disappointing historical data should not preclude forward-looking technological optimism.86

Overall, Nordhaus’ analysis reduces my probability that we will see explosive growth by 2040 (three decades after his latest data point) but it doesn’t significantly change my probability that we see it in 2050 – 2100. His analysis leaves open the possibility that we are seeing the early signs of explosive growth in data relating to machine learning specifically.

6.1.2 The evidence for endogenous growth theories is weak

Summary of objection: Explosive growth from sufficiently advanced AI is predicted by certain endogenous growth models, both theories of very long-run growth and semi-endogenous growth models augmented with the assumption that capital can substitute for labor.

The mechanism posited by these models is increasing returns to accumulable inputs.

But these endogenous growth models, and the mechanisms behind them, have not been confirmed. So we shouldn’t pay particular attention to their predictions. In fact, these models falsely predict that larger economies should grow faster.

Response summary:

- There is some evidence for endogenous growth models.

- Endogenous growth models do not imply that larger economies should grow faster than smaller ones.

- As well as endogenous growth models, some exogenous growth models predict that AI could bring about explosive growth by increasing the importance of capital accumulation: more output → more capital → more output →… (see more).

The rest of this section goes into the first two points in more detail.

6.1.2.1 Evidence for endogenous growth theories

6.1.2.1.1 Semi-endogenous growth models

These are simply standard semi-endogenous growth theories. Under realistic parameter values, they predict explosive growth when you add the assumption that capital can substitute for labor (elasticity of substitution > 1).

What evidence is there for these theories?

- Semi-endogenous growth theories are inherently plausible. They extend standard exogenous theories with the claim that directed human effort can lead to technological progress.

- Appendix B argues that semi-endogenous growth theories offer a good explanation of the recent period of exponential growth.

- However, there have not been increasing returns to accumulable inputs in the recent period of exponential growth because labor has not been accumulable. This might make us doubt the predictions of semi-endogenous models in a situation in which there are increasing returns to accumulable inputs, and thus doubt their prediction of explosive growth.

6.1.2.1.2 Theories of very long-run growth featuring increasing returns

Some theories of very long-run growth feature increasing returns to accumulable inputs, as they make technology accumulable and labor accumulable (in the sense that more output → more people). If AI makes labor accumulable again, these theories predict there will be explosive growth under realistic parameter values.

What evidence is there for these theories?

- These ‘increasing returns’ models seem to correctly describe the historical pattern of accelerating growth.87 However, the data is highly uncertain and it is possible that growth did not accelerate between 5000 BCE and 1500. If so, this would undermine the empirical evidence for these theories.

- Other evidence comes from Kremer (1993). He looks at five regions – Flinders Island, Tasmania, Australia, the Americas and the Eurasian continent – that were isolated from one another 10,000 years ago and had significantly varying populations. Initially all regions contained hunter gathers, but by 1500 CE the technology levels of these regions had significantly diverged. Kremer shows that the 1500 technology levels of these regions were perfectly rank-correlated with their initial populations, as predicted by endogenous growth models.

6.1.2.2 Endogenous growth models are not falsified by the faster growth of smaller economies.

Different countries share their technological innovations. Smaller economies can grow using the innovations of larger economies, and so the story motivating endogenous growth models does not predict that countries with larger economies should grow faster. As explained by Jones (1997):

The Belgian economy does not grow solely or even primarily because of ideas invented by Belgians… this fact makes it difficult… to test the model with cross-section evidence [of different countries across the same period of time]. Ideally one needs a cross-section of economies that cannot share ideas.

In other words, the standard practice of separating technological progress into catch-up growth and frontier growth is fully consistent with applying endogenous growth theories to the world economy. Endogenous growth models are not falsified by the faster growth of smaller economies.

6.1.3 Why think AI automation will be different to past automation?

Objection: Automation is nothing new. Since 1900, there’s been massive automation in both production and R&D (e.g. no more calculations by hand). But growth rates haven’t increased. Why should future automation have a different effect?

Response: If AI merely continues the previous pace of automation, then indeed there’s no particular reason to think it would cause explosive growth. However, if AI allows us to approach full automation, then it may well do so.

A plausible explanation for why previous automation hasn’t caused explosive growth is that growth ends up being bottlenecked by non-automated tasks. For example, suppose there are three stages in the production process for making a cheese sandwich: make the bread, make the cheese, combine the two together. If the first two stages are automated and can proceed much more quickly, the third stage can still bottleneck the speed of sandwich production if it isn’t automated. Sandwich production as a whole ends up proceeding at the same pace as the third stage, despite the automation of the first two stages.

Note, whether this dynamic occurs depends on people’s preferences, as well as on the production possibilities. If people were happy to just consume bread by itself and cheese by itself, all the necessary steps would have been automated and output could have grown more quickly.

The same dynamic as with sandwich production can happen on the scale of the overall economy. For example, hundreds of years ago agriculture was a very large share of GDP. Total GDP growth was closely related to productivity growth in agriculture. But over the last few hundred years, the sector has been increasingly automated and its productivity has risen significantly. People in developed countries now generally have plenty of food. But as a result, GDP in developed countries is now more bottlenecked by things other than agriculture. Agriculture is now only a small share of GDP, and so productivity gains in agriculture have little effect on overall GDP growth.

Again this relates to people’s preferences. Once people have plenty of food, they value further food much less. This reduces the price of food, and reduces agriculture’s share of GDP. If people had wanted to consume more and more food without limit, agriculture’s share of the economy would not have fallen so much.88

So, on this account, the reason why automation doesn’t lead to growth increases is because the non-automated sectors bottleneck growth.

Clearly, this dynamic won’t apply if there is full automation, for example if we develop AI systems that can replace human workers in any task. There would be no non-automated sectors left to bottleneck growth. This insight is consistent with models of automation, for example Growiec (2020) and Aghion et al. (2017) – they find that the effect of full automation is qualitatively different from that of partial automation and leads to larger increases in growth.

The next section discusses whether full automation is plausible, and whether we could have explosive growth without it.

6.1.4 Automation limits

Objection: Aghion et al. (2017) considers a growth model that does a good job in explaining the past trends in automation and growth. In particular, their model is consistent with the above explanation for why automation has not increased growth in the past: growth ends up being bottlenecked by non-automated tasks.

In their model, output is produced by a large number of tasks that are gross complements. Intuitively, this means that each task is essential. More precisely, if we hold performance on one task fixed, there is a limit to how large output can be no matter how well we perform other tasks.89 As a result, ‘output and growth end up being determined not by what we are good at, but by what is essential but hard to improve’.