Open Philanthropy is interested in when AI systems will be able to perform various tasks that humans can perform (“AI timelines”). To inform our thinking, I investigated what evidence the human brain provides about the computational power sufficient to match its capabilities. This is the full report on what I learned. A medium-depth summary is available here. The executive summary below gives a shorter overview.

1 Introduction

1.1 Executive summary

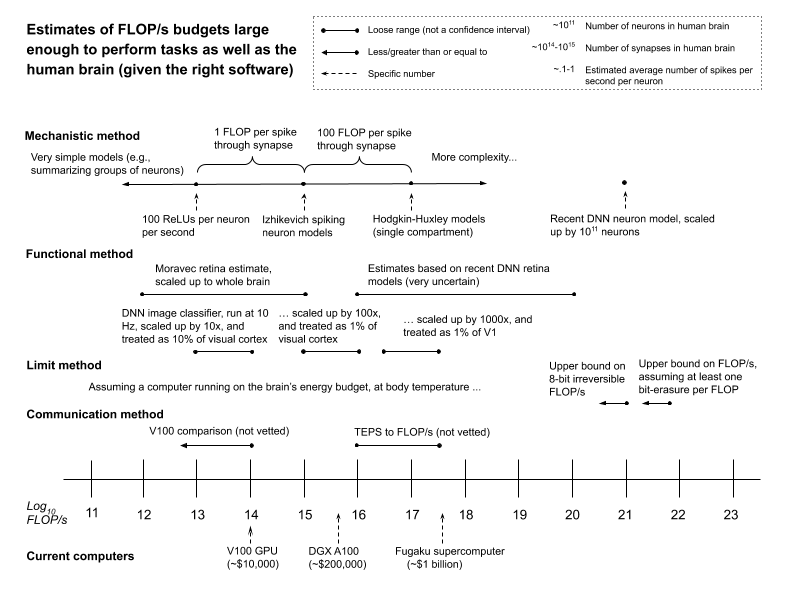

Let’s grant that in principle, sufficiently powerful computers can perform any cognitive task that the human brain can. How powerful is sufficiently powerful? I investigated what we can learn from the brain about this. I consulted with more than 30 experts, and considered four methods of generating estimates, focusing on floating point operations per second (FLOP/s) as a metric of computational power.

These methods were:

- Estimate the FLOP/s required to model the brain’s mechanisms at a level of detail adequate to replicate task-performance (the “mechanistic method”).1

- Identify a portion of the brain whose function we can already approximate with artificial systems, and then scale up to a FLOP/s estimate for the whole brain (the “functional method”).

- Use the brain’s energy budget, together with physical limits set by Landauer’s principle, to upper-bound required FLOP/s (the “limit method”).

- Use the communication bandwidth in the brain as evidence about its computational capacity (the “communication method”). I discuss this method only briefly.
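The arithmetic behind the limit method can be sketched in a few lines. This is a minimal illustration under stated assumptions: the ~20 W power budget and 310 K temperature are illustrative inputs that are not given in this section, and the function names are hypothetical. Landauer's principle bounds bit erasures, not FLOP/s, so turning the result into a FLOP/s bound needs a further assumption about how many bit erasures a FLOP requires.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23  # Boltzmann constant (exact SI value)

# Illustrative assumptions, not figures taken from this section.
ASSUMED_BRAIN_POWER_W = 20.0
ASSUMED_TEMPERATURE_K = 310.0


def landauer_energy_per_bit(temperature_k):
    """Minimum energy in joules dissipated by erasing one bit: k * T * ln(2)."""
    return BOLTZMANN_J_PER_K * temperature_k * math.log(2)


def max_bit_erasures_per_second(power_w, temperature_k):
    """Upper bound on bit erasures per second for a given power budget."""
    return power_w / landauer_energy_per_bit(temperature_k)


bound = max_bit_erasures_per_second(ASSUMED_BRAIN_POWER_W, ASSUMED_TEMPERATURE_K)
print(f"{bound:.1e} bit erasures per second")
```

With these inputs the bound comes out at several times 10^21 bit erasures per second.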

None of these methods are direct guides to the minimum possible FLOP/s budget, as the most efficient ways of performing tasks need not resemble the brain’s ways, or those of current artificial systems. But if sound, these methods would provide evidence that certain budgets are, at least, big enough (if you had the right software, which may be very hard to create – see discussion in section 1.3).2

Here are some of the numbers these methods produce, plotted alongside the FLOP/s capacity of some current computers.

These numbers should be held lightly. They are back-of-the-envelope calculations, offered alongside initial discussion of complications and objections. The science here is very far from settled.

For those open to speculation, though, here’s a summary of what I’m taking away from the investigation:

- Mechanistic method estimates suggesting that 10^13-10^17 FLOP/s is enough to match the human brain’s task-performance seem plausible to me. This is partly because various experts are sympathetic to these estimates (others are more skeptical), and partly because of the direct arguments in their support. Some considerations from this method point to higher numbers; and some, to lower numbers. Of these, the latter seem to me stronger.3

- I give less weight to functional method estimates, primarily due to uncertainties about (a) the FLOP/s required to fully replicate the functions in question, (b) what the relevant portion of the brain is doing (in the case of the visual cortex), and (c) differences between that portion and the rest of the brain (in the case of the retina). However, I take estimates based on the visual cortex as some weak evidence that the mechanistic method range above (10^13-10^17 FLOP/s) isn’t much too low. Some estimates based on recent deep neural network models of retinal neurons point to higher numbers, but I take these as even weaker evidence.

- I think it unlikely that the required number of FLOP/s exceeds the bounds suggested by the limit method. However, I don’t think the method itself airtight. Rather, I find some arguments in the vicinity persuasive (though not all of them rely directly on Landauer’s principle); various experts I spoke to (though not all) were quite confident in these arguments; and other methods seem to point to lower numbers.

- Communication method estimates may well prove informative, but I haven’t vetted them. I discuss this method mostly in the hopes of prompting further work.

Overall, I think it more likely than not that 10^15 FLOP/s is enough to perform tasks as well as the human brain (given the right software, which may be very hard to create). And I think it unlikely (<10%) that more than 10^21 FLOP/s is required.4 But I’m not a neuroscientist, and there’s no consensus in neuroscience (or elsewhere).

I offer a few more specific probabilities, keyed to one specific type of brain model, in the appendix.5 My current best-guess median for the FLOP/s required to run that particular type of model is around 10^15 (note that this is not an estimate of the FLOP/s uniquely “equivalent” to the brain – see discussion in section 1.6).

As can be seen from the figure above, the FLOP/s capacities of current computers (e.g., a V100 at ~10^14 FLOP/s for ~$10,000, the Fugaku supercomputer at ~4×10^17 FLOP/s for ~$1 billion) cover the estimates I find most plausible.6 However:

- Computers capable of matching the human brain’s task performance would also need to meet further constraints (for example, constraints related to memory and memory bandwidth).

- Matching the human brain’s task-performance requires actually creating sufficiently capable and computationally efficient AI systems, and I do not discuss how hard this might be (though note that training an AI system to do X, in machine learning, is much more resource-intensive than using it to do X once trained).7

So even if my best-guesses are right, this does not imply that we’ll see AI systems as capable as the human brain anytime soon.
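The hardware comparison above reduces to simple ratios, sketched below. The FLOP/s and price figures are the approximate ones quoted in the text; the dictionary layout and function name are hypothetical. The sketch compares FLOP/s only, and says nothing about memory, memory bandwidth, or software.

```python
TARGET_FLOPS = 1e15  # the "more likely than not" budget from the summary

# Approximate figures quoted in the text: (FLOP/s, price in US dollars).
MACHINES = {
    "V100": (1e14, 1e4),
    "Fugaku": (4e17, 1e9),
}


def compare_to_target(flops, price_usd, target=TARGET_FLOPS):
    """Return the machine's FLOP/s as a multiple of the target, and dollars per FLOP/s."""
    return {
        "multiple_of_target": flops / target,
        "usd_per_flops": price_usd / flops,
    }


for name, (flops, price_usd) in MACHINES.items():
    summary = compare_to_target(flops, price_usd)
    print(
        f"{name}: {summary['multiple_of_target']:g}x the target, "
        f"${summary['usd_per_flops']:.1e} per FLOP/s"
    )
```

On these figures a single V100 supplies about a tenth of the 10^15 FLOP/s budget, while Fugaku supplies a few hundred times that budget.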

Acknowledgements: This report emerged out of Open Philanthropy’s engagement with some arguments suggested by one of our technical advisors, Dario Amodei, in the vein of the mechanistic/functional methods (see citations throughout the report for details). However, my discussion should not be treated as representative of Dr. Amodei’s views; the project eventually broadened considerably; and my conclusions are my own. My thanks to Dr. Amodei for prompting the investigation, and to Open Philanthropy’s technical advisors Paul Christiano and Adam Marblestone for help and discussion with respect to different aspects of the report. I am also grateful to the following external experts for talking with me. In neuroscience: Stephen Baccus, Rosa Cao, E.J. Chichilnisky, Erik De Schutter, Shaul Druckmann, Chris Eliasmith, davidad (David A. Dalrymple), Nick Hardy, Eric Jonas, Ilenna Jones, Ingmar Kanitscheider, Konrad Kording, Stephen Larson, Grace Lindsay, Eve Marder, Markus Meister, Won Mok Shim, Lars Muckli, Athanasia Papoutsi, Barak Pearlmutter, Blake Richards, Anders Sandberg, Dong Song, Kate Storrs, and Anthony Zador. In other fields: Eric Drexler, Owain Evans, Michael Frank, Robin Hanson, Jared Kaplan, Jess Riedel, David Wallace, and David Wolpert. 
My thanks to Dan Cantu, Nick Hardy, Stephen Larson, Grace Lindsay, Adam Marblestone, Jess Riedel, and David Wallace for commenting on early drafts (or parts of early drafts) of the report; to six other neuroscientists (unnamed) for reading/commenting on a later draft; to Ben Garfinkel, Catherine Olsson, Chris Sommerville, and Heather Youngs for discussion; to Nick Beckstead, Ajeya Cotra, Allan Dafoe, Tom Davidson, Owain Evans, Katja Grace, Holden Karnofsky, Michael Levine, Luke Muehlhauser, Zachary Robinson, David Roodman, Carl Shulman, Bastian Stern, and Jacob Trefethen for valuable comments and suggestions; to Charlie Giattino, for conducting some research on the scale of the human brain; to Asya Bergal for sharing with me some of her research on Landauer’s principle; to Jess Riedel for detailed help with the limit method section; to AI Impacts for sharing some unpublished research on brain-computer equivalence; to Rinad Alanakrih for help with image permissions; to Robert Geirhos, IEEE, and Sage Publications for granting image permissions; to Jacob Hilton and Gregory Toepperwein for help estimating the FLOP/s costs of different models; to Hannah Aldern and Anya Grenier for help with recruitment; to Eli Nathan for extensive help with the website and citations; to Nik Mitchell, Andrew Player, Taylor Smith, and Josh You for help with conversation notes; and to Nick Beckstead for guidance and support throughout the investigation.

1.2 Caveats

(This section discusses some caveats about the report’s epistemic status, and some notes on presentation. Those eager for the main content, however uncertain, can skip to section 1.3.)

Some caveats:

- Little if any of the evidence surveyed in this report is particularly conclusive. My aim is not to settle the question, but to inform analysis and decision-making that must proceed in the absence of conclusive evidence, and to lay groundwork for future work.

- I am not an expert in neuroscience, computer science, or physics (my academic background is in philosophy).

- I sought out a variety of expert perspectives, but I did not make a rigorous attempt to ensure that the experts I spoke to were a representative sample of opinion in the field. Various selection effects influencing who I interviewed plausibly correlate with sympathy towards lower FLOP/s requirements.8

- For various reasons, the research approach used here differs from what might be expected in other contexts. Key differences include:

- I give weight to intuitions and speculations offered by experts, as well as to factual claims by experts that I have not independently verified (these are generally documented in conversation notes approved by the experts themselves).

- I report provisional impressions from initial research.

- My literature reviews on relevant sub-topics are not comprehensive.

- I discuss unpublished papers where they appear credible.

- My conclusions emerge from my own subjective synthesis of the evidence I engaged with.

- There are ongoing questions about the baseline reliability of various kinds of published research in neuroscience and cognitive science.9 I don’t engage with this issue explicitly, but it is an additional source of uncertainty.

A few other notes on presentation:

- I have tried to keep the report accessible to readers with a variety of backgrounds.

- The endnotes are frequent and sometimes lengthy, and they contain more quotes and descriptions of my research process than is academically standard. This is out of an effort to make the report’s reasoning transparent to readers. However, the endnotes are not essential to the main content, and I suggest only reading them if you’re interested in more details about a particular point.

- I draw heavily on non-verbatim notes from my conversations with experts, made public with their approval and cited/linked in endnotes. These notes are also available here.

- I occasionally use the word “compute” as a shorthand for “computational power.”

- Throughout the rest of the report, I use a form of scientific notation, in which “XeY” means “X×10^Y.” Thus, 1e6 means 1,000,000 (a million); 4e8 means 400,000,000 (four hundred million); and so on. I also round aggressively.
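The “XeY” notation matches the float literal syntax of most programming languages, so it can be checked directly. The short sketch below uses Python; the helper `round_to_one_digit` is a hypothetical name, and rounding to a single significant digit is only one possible reading of “round aggressively.”

```python
def round_to_one_digit(value):
    """Round a number to one significant digit by formatting it in e-notation."""
    return float(f"{value:.0e}")


# "XeY" literals are read as X times 10 to the power Y.
assert 1e6 == 1_000_000
assert 4e8 == 400_000_000

print(f"{3_700_000_000:.0e}")            # e-notation with one significant digit
print(round_to_one_digit(3_700_000_000))  # the same value as a float
```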

1.3 Context

(This section briefly describes what prompts Open Philanthropy’s interest in the topic of this report. Those primarily interested in the main content can skip to Section 1.4.)

This report is part of a broader effort at Open Philanthropy to investigate when advanced AI systems might be developed (“AI timelines”) – a question that we think decision-relevant for our grant-making related to potential risks from advanced AI.10 But why would an interest in AI timelines prompt an interest in the topic of this report in particular?

Some classic analyses of AI timelines (notably, by Hans Moravec and Ray Kurzweil) emphasize forecasts about when available computer hardware will be “equivalent,” in some sense (see section 1.6 for discussion), to the human brain.11

A basic objection to predicting AI timelines on this basis alone is that you need more than hardware to do what the brain does.12 In particular, you need software to run on your hardware, and creating the right software might be very hard (Moravec and Kurzweil both recognize this, and appeal to further arguments).13

In the context of machine learning, we can offer a more specific version of this objection: the hardware required to run an AI system isn’t enough; you also need the hardware required to train it (along with other resources, like data).14 And training a system requires running it a lot. DeepMind’s AlphaGo Zero, for example, trained on ~5 million games of Go.15

Note, though, that depending on what sorts of task-performance will result from what sorts of training, a framework for thinking about AI timelines that incorporated training requirements would start, at least, to incorporate and quantify the difficulty of creating the right software more broadly.16 This is because training turns computation, data, and other resources into software you wouldn’t otherwise know how to make.

What’s more, the hardware required to train a system is related to the hardware required to run it.17 This relationship is central to Open Philanthropy’s interest in the topic of this report, and to an investigation my colleague Ajeya Cotra has been conducting, which draws on my analysis. That investigation focuses on what brain-related FLOP/s estimates, along with other estimates and assumptions, might tell us about when it will be feasible to train different types of AI systems. I don’t discuss this question here, but it’s an important part of the context. And in that context, brain-related hardware estimates play a different role than they do in forecasts like Moravec’s and Kurzweil’s.

1.4 FLOP/s basics

(This section discusses what FLOP/s are, and why I chose to focus on them. Readers familiar with FLOP/s and happy with this choice can skip to Section 1.5.)

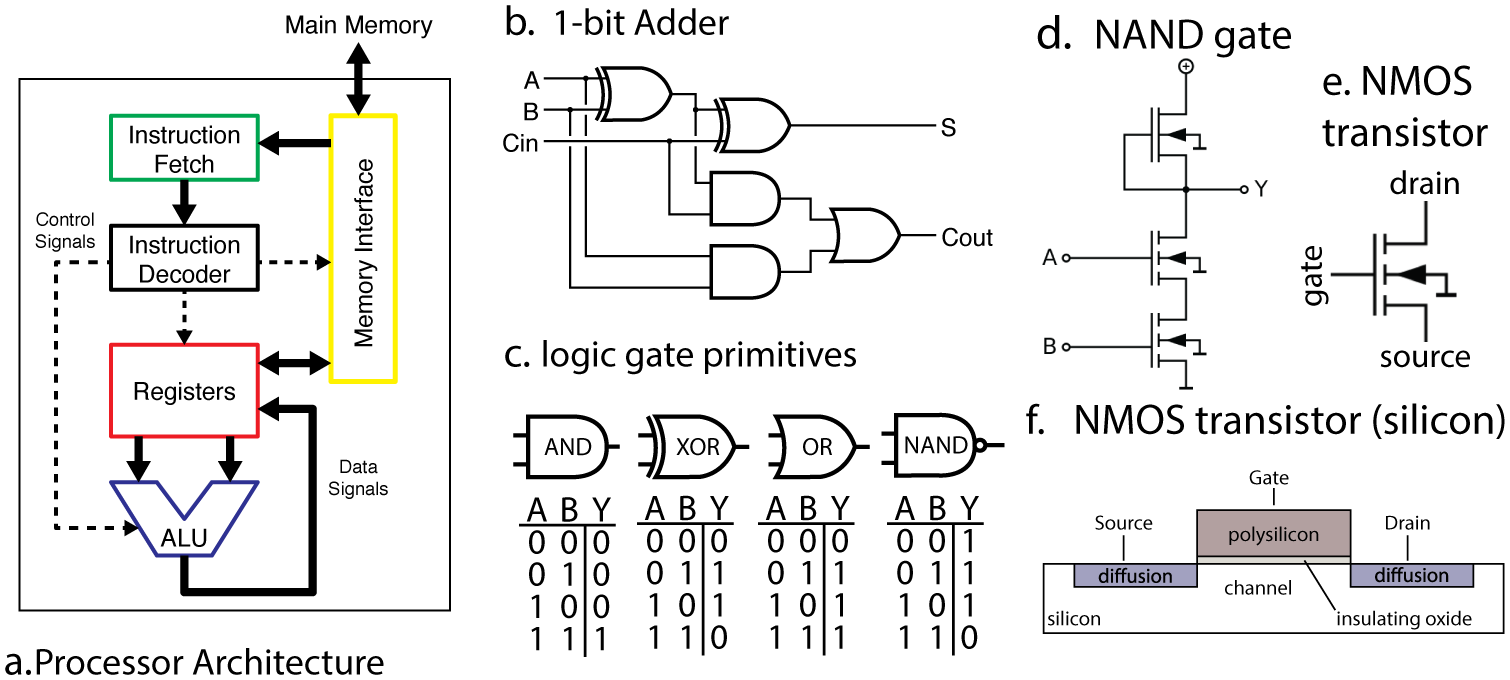

Computational power is multidimensional – encompassing, for example, the number and type of operations performed per second, the amount of memory stored at different levels of accessibility, and the speed with which information can be accessed and sent to different locations.18

This report focuses on operations per second, and in particular, on “floating point operations.”19 These are arithmetic operations (addition, subtraction, multiplication, division) performed on a pair of floating point numbers – that is, numbers represented as a set of significant digits multiplied by some other number raised to some exponent (like scientific notation). I’ll use “FLOPs” to indicate floating point operations (plural), and “FLOP/s” to indicate floating point operations per second.
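As a concrete illustration of these definitions, the sketch below splits a number into its significand and exponent, and counts the FLOPs in a simple computation. It is a minimal sketch rather than a description of any particular hardware: the function names are hypothetical, and Python's `math.frexp` uses base 2 (as computers typically do), whereas scientific notation uses base 10.

```python
import math


def decompose(x):
    """Split x into (significand, exponent) such that x == significand * 2**exponent."""
    return math.frexp(x)


def dot_product_flops(n):
    """FLOPs in a length-n dot product: n multiplications plus n - 1 additions."""
    return 2 * n - 1


def flops_per_second(flops_per_call, calls_per_second):
    """FLOP/s when a computation costing flops_per_call runs calls_per_second times a second."""
    return flops_per_call * calls_per_second


significand, exponent = decompose(6.5)
print(significand, exponent)  # 6.5 == 0.8125 * 2**3

print(dot_product_flops(1000))
print(flops_per_second(dot_product_flops(1000), 1_000_000))
```

Here a 1,000-element dot product costs 1,999 FLOPs, and running it a million times per second amounts to roughly 2e9 FLOP/s.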

My central reason for focusing on FLOP/s is that various brain-related FLOP/s estimates are key inputs to the framework for thinking about training requirements, mentioned above, that my colleague Ajeya Cotra has been investigating, and they were the focus of Open Philanthropy’s initial exploration of this topic, out of which this report emerged. Focusing on FLOP/s in particular also limits the scope of what is already a fairly broad investigation; and the availability of FLOP/s is one key contributor to recent progress in AI.20

Still, the focus on FLOP/s is a key limitation of this analysis, as other computational resources are just as crucial to task-performance: if you can’t store the information you need, or get it where it needs to be fast enough, then the units in your system that perform FLOPs will be some combination of useless and inefficiently idle.21 Indeed, my understanding is that FLOP/s are often not the relevant bottleneck in various contexts related to AI and brain modeling.22 And further dimensions of an AI system’s implementation, like hardware architecture, can introduce significant overheads, both in FLOP/s and other resources.23

Ultimately, though, once other computational resources are in place, and other overheads have mostly been eliminated or accounted for, you need to actually perform the FLOP/s that a given time-limited computation requires. In order to isolate this quantity, I proceed on the idealizing assumption that non-FLOP resources are available in amounts adequate to make full use of all of the FLOP/s in question (but not in unrealistically extreme abundance), without significant overheads.24 All talk of the “FLOP/s sufficient to X” assumes this caveat.

This means you can’t draw conclusions about which concrete computers can replicate human-level task performance directly from the FLOP/s estimates in this report, even if you think those estimates credible. Such computers will need to meet further constraints.25

Note, as well, that these estimates do not depend on the assumption that the brain performs operations analogous to FLOPs, or on any other similarities between brain architectures and computer architectures.26 The report assumes that the tasks the brain performs can also be performed using a sufficient number of FLOP/s, but the causal structure in the brain that gives rise to task-performance could in principle take a wide variety of unfamiliar forms.

1.5 Neuroscience basics

(This section reviews some of the neural mechanisms I’ll be discussing, in an effort to make the report’s content accessible to readers without a background in neuroscience.27 Those familiar with signaling mechanisms in the brain – neurons, neuromodulators, gap junctions – can skip to Section 1.5.1).

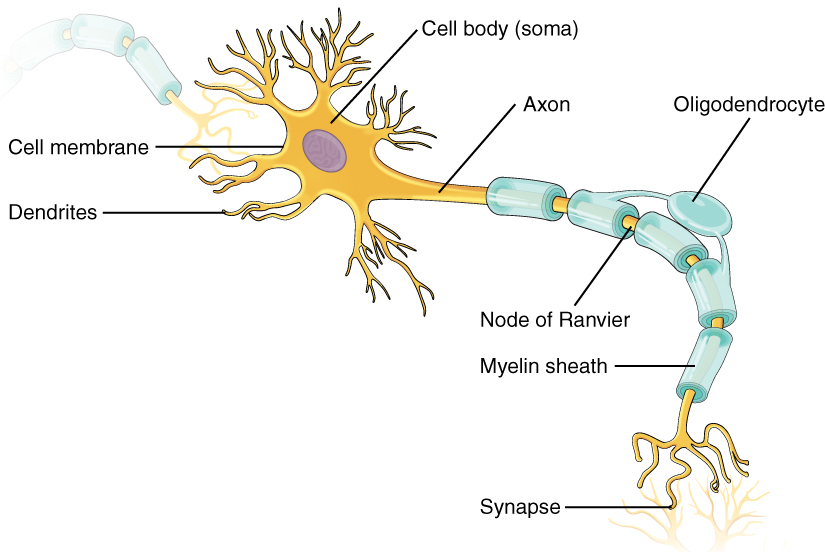

The human brain contains around 100 billion neurons, and roughly the same number of non-neuronal cells.28 Neurons are cells specialized for sending and receiving various types of electrical and chemical signals, and other non-neuronal cells send and receive signals as well.29 These signals allow the brain, together with the rest of the nervous system, to receive and encode sensory information from the environment, to process and store this information, and to output the complex, structured motor behavior constitutive of task performance.30

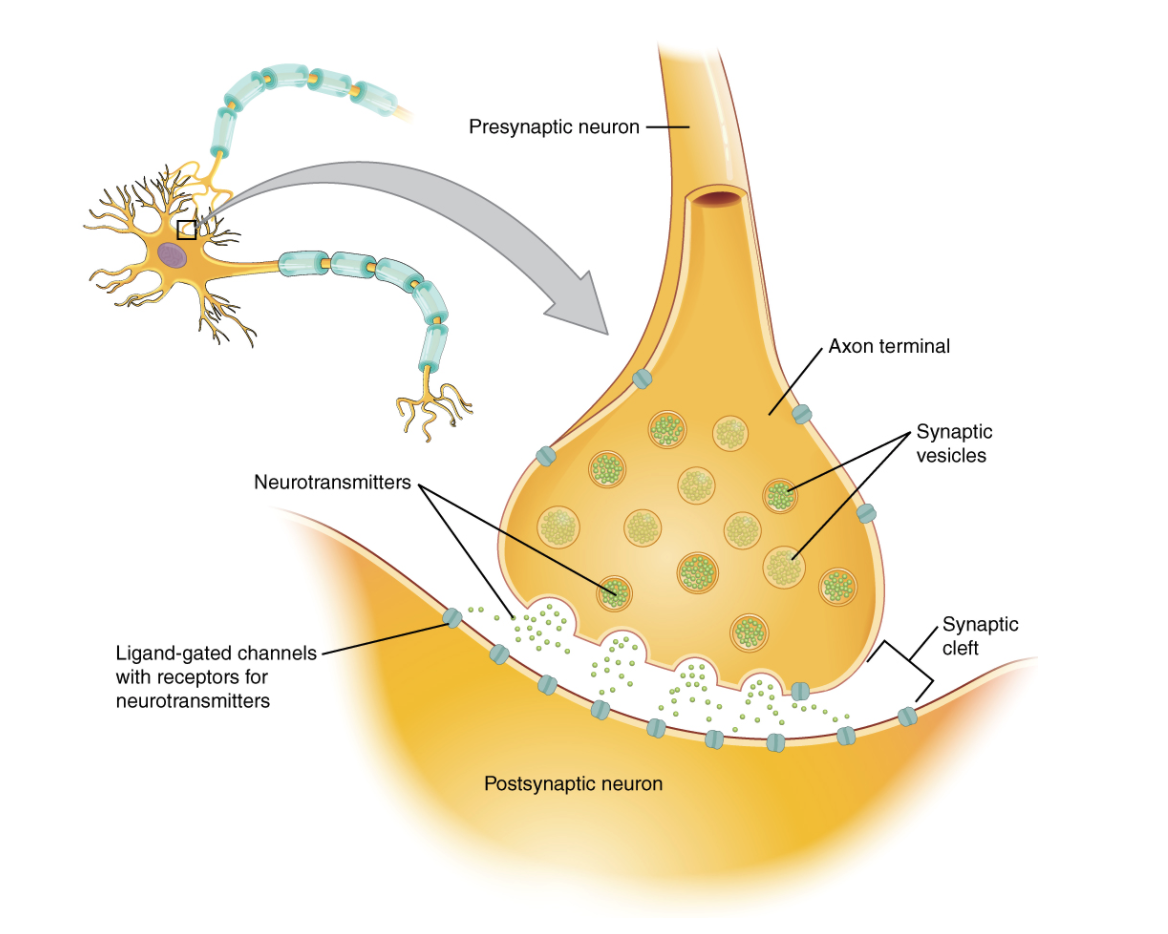

We can divide a typical neuron into three main parts: the soma, the dendrites, and the axon.31 The soma is the main body of the cell. The dendrites are extensions of the cell that branch off from the soma, and which typically receive signals from other neurons. The axon is a long, tail-like projection from the soma, which carries electrical impulses away from the cell body. The end of the axon splits into branches, the ends of which are known as axon terminals, which reach out to connect with other cells at locations called synapses. A typical synapse forms between the axon terminal of one neuron (the presynaptic neuron) and the dendrite of another (the postsynaptic neuron), with a thin zone of separation between them known as the synaptic cleft.32

The cell as a whole is enclosed in a membrane that has various pumps that regulate the concentration of certain ions – such as sodium (Na+), potassium (K+) and chloride (Cl–) – inside it.33 This regulation creates different concentrations of these ions inside and outside the cell, resulting in a difference in the electrical potential across the membrane (the membrane potential).34 The membrane also contains proteins known as ion channels, which, when open, allow certain types of ions to flow into and out of the cell.35

If the membrane potential in a neuron reaches a certain threshold, then a particular set of voltage-gated ion channels open to allow ions to flow into the cell, creating a temporary spike in the membrane potential (an action potential).36 This spike travels down the axon to the axon terminals, where it causes further voltage-gated ion channels to open, allowing an influx of calcium ions into the pre-synaptic axon terminal. This calcium can trigger the release of molecules known as neurotransmitters, which are stored in sacs called vesicles in the axon terminal.37

These vesicles merge with the cell membrane at the synapse, allowing the neurotransmitter they contain to diffuse across the synaptic cleft and bind to receptors on the post-synaptic neuron. These receptors can cause (directly or indirectly, depending on the type of receptor) ion channels on the post-synaptic neuron to open, thereby altering the membrane potential in that area of that cell.38

The expected size of the impact (excitatory or inhibitory) that a spike through a synapse will have on the post-synaptic membrane potential is often summarized via a parameter known as a synaptic weight.40 This weight changes on various timescales, depending on the history of activity in the pre-synaptic and post-synaptic neuron, together with other factors. These changes, along with others that take place within synapses, are grouped under the term synaptic plasticity.41 Other changes also occur in neurons on various timescales, affecting the manner in which neurons respond to synaptic inputs (some of these changes are grouped under the term intrinsic plasticity).42 New synapses, dendritic spines, and neurons also grow over time, and old ones die.43
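The basic story so far (synaptic inputs scaled by weights, a membrane potential that accumulates them, and a spike when a threshold is crossed) can be caricatured in a few lines of code. The sketch below is a textbook-style leaky integrate-and-fire toy, not a model endorsed by this report; the function name, the parameter values, and the discrete time steps are all hypothetical, and it omits plasticity and every other mechanism discussed in this section.

```python
def simulate_lif(input_spikes, weights, threshold=1.0, leak=0.9, reset=0.0):
    """Simulate a toy leaky integrate-and-fire neuron.

    input_spikes: one entry per time step, each a list of 0/1 flags (one per synapse).
    weights: one synaptic weight per synapse (positive = excitatory, negative = inhibitory).
    Returns the indices of the time steps at which the neuron fires.
    """
    potential = reset
    fired_at = []
    for step, spikes in enumerate(input_spikes):
        potential *= leak  # passive decay of the membrane potential
        potential += sum(w * s for w, s in zip(weights, spikes))  # weighted inputs
        if potential >= threshold:
            fired_at.append(step)  # threshold crossed: emit a spike
            potential = reset      # and reset the potential
    return fired_at


# Two excitatory synapses and one inhibitory synapse (arbitrary toy values).
weights = [0.6, 0.6, -0.5]
input_spikes = [
    [1, 0, 0],
    [0, 1, 0],
    [1, 0, 1],
    [0, 1, 0],
    [1, 1, 0],
]
print(simulate_lif(input_spikes, weights))
```

In this toy run the neuron fires only when excitatory inputs arrive close enough together to outpace the leak, and the inhibitory input delays the second spike.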

There are also a variety of other signaling mechanisms in the brain that this basic story does not include. For example:

- Other chemical signals: Neurons can also send and receive other types of chemical signals – for example, molecules known as neuropeptides, and gases like nitric oxide – that can diffuse more broadly through the space in between cells, across cell membranes, or via the blood.44 The chemicals neurons release that influence the activity of groups of neurons (or other cells) are known as neuromodulators.45

- Glial cells: Non-neuronal cells in the brain known as glia have traditionally been thought to mostly perform functions to do with maintenance of brain function, but they may be involved in task-performance as well.46

- Electrical synapses: In addition to the chemical synapses discussed above, there are also electrical synapses that allow direct, fast, and bi-directional exchange of electrical signals between neurons (and between other cells). The channels mediating this type of connection are known as gap junctions.

- Ephaptic effects: Electrical activity in neurons creates electric fields that may impact the electrical properties of neighboring neurons.47

- Other forms of axon signaling: The process of firing an action potential has traditionally been thought of as a binary decision.48 However, some recent evidence indicates that processes within a neuron other than “to fire or not to fire” can matter for synaptic communication.49

- Blood flow: Blood flow in the brain correlates with neural activity, which has led some to suggest that it might be playing a role in information-processing.50

This is not a complete list of all the possible signaling mechanisms that could in principle be operative in the brain.51 But these are some of the most prominent.

1.5.1 Uncertainty in neuroscience

I want to emphasize one other meta-point about neuroscience: namely, that our current understanding of how the brain processes information is extremely limited.52 This was a consistent theme in my conversations with experts, and one of my clearest take-aways from the investigation as a whole.53

One problem is that we need better tools. For example:

- Despite advances, we can only record the spiking activity of a limited number of neurons at the same time (techniques like fMRI and EEG are much lower resolution).54

- We can’t record from all of a neuron’s synapses or dendrites simultaneously, making it hard to know what patterns of overall synaptic input and dendritic activity actually occur in vivo.55

- We also can’t stimulate all of a neuron’s synapses and/or dendrites simultaneously, making it hard to know how the cell responds to different inputs (and hence, which models can capture these responses).56

- Techniques for measuring many lower-level biophysical mechanisms and processes, such as possible forms of ion channel plasticity, remain very limited.57

- Results in model animals may not generalize to e.g. humans.58

- Results obtained in vitro (that is, in a petri dish) may not generalize in vivo (that is, in a live functioning brain).59

- The tasks we can give model animals like rats to perform are generally very simple, and so provide limited evidence about more complex behavior.60

Tools also constrain concepts. If we can’t see or manipulate something, it’s unlikely to feature in our theories.61 And certain models of e.g. neurons may receive scant attention simply because they are too computation-intensive to work with, or too difficult to constrain with available data.62

But tools aren’t the only problem. For example, when Jonas and Kording (2017) examined a simulated 6502 microprocessor – a system whose processing they could observe and manipulate to arbitrary degrees – using analogues of standard neuroscientific approaches, they found that “the approaches reveal interesting structure in the data but do not meaningfully describe the hierarchy of information processing in the microprocessor” (p. 1).63 And artificial neural networks that perform complex tasks are difficult (though not necessarily impossible) to interpret, despite similarly ideal experimental access.64

We also don’t know what high-level task most neural circuits are performing, especially outside of peripheral sensory/motor systems. This makes it very hard to say what models of such circuits are adequate.65

It would help if we had full functional models of the nervous systems of some simple animals. But we don’t.66 For example, the nematode worm Caenorhabditis elegans (C. elegans) has only 302 neurons, and a map of the connections between these neurons (the connectome) has been available since 1986.67 But we have yet to build a simulated C. elegans that behaves like the real worm across a wide range of contexts.68

All this counsels pessimism about the robustness of FLOP/s estimates based on our current neuroscientific understanding. And it increases the relevance of where we place the burden of proof. If we start with a strong default view about the complexity of the brain’s task-performance, and then demand proof to the contrary, our standards are unlikely to be met.

Indeed, my impression is that various “defaults” in this respect play a central role in how experts approach this topic. Some take simple models that have had some success as a default, and then ask whether we have strong reason to think additional complexity necessary;69 others take the brain’s biophysical complexity as a default, and then ask if we have strong reason to think that a given type of simplification captures everything that matters.70

Note the distinction, though, between how we should do neuroscience, and how we should bet now about where such science will ultimately lead, assuming we had to bet. The former question is most relevant to neuroscientists; but the latter is what matters here.

1.6 Clarifying the question

Consider the set of cognitive tasks that the human brain can perform, where task performance is understood as the implementation of a specified type of relationship between a set of inputs and a set of outputs.71 Examples of such tasks might include:

- Reading an English-language description of a complex software problem, and, within an hour, outputting code that solves that problem.72

- Reading a randomly selected paper submitted to the journal Nature, and, within a week, outputting a review of the paper of quality comparable to an average peer-reviewer.73

- Reading newly-generated Putnam Math competition problems, and, within six hours, outputting answers that would receive a perfect score by standard judging criteria.74

Defining tasks precisely can be arduous. I’ll assume such precision is attainable, but I won’t try to attain it, since little in what follows depends on the details of the tasks in question. I’ll also drop the adjective “cognitive” in what follows.

I will also assume that sufficiently powerful computers can in principle perform these tasks (I focus solely on non-quantum computers – see endnote for discussion of quantum brain hypotheses).75 This assumption is widely shared both within the scientific community and beyond it. Some dispute it, but I won’t defend it here.76

The aim of the report is to evaluate the extent to which the brain provides evidence, for some number of FLOP/s F, that for any task T that the human brain can perform, T can be performed with F.77 As a proxy for FLOP/s numbers with this property, I will sometimes talk about the FLOP/s sufficient to run a “task-functional model,” by which I mean a computational model that replicates a generic human brain’s task-performance. Of course, some brains can do things others can’t, but I’ll assume that at the level of precision relevant to this report, human brains are roughly similar, and hence that if F FLOP/s is enough to replicate the task performance of a generic human brain, roughly F is enough to replicate any task T the human brain can perform.78

The project here is related to, but distinct from, directly estimating the minimum FLOP/s sufficient to perform any task the brain can perform. Here’s an analogy. Suppose you want to build a bridge across the local river, and you’re wondering if you have enough bricks. You know of only one such bridge (the “old bridge”), so it’s natural to look there for evidence. If the old bridge is made of bricks, you could count them. If it’s made of something else, like steel, you could try to figure out how many bricks you need to do what a given amount of steel does. If successful, you’ll end up confident that e.g. 100,000 bricks is enough to build such a bridge, and hence that the minimum is less than this. But how much less is still unclear. You studied an example bridge, but you didn’t derive theoretical limits on the efficiency of bridge-building.

That said, Dr. Paul Christiano expected there to be at least some tasks such that (a) the brain’s methods of performing them are close to maximally efficient, and (b) these methods use most of the brain’s resources (see endnote).79 I don’t investigate this claim here, but if true, it would make data about the brain more directly relevant to the minimum adequate FLOP/s budget.

The project here is also distinct from estimating the FLOP/s “equivalent” to the human brain. As I discuss in the report’s appendix, I think the notion of “the FLOP/s equivalent to the brain” requires clarification: there are a variety of importantly different concepts in the vicinity.

To get a flavor of this, consider the bridge analogy again, but assume that the old bridge is made of steel. What number of bricks would be “equivalent” to the old bridge? The question seems ill-posed. It’s not that bridges can’t be built from bricks. But we need to say more about what we want to know.

I group the salient possible concepts of the “FLOP/s equivalent to the human brain” into four categories:

- FLOP/s required for task-performance, with no further constraints on how the tasks need to be performed.80

- FLOP/s required for task-performance + brain-like-ness constraints – that is, constraints on the similarity between how the AI system does it, and how the brain does it.

- FLOP/s required for task-performance + findability constraints – that is, constraints on what sorts of training processes and engineering efforts would be able to create the AI system in question.

- Other analogies with human-engineered computers.

All these categories have their own problems (see section A.5 for a summary chart). The first is closest to the report’s focus, but as just noted, it’s hard (at least absent further assumptions) to estimate directly using example systems. The second faces the problem of identifying a non-arbitrary brain-like-ness constraint that picks out a unique number of FLOP/s, without becoming too much like the first. The third brings in a lot of additional questions about what sorts of systems are what sorts of findable. And the fourth, I suggest, either collapses into the first or second, or raises its own questions.

In the hopes of avoiding some of these problems, I have kept the report’s framework broad. The brain-based FLOP/s budgets I’m interested in don’t need to be uniquely “equivalent” to the brain, or as small as theoretically possible, or accommodating of any constraints on brain-like-ness or findability. They just need to be big enough, in principle, to perform the tasks in question.

A few other clarifications:

- Properties construed as consisting in something other than the implementation of a certain type of input-output relationship (for example, properties like phenomenal consciousness, moral patienthood, or continuity with a particular biological human’s personal identity – to the extent they are so construed) are not included in the definition of the type of task-performance I have in mind. Systems that replicate this type of task-performance may or may not also possess such properties, but what matters here are inputs and outputs.81

- Many tasks require more than a brain. For example, they may require something like a body, or rely partly on information-processing taking place outside the brain.82 In those cases, I’m interested in the FLOP/s sufficient to replicate the brain’s role.

1.7 Existing literature

(This section reviews existing literature.83 Those interested primarily in the report’s substantive content can skip to Section 2.)

A lot of existing research is relevant to estimating the FLOP/s sufficient to run a task-functional model. But efforts in the mainstream academic literature to address this question directly are comparatively rare (a fact that this report does not alter). Many existing estimates are informal, and they often do not attempt much justification of their methods or background assumptions. The specific question they consider also varies, and their credibility varies widely.84

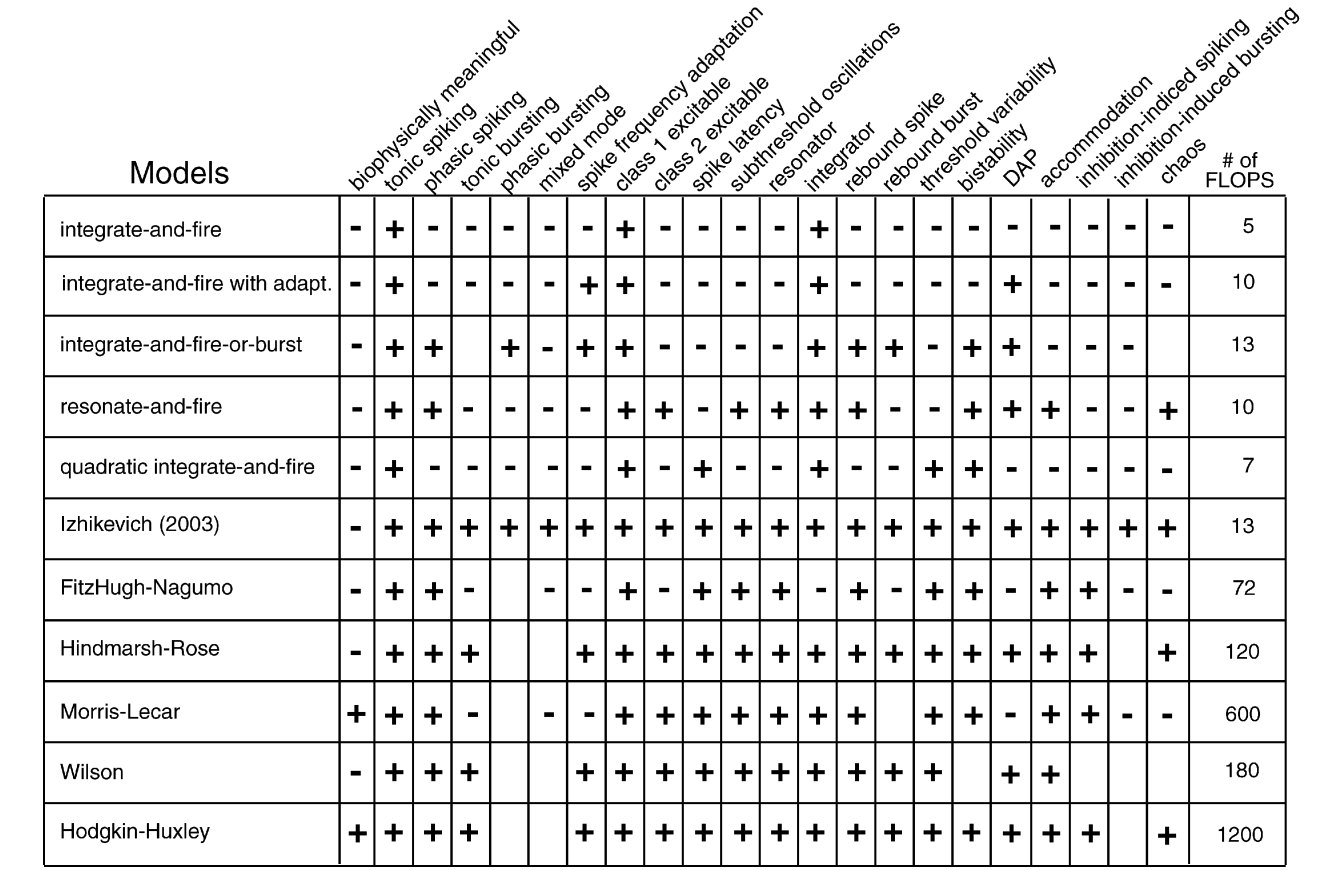

1.7.1 Mechanistic method estimates

The most common approach assigns a unit of computation (such as a calculation, a number of bits, or a possibly brain-specific operation) to a spike through a synapse, and then estimates the rate of spikes through synapses by multiplying an estimate of the average firing rate by an estimate of the number of synapses.85 Thus, Merkle (1989),86 Mead (1990),87 Freitas (1996),88 Sarpeshkar (1997),89 Bostrom (1998),90 Kurzweil (1999),91 Dix (2005),92 Malickas (2007),93 and Tegmark (2017)94 are all variations on this theme.95 Their estimates range from ~1e12 to ~1e17 (though using different basic units of computation),96 but the variation results mainly from differences in estimated synapse count and average firing rate, rather than differences in substantive assumptions about how to make estimates of this kind.97 In this sense, the helpfulness of these estimates is strongly correlated: if the basic approach is wrong, none of them are a good guide.

Other estimates use a similar approach, but include more complexity. Sarpeshkar (2010) includes synaptic conductances (see discussion in section 2.1.1.2.2), learning, and firing decisions in a lower bound estimate (6e16 FLOP/s);98 Martins et al. (2012) estimate the information-processing rate of different types of neurons in different regions, for a total of ~5e16 bits/sec in the whole brain;99 and Kurzweil (2005) offers an upper bound estimate for a personality-level simulation of 1e19 calculations per second – an estimate that budgets 1e3 calculations per spike through synapse to capture nonlinear interactions in dendrites.100 Still others attempt estimates based on protein interactions (Thagard (2002), 1e21 calculations/second);101 microtubules (Tuszynski (2006), 1e21 FLOP/s);102 individual neurons (von Neumann (1958), 1e11 bits/second);103 and possible computations performed by dendrites and other neural mechanisms (Dettmers (2015), 1e21 FLOP/s).104

A related set of estimates comes from the literature on brain simulations. Ananthanarayanan et al. (2009) estimates >1e18 FLOP/s to run a real-time human brain simulation;105 Waldrop (2012) cites Henry Markram as estimating 1e18 FLOP/s to run a very detailed simulation;106 Markram, in a 2018 video (18:28), estimates that you’d need ~4e29 FLOP/s to run a “real-time molecular simulation of the human brain”;107 and Eugene Izhikevich estimates that a real-time brain simulation would require ~1e6 processors running at 384 GHz.108

Sandberg and Bostrom (2008) also estimate the FLOP/s requirements for brain emulations at different levels of detail. Their estimates range from 1e15 FLOP/s for an “analog network population model,” to 1e43 FLOP/s for emulating the “stochastic behavior of single molecules.”109 They report that in an informal poll of attendees at a workshop on whole brain emulation, the consensus appeared to be that the required level of resolution would fall between “Spiking neural network” (1e18 FLOP/s), and “Metabolome” (1e25 FLOP/s).110

Despite their differences, I group all of these estimates under the broad heading of the “mechanistic method,” as all of them attempt to identify task-relevant causal structure in the brain’s biological mechanisms, and quantify it in some kind of computational unit.

1.7.2 Functional method estimates

A different class of estimates focuses on the FLOP/s sufficient to replicate the function of some portion of the brain, and then attempts to scale up to an estimate for the brain as a whole (the “functional method”). Moravec (1988), for example, estimates the computation required to do what the retina does (1e9 calculations/second) and then scales up (1e14 calc/s).111 Merkle (1989) performs a similar retina-based calculation and gets 1e12-1e14 ops/sec.112

Kurzweil (2005) offers a functional method estimate (1e14 calcs/s) based on work by Lloyd Watts on sound localization;113 another (1e15 calcs/s) based on a cerebellar simulation at the University of Texas;114 and a third (1e14 calcs/s), in his 2012 book, based on the FLOP/s he estimates is required to emulate what he calls a “pattern recognizer” in the neocortex.115 Drexler (2019) uses the FLOP/s required for various deep learning systems (specifically: Google’s Inception architecture, Deep Speech 2, and Google’s neural machine translation model) to generate various estimates he takes to suggest that 1e15 FLOP/s is sufficient to match the brain’s functional capacity.116

1.7.3 Limit method estimates

Sandberg (2016) uses Landauer’s principle to generate an upper bound of ~2e22 irreversible operations per second in the brain – a methodology I consider in more detail in Section 4.117 De Castro (2013) estimates a similar limit, also from Landauer’s principle, on perceptual operations performed by the parts of the brain involved in rapid, automatic inference (1e23 operations per second).118 I have yet to encounter other attempts to bound the brain’s overall computation via Landauer’s principle,119 though many papers discuss related issues in the brain and in biological systems more broadly.120

1.7.4 Communication method estimates

AI Impacts estimates the communication capacity of the brain (measured as “traversed edges per second” or TEPS), then combines this with an observed ratio of TEPS to FLOP/s in some human-engineered computers, to arrive at an estimate of brain FLOP/s (~1e16-3e17 FLOP/s).121 I discuss methods in this broad category – what I call the “communication method” – in Section 5.

Let’s turn now to evaluating the methods themselves. Rather than looking at all possible ways of applying them, my discussion will focus on what seem to me like the most plausible approaches I’m aware of, and the most important arguments/objections.

2 The mechanistic method

The first method I’ll be discussing – the “mechanistic method” – attempts to estimate the computation required to model the brain’s biological mechanisms at a level of detail adequate to replicate task performance.

Simulating the brain in extreme detail would require enormous amounts of computational power.122 Which details would need to be included in a computational model, and which, if any, could be left out or summarized?

The approach I’ll pursue focuses on signaling between cells. Here, the idea is that for a process occurring in a cell to matter to task-performance, it needs to affect the type of signals (e.g. neurotransmitters, neuromodulators, electrical signals at gap junctions, etc.) that cell sends to other cells.123 Hence, a model of that cell that replicates its signaling behavior (that is, the process of receiving signals, “deciding” what signals to send out, and sending them) would replicate the cell’s role in task-performance, even if it leaves out or summarizes many other processes occurring in the cell. Do that for all the cells in the brain involved in task-performance, and you’ve got a task-functional model.

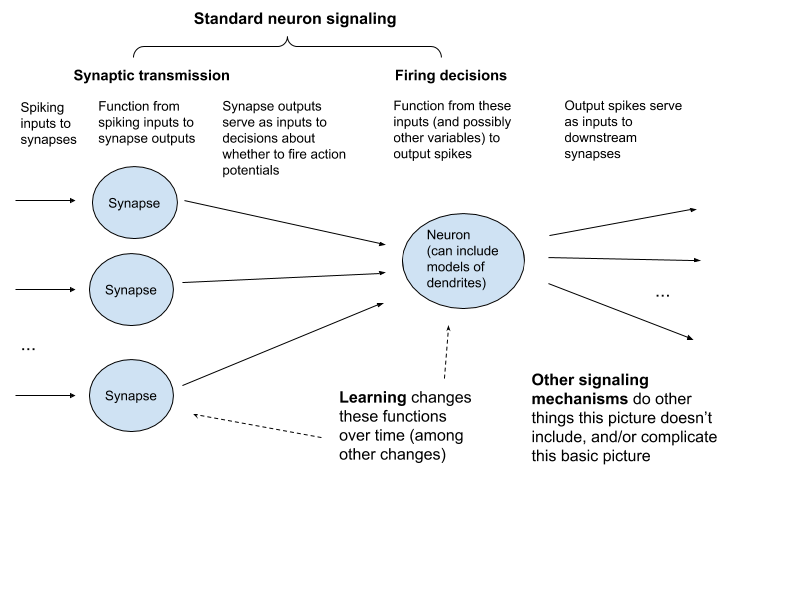

I’ll divide the signaling processes that might need to be modeled into three categories:

1. Standard neuron signaling.124 I’ll divide this into two parts:

   - Synaptic transmission. The signaling process that occurs at a chemical synapse as a result of a spike.

   - Firing decisions. The processes that cause a neuron to spike or not spike, depending on input from chemical synapses and other variables.

2. Learning. Processes involved in learning and memory formation (e.g., synaptic plasticity, intrinsic plasticity, and growth/death of cells and synapses), where not covered by (1).

3. Other signaling mechanisms. Any other signaling mechanisms (neuromodulation, electrical synapses, ephaptic effects, glial signaling, etc.) not covered by (1) or (2).

As a first-pass framework, we can think of synaptic transmission as a function from spiking inputs at synapses to some sort of output impact on the post-synaptic neuron; and of firing decisions as (possibly quite complex) functions that take these impacts as inputs, and then produce spiking outputs – outputs which themselves serve as inputs to downstream synaptic transmission. Learning changes these functions over time (though it can involve other changes as well, like growing new neurons and synapses). Other signaling mechanisms do other things, and/or complicate this basic picture.

This isn’t an ideal carving, but hopefully it’s helpful regardless.125 Here’s the mechanistic method formula that results:

Total FLOP/s = FLOP/s for standard neuron signaling + FLOP/s for learning + FLOP/s for other signaling mechanisms

I’m particularly interested in the following argument:

- (I) You can capture standard neuron signaling and learning with somewhere between ~1e13-1e17 FLOP/s overall.

- (II) This is the bulk of the FLOP/s burden (other processes may be important to task-performance, but they won’t require comparable FLOP/s to capture).

I’ll discuss why one might find (I) and (II) plausible in what follows. I don’t think it at all clear that these claims are true, but they seem plausible to me, partly on the merits of various arguments I’ll discuss, and partly because some of the experts I engaged with were sympathetic (others were less so). I also discuss some ways this range could be too high, and too low.

2.1 Standard neuron signaling

Here is the sub-formula for standard neuron signaling:

FLOP/s for standard neuron signaling = FLOP/s for synaptic transmission + FLOP/s for firing decisions

I’ll budget for each in turn.

2.1.1 Synaptic transmission

Let’s start with synaptic transmission. This occurs as a result of spikes through synapses, so I’ll base this budget on spikes through synapses per second × FLOPs per spike through synapse (I discuss some assumptions this involves below).

2.1.1.1 Spikes through synapses per second

How many spikes through synapses happen per second?

As noted above, the human brain has roughly 100 billion neurons.126 Synapse count appears to be more uncertain,127 but most estimates I’ve seen fall in the range of an average of 1,000-10,000 synapses per neuron, and between 1e14 and 1e15 overall.128

How many spikes arrive at a given synapse per second, on average?

- Maximum neuron firing rates can exceed 100 Hz,129 but in vivo recordings suggest that neurons usually fire at lower rates – between 0.01 and 10 Hz.130

- Experts I engaged with tended to use average firing rates of 1-10 Hz.131

- Energy costs limit spiking. Lennie (2003), for example, uses energy costs to estimate a 0.16 Hz average in the cortex, and 0.94 Hz “using parameters that all tend to underestimate the cost of spikes.”132 He also estimates that “to sustain an average rate of 1.8 spikes/s/neuron would use more energy than is normally consumed by the whole brain” (13 Hz would require more than the whole body).133

- Existing recording methods may bias towards active cells.134 Shoham et al. (2005), for example, suggests that recordings may overlook large numbers of “silent” neurons that fire infrequently (on one estimate for the cat primary visual cortex, >90% of neurons may qualify as “silent”).135

Synthesizing evidence from a number of sources, AI Impacts offers a best guess average of 0.1-2 Hz. This sounds reasonable to me (I give most weight to the metabolic estimates). I’ll use 0.1-1 Hz, partly because Lennie (2003) treats 0.94 Hz as an overestimate, and partly because I’m mostly sticking with order-of-magnitude level precision. This suggests an overall range of ~1e13-1e15 spikes through synapses per second (1e14-1e15 synapses × 0.1-1 spikes per second).136
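The range arithmetic above can be sketched in a few lines. This is only an illustration of the multiplication in the text; the function and variable names are my own, and pairing low ends with low ends (and high with high) is an assumption about how the range is meant to be read.

```python
# Ranges quoted in the text above; the names are illustrative only.
SYNAPSES = (1e14, 1e15)        # total synapse count (low, high)
FIRING_RATE_HZ = (0.1, 1.0)    # average spikes per second arriving at a synapse (low, high)


def spikes_through_synapses_per_second(synapses, rate_hz):
    """Multiply the low ends together and the high ends together."""
    low = synapses[0] * rate_hz[0]
    high = synapses[1] * rate_hz[1]
    return low, high


low, high = spikes_through_synapses_per_second(SYNAPSES, FIRING_RATE_HZ)
print(f"{low:.0e} to {high:.0e} spikes through synapses per second")
```

Swapping in a 10 Hz upper firing rate raises the high end by one order of magnitude, which is the adjustment the following paragraph mentions.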

Note that many of the mechanistic method estimates reviewed in Section 1.7.1 assume a higher average spiking rate, often in the range of 100 Hz.137 For the reasons listed above, I think 100 Hz too high. ~10 Hz seems more possible (though it requires Lennie (2003) to be off by 1-2 orders of magnitude, and my best guess is lower): in that case, we’d add an order of magnitude to the high-end estimates below.

2.1.1.2 FLOPs per spike through synapse

How many FLOPs do we need to capture what matters about the signaling that occurs when a spike arrives at a synapse?

2.1.1.2.1 A simple model

A simple answer is: one FLOP. Why might one think this?

One argument is that in the context of standard neuron signaling (setting aside learning), what matters about a spike through a synapse is that it increases or decreases the post-synaptic membrane potential by a certain amount, corresponding to the synaptic weight. This could be modeled as a single addition operation (e.g., add the synaptic weight to the post-synaptic membrane potential). That is, one FLOP (of some precision, see below).138

We can add several complications without changing this picture much:139

- Some estimates treat a spike through a synapse as multiplication by a synaptic weight. But spikes are binary, so in a framework based on individual spikes, you’re really only “multiplying” the synaptic weight by 0 or 1 (e.g., if the neuron spikes, then multiply the weight by 1, and add it to the post-synaptic membrane potential; otherwise, multiply it by 0, and add the result – 0 – to the post-synaptic membrane potential).

- In artificial neural networks, input neuron activations are sometimes analogized to non-binary spike rates (e.g., average numbers of spikes over some time interval), which are multiplied by synaptic weights and then summed.140 This would be two FLOPs (or one Multiply-Accumulate). But since such rates take multiple spikes to encode, this analogy plausibly suggests less than two FLOPs per spike through synapse.
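To make the FLOP counting in these two cases concrete, here is a minimal sketch. It is not a neuron model: the weights, spike flags, and rates are hypothetical values, and the FLOP counters simply tally the additions and multiplications described above.

```python
def spike_based_input(membrane_potential, weights, spiked):
    """Event-driven update: one addition per synapse that actually received a spike."""
    flops = 0
    for weight, has_spike in zip(weights, spiked):
        if has_spike:  # a binary spike makes "multiply by 1" reduce to a plain addition
            membrane_potential += weight
            flops += 1
    return membrane_potential, flops


def rate_based_input(weights, rates):
    """Rate-style update: one multiply plus one add (a multiply-accumulate) per synapse."""
    total = 0.0
    flops = 0
    for weight, rate in zip(weights, rates):
        total += weight * rate
        flops += 2
    return total, flops


weights = [0.5, -0.25, 1.0, 0.75]     # hypothetical synaptic weights
spiked = [True, False, True, False]   # which synapses received a spike this step
rates = [2.0, 0.0, 1.0, 4.0]          # hypothetical spike counts over some interval

v_spike, flops_spike = spike_based_input(0.0, weights, spiked)
v_rate, flops_rate = rate_based_input(weights, rates)
```

In the rate-based case the two FLOPs per synapse are spread over however many spikes encode each rate, which is why the per-spike cost plausibly comes out below two.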

How precise do these FLOPs need to be?141 That depends on the number of distinguishable synaptic weights/membrane potentials. Here are some relevant estimates:

- Koch (1999) suggests “between 6 and 7 bits of resolution” for variables like neuron membrane potential.142

- Bartol et al. (2015) suggest a minimum of “4.7 bits of information at each synapse” (they don’t estimate a maximum).143

- Sandberg and Bostrom (2008) cite evidence for ~1 bit, 3-5 bits, and 0.25 bits stored at each synapse.144

- Zador (2019) suggests “a few” bits/synapse to specify graded synaptic strengths.145

- Lahiri and Ganguli (2013) suggest that the number of distinguishable synaptic strengths can be “as small as two”146 (though they cite Enoki et al. (2009) as indicating greater precision).147

A standard FLOP is 32 bits, and half-precision is 16 – well in excess of these estimates. Some hardware uses even lower-precision operations, which may come closer. I’d guess that 8 bits would be adequate.

If we assume 1 (8-bit) FLOP per spike through synapse, we get an overall estimate of 1e13-1e15 (8-bit) FLOP/s for synaptic transmission. I won’t continue to specify the precision I have in mind in what follows.

2.1.1.2.2 Possible complications

Here are a few complications this simple model leaves out.

Stochasticity

Real chemical synaptic transmission is stochastic. Each vesicle of neurotransmitter has a certain probability of release, conditional on a spike arriving at the synapse, resulting in variation in synaptic efficacy across trials.148 This isn’t necessarily a design defect. Noise in the brain may have benefits,149 and we know that the brain can make synapses reliable.150

Would capturing the contribution of this stochasticity to task performance require many extra FLOP/s, relative to a deterministic model? My guess is no.

- The relevant probability distribution (a binomial distribution, according to Siegelbaum et al. (2013c), (p. 270)), appears to be fairly simple, and Dr. Paul Christiano, one of our technical advisors, thought that sampling from an approximation of such a distribution would be cheap.151

- My background impression is that in designing systems for processing information, adding noise is easy; limiting noise is hard (though this doesn’t translate directly into a FLOPs number).

- Despite the possible benefits of noise, my guess is that the brain’s widespread use of stochastic synapses has a lot to do with resource constraints (more reliable synapses require more neurotransmitter release sites).152

- Many neural network models don’t include this stochasticity.153
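As a rough illustration of why sampling might be cheap, the sketch below draws the number of vesicles released on a single spike from a binomial distribution by making one Bernoulli draw per release site. The number of release sites, the release probability, and the per-vesicle weight are hypothetical values chosen for the example, not figures from the sources cited here, and this direct sampler is just one simple option rather than the approximation Dr. Christiano had in mind.

```python
import random


def sample_released_vesicles(n_sites, p_release, rng):
    """Binomial(n_sites, p_release) draw: one uniform sample and comparison per release site."""
    return sum(1 for _ in range(n_sites) if rng.random() < p_release)


def stochastic_synaptic_input(weight_per_vesicle, n_sites, p_release, rng):
    """Post-synaptic impact of one spike, scaled by how many vesicles were released."""
    return weight_per_vesicle * sample_released_vesicles(n_sites, p_release, rng)


rng = random.Random(0)                 # seeded so the example is reproducible
N_SITES, P_RELEASE = 5, 0.3            # hypothetical synapse parameters
samples = [sample_released_vesicles(N_SITES, P_RELEASE, rng) for _ in range(10_000)]
mean_released = sum(samples) / len(samples)   # should be near N_SITES * P_RELEASE
```

The per-spike cost here is a handful of random draws and comparisons, which would stay within the same order of magnitude as the deterministic model if the number of release sites is small.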

That said, one expert I spoke with (Prof. Erik De Schutter) thought it an open question whether the brain manipulates synaptic stochasticity in computationally complex ways.154

Synaptic conductances

The ease with which ions can flow into the post-synaptic cell at a given synapse (also known as the synaptic conductance) changes over time as the ion channels activated by synaptic transmission open and close.155 The simple “addition” model above doesn’t include this – rather, it summarizes the impact of a spike through synapse as a single, instantaneous increase or decrease to post-synaptic membrane potential.

Sarpeshkar (2010), however, appears to treat the temporal dynamics of synaptic conductances as central to the computational function of synapses.156 He assumes, as a lower bound, that “the 20 ms second-order filter response due to each synapse is 40 FLOPs,” and that such operations occur on every spike.157

I’m not sure exactly what Sarpeshkar (2010) has in mind here, but it seems plausible to me that the temporal dynamics of a neuron’s synaptic conductances can influence membrane potential, and hence spike timing, in task-relevant ways.158 One expert also emphasized the complications to neuron behavior introduced by the conductance created by a particular type of post-synaptic receptor called an NMDA-receptor – conductances that Beniaguev et al. (2020) suggest may substantially increase the complexity of a neuron’s I/O (see discussion in Section 2.1.1.2).159 That said, two experts thought it likely that synaptic conductances could either be summarized fairly easily or left out entirely.160

Sparse FLOPs and time-steps per synapse

Estimates based on spikes through synapses assume that you don’t need to budget any FLOPs for when a synapse doesn’t receive a spike, but could have. Call this the “sparse FLOPs assumption.”161 In current neural network implementations, the analogous situation (e.g., artificial neuron activations of 0) creates inefficiencies, which some new hardware designs aim to avoid.162 But this seems more like an engineering challenge than a fundamental feature of the brain’s task-performance.

Note, though, that for some types of brain simulation, budgets would be based on time-steps per synapse instead, regardless of what is actually happening at the synapse over that time. Thus, for a simulation of 1e14-1e15 synapses run at 1 ms resolution (1000 time-steps per second), you’d get 1e17-1e18 synapse time-steps per second – a number that would then be multiplied by your FLOPs budget per time-step at each synapse; and smaller time-steps would yield higher numbers. Not all brain simulations do this (see, e.g., Ananthanarayanan et al. (2009), who simulate time-steps at neurons, but events at synapses),163 but various experts use it as a default methodology.164
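A short sketch of this time-step-based accounting, for comparison with the spike-based one. The synapse counts and the 1 ms resolution come from the text; the `flops_per_step` argument is a free parameter for illustration, not a value the report commits to.

```python
SYNAPSES = (1e14, 1e15)   # total synapse count (low, high), as in the text


def synapse_timesteps_per_second(synapses, timestep_ms):
    """Number of (synapse, time-step) pairs a clock-driven simulation visits per second."""
    steps_per_second = 1000.0 / timestep_ms
    return synapses[0] * steps_per_second, synapses[1] * steps_per_second


def timestep_based_budget(synapses, timestep_ms, flops_per_step):
    """FLOP/s if every synapse costs flops_per_step on every time-step, spike or no spike."""
    low, high = synapse_timesteps_per_second(synapses, timestep_ms)
    return low * flops_per_step, high * flops_per_step


steps_1ms = synapse_timesteps_per_second(SYNAPSES, timestep_ms=1.0)
steps_01ms = synapse_timesteps_per_second(SYNAPSES, timestep_ms=0.1)
budget_1ms_1flop = timestep_based_budget(SYNAPSES, timestep_ms=1.0, flops_per_step=1)
```

At 1 ms resolution this gives 1e17-1e18 synapse time-steps per second, and a 0.1 ms step multiplies that by ten.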

Going forward, I’ll assume that on simple models of synaptic transmission where the synaptic weight is not changing during time-steps without spikes, we don’t need to budget any FLOPs for those time-steps (the budgets for different forms of synaptic plasticity are a different story, and will be covered in the learning section). If this is wrong, though, it could increase budgets by a few orders of magnitude (see Section 2.4.1).

Others

There are likely many other candidate complications that the simple model discussed above does not include. There is intricate molecular machinery located at synapses, much of which is still not well-understood. Some of this may play a role in synaptic plasticity (see Section 2.2 below), or just in maintaining a single synaptic weight (itself a substantive task), but some may be relevant to standard neuron signaling as well.165

Higher-end estimate

I’ll use 100 FLOPs per spike through synapse as a higher-end FLOP/s budget for synaptic transmission. This would at least cover Sarpeshkar’s 40 FLOP estimate, and provide some cushion for other things I might be missing, including some more complex manipulations of synaptic stochasticity.

With 1 FLOP per spike through synapse as a low-end, and 100 FLOPs as a high end, we get 1e13-1e17 FLOP/s overall. Firing rate models might suggest lower numbers; other complexities and unknowns, along with estimates based on time-steps rather than spikes, higher numbers.
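The overall range for synaptic transmission is just the product of the two ranges already given. A minimal sketch, with names of my own choosing:

```python
SPIKES_PER_SECOND = (1e13, 1e15)   # spikes through synapses per second (low, high)
FLOPS_PER_SPIKE = (1, 100)         # low-end and higher-end budgets per spike through synapse


def synaptic_transmission_budget(spikes_per_second, flops_per_spike):
    """Pair the low ends and the high ends to get a FLOP/s range."""
    low = spikes_per_second[0] * flops_per_spike[0]
    high = spikes_per_second[1] * flops_per_spike[1]
    return low, high


syn_low, syn_high = synaptic_transmission_budget(SPIKES_PER_SECOND, FLOPS_PER_SPIKE)
print(f"{syn_low:.0e} to {syn_high:.0e} FLOP/s for synaptic transmission")
```

The four orders of magnitude in the result come equally from uncertainty about spike counts (two orders) and about FLOPs per spike (two orders).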

2.1.2 Firing decisions

The other component of standard neuron signaling is firing decisions, understood as mappings from synaptic inputs to spiking outputs.

One might initially think these likely irrelevant: there are 3-4 orders of magnitude more synapses than neurons, so one might expect events at synapses to dominate the FLOP/s burden.166 But as just noted, we’re counting FLOPs at synapses based on spikes, not time-steps. Depending on the temporal resolution we use (this varies across models), the number of time-steps per second (often ≥1000) plausibly exceeds the average firing rate (~0.1-1 Hz) by 3-4 orders of magnitude as well. Thus, if we need to compute firing decisions every time-step, or just generally more frequently than the average firing rate, this could make up for the difference between neuron and synapse count (I discuss this more in Section 2.1.2.5). And firing decisions could be more complex than synaptic transmission for other reasons as well.
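The comparison can be made per neuron. The sketch below uses the synapses-per-neuron and firing-rate ranges from Section 2.1.1.1 and assumes, for illustration only, that a firing decision is computed once per 1 ms time-step.

```python
import math

SYNAPSES_PER_NEURON = (1e3, 1e4)   # average synapses per neuron (low, high)
FIRING_RATE_HZ = (0.1, 1.0)        # average spikes per second arriving at a synapse
TIMESTEPS_PER_SECOND = 1000        # assumed 1 ms resolution for firing decisions

# Spike-driven synaptic events per neuron per second (low, high).
synaptic_events = (
    SYNAPSES_PER_NEURON[0] * FIRING_RATE_HZ[0],
    SYNAPSES_PER_NEURON[1] * FIRING_RATE_HZ[1],
)

# How many orders of magnitude the time-step rate exceeds the firing rate by (low, high).
rate_gap_orders = (
    math.log10(TIMESTEPS_PER_SECOND / FIRING_RATE_HZ[1]),
    math.log10(TIMESTEPS_PER_SECOND / FIRING_RATE_HZ[0]),
)

print(f"synaptic events per neuron per second: {synaptic_events[0]:.0f} to {synaptic_events[1]:.0f}")
print(f"firing-decision updates per neuron per second: {TIMESTEPS_PER_SECOND}")
```

On these assumptions a neuron sees roughly 100 to 10,000 spike-driven synaptic events per second against 1,000 firing-decision updates, so neither term obviously dominates before the FLOPs per event or per update are taken into account.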

Neuroscientists implement firing decisions using neuron models that can vary enormously in their complexity and biological realism. Herz et al. (2006) group these models into five rough categories:167

- Detailed compartmental models. These attempt detailed reconstruction of a neuron’s physical structure and the electrical properties of its dendritic tree. This tree is modeled using many different “compartments” that can each have different membrane potentials.

- Reduced compartmental models. These include fewer distinct compartments, but still more than one.

- Single compartment models. These ignore the spatial structure of the neuron entirely and focus on the impact of input currents on the membrane potential in a single compartment.

  - The Hodgkin-Huxley model, a classic model in neuroscience, is a paradigm example of a single compartment model. It models different ionic conductances in the neuron using a series of differential equations. According to Izhikevich (2004), it requires ~120 FLOPs per 0.1 ms of simulation – ~1e6 FLOP/s overall.168

  - My understanding is that “integrate-and-fire”-type models – another classic neuron model, but much more simplified – would also fall into this category. Izhikevich (2004) suggests that these require ~5-13 FLOPs per ms per cell, 5000-13,000 FLOP/s overall.169

- Cascade models. These models abstract away from ionic conductances, and instead attempt to model a neuron’s input-output mapping using a series of higher-level linear and non-linear mathematical operations, together with sources of noise. The “neurons” used in contemporary deep learning can be seen as variants of models in this category.170 These cascade models can also incorporate operations meant to capture transformations of synaptic inputs that occur in dendrites.171

- Black box models. These neglect biological mechanisms altogether.
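The per-cell FLOP/s figures quoted above for single compartment models follow from converting FLOPs per simulation step into FLOPs per second of simulated time. A minimal sketch of that conversion, using the Izhikevich (2004) figures cited in the list (the function name is my own):

```python
def flops_per_second_per_cell(flops_per_step, step_ms):
    """Convert a per-step FLOP cost into FLOP/s for real-time simulation of one cell."""
    steps_per_second = 1000.0 / step_ms
    return flops_per_step * steps_per_second


hodgkin_huxley = flops_per_second_per_cell(120, step_ms=0.1)        # ~1.2e6 FLOP/s
integrate_and_fire_low = flops_per_second_per_cell(5, step_ms=1.0)  # 5,000 FLOP/s
integrate_and_fire_high = flops_per_second_per_cell(13, step_ms=1.0)  # 13,000 FLOP/s

print(f"Hodgkin-Huxley: ~{hodgkin_huxley:.1e} FLOP/s per cell")
print(f"Integrate-and-fire: {integrate_and_fire_low:.0f} to {integrate_and_fire_high:.0f} FLOP/s per cell")
```

Much of the gap between the two models comes from the step size rather than the per-step cost: the Hodgkin-Huxley figure assumes ten times as many steps per second.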

Prof. Erik De Schutter also mentioned that greater computing power has made even more biophysically realistic models available.172 And models can in principle be arbitrarily detailed.

Which of these models (if any) would be adequate to capture what matters about firing decisions? I’ll consider four categories of evidence: the predictive success of different neuron models; some specific arguments about the computational power of dendrites; a collection of other considerations; and expert opinion/practice.

2.1.2.1 Predicting neuron behavior

Let’s first look at the success different models have had in predicting neuron spike patterns.

2.1.2.1.1 Standards of accuracy

How accurate do these predictions need to be? The question is still open.

In particular, debate in neuroscience continues about whether and when to focus on spike rates (e.g., the average number of spikes over a given period), vs. the timings of individual spikes.173

- Many results in neuroscience focus on rates,174 as do certain neural prostheses.175

- In some contexts, it’s fairly clear that spike timings can be temporally precise.176

- One common argument for rates appeals to variability in a neuron’s response to repeated exposure to the same stimulus.177 My impression is that this argument is not straightforward to make rigorous, but it seems generally plausible to me that if rates are less variable than timings, they are also better suited to information-processing.178

- A related argument is that in networks of artificial spiking neurons, adding a single spike results in very different overall behavior.179 This plausibly speaks against very precisely-timed spiking in the brain, since the brain is robust to forms of noise that can shift spike timings180 as well as to our adding spikes to biological networks.181

My current guess is that in many contexts, but not all, spike rates are sufficient.

Even if we settled this debate, though, we’d still need to know how accurately the relevant rates/timings would need to be predicted.182 Here, a basic problem is that in many cases, we don’t know what tasks a neuron is involved in performing, or what role it’s playing. So we can’t validate a model by showing that it suffices to reproduce a given neuron’s role in task-performance – the test we actually care about.183

In the absence of such validation, one approach is to try to limit the model’s prediction error to within the trial-by-trial variability exhibited by the biological neuron.184 But if you can’t identify and control all task-relevant inputs to the cell, it’s not always clear what variability is or is not task-relevant.185

Nor is it clear how much progress a given degree of predictive success represents.186 Consider an analogy with human speech. I might be able to predict many aspects of human conversation using high-level statistics about common sounds, volume variations, turn-taking, and so forth, without actually being able to replicate or generate meaningful sentences. Neuron models with some predictive success might be similarly off the mark (and similar meanings could also presumably be encoded in different ways: e.g., “hello,” “good day,” “greetings,” etc.).187

2.1.2.1.2 Existing results

With these uncertainties in mind, let’s look at some existing efforts to predict neuron spiking behavior with computational models (these are only samples from a very large literature, which I do not attempt to survey).188

Many of these come with important additional caveats:

- Many model in vitro neuron behavior, which may differ from in vivo behavior in important ways.189

- Some use simpler models to predict the behavior of more detailed models. But we don’t really know how good the detailed models are, either.190

- We are very limited in our ability to collect in vivo data about the spatio-temporal input patterns at dendrites. This makes it hard to tell how models respond to realistic input patterns.191 And we know that certain behaviors (for example, dendritic non-linearities) are only triggered by specific input patterns.192

- We can’t stimulate neurons with arbitrary input patterns. This makes it hard to test their full range of behavior.193

- Models that predict spiking based on current injection into the soma skip whatever complexity might be involved in capturing processing that occurs in dendrites.194

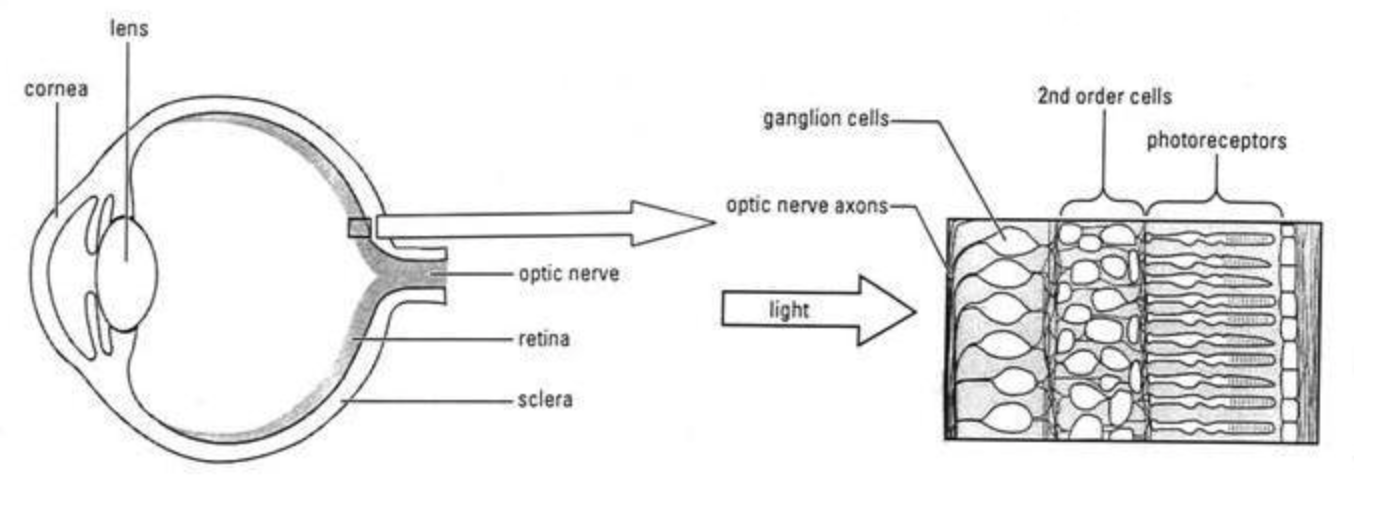

A number of the results I looked at come from the retina, a thin layer of neural tissue in the eye, responsible for the first stage of visual processing. This processing is largely (though not entirely) feedforward:195 the retina receives light signals via a layer of ~100 million photoreceptor cells (rods and cones),196 processes them in two further cell layers, and sends the results to the rest of the brain via spike patterns in the optic nerve – a bundle of roughly a million axons of neurons called retinal ganglion cells.197

I focused on the retina in particular partly because it’s the subject of a prominent functional method estimate in the literature (see Section 3.1.1), and partly because it offers advantages most other neural circuits don’t: we know, broadly, what task it’s performing (initial visual processing); we know what the relevant inputs (light signals) and outputs (optic nerve spike trains) are; and we can measure/manipulate these inputs/outputs with comparative ease.199 That said, as I discuss in Section 3.1.2, it may also be an imperfect guide to the brain as a whole.

Here’s a table with various modeling results that purport to have achieved some degree of success. Most of these I haven’t investigated in detail, and don’t have a clear sense of the significance of the quoted results. And as I discuss in later sections, some of the deep neural network models (e.g., Beniaguev et al. (2020), Maheswaranathan et al. (2019), Batty et al. (2017)) are very FLOP/s intensive (~1e7-1e10 FLOP/s per cell).200 A more exhaustive investigation could estimate the FLOP/s costs of all the listed models, but I won’t do that here.

| SOURCE | MODEL TYPE | THING PREDICTED | STIMULI | RESULTS |
|---|---|---|---|---|
| Beniaguev et al. (2020) | Temporally convolutional network with 7 layers and 128 channels per layer | Spike timing and membrane potential of a detailed model of a Layer 5 cortical pyramidal cell | Random synaptic inputs | “accurately, and very efficiently, capture[s] the I/O of this neuron at the millisecond resolution … For binary spike prediction (Fig. 2D), the AUC is 0.9911. For somatic voltage prediction (Fig. 2E), the RMSE is 0.71mV and 94.6% of the variance is explained by this model” |
| Maheswaranathan et al. (2019) | Three-layer convolutional neural network | Retinal ganglion cell (RGC) spiking in isolated salamander retina | Naturalistic images | >0.7 correlation coefficient (retinal reliability is 0.8) |
| Ujfalussy et al. (2018) | Hierarchical cascade of linear-nonlinear subunits | Membrane potential of in-vivo validated biophysical model of L2/3 pyramidal cell | In vivo-like input patterns | “Linear input integration with a single global dendritic nonlinearity achieved above 90% prediction accuracy.” |
| Batty et al. (2017) | Shared two-layer recurrent network | RGC spiking in isolated primate retina | Natural images | 80% of explainable variance. |
| 2016 talk (39:05) by Markus Meister | Linear-non-linear | RGC spiking (not sure of experimental details) | Naturalistic movie | 80% correlation with real response (cross-trial correlation of real responses was around 85-90%). |
| Naud et al. (2014) | Two compartments, each modeled with a pair of non-linear differential equations and a small number of parameters that approximate the Hodgkin-Huxley equations | In vitro spike timings of layer 5 pyramidal cell | Noisy current injection into the soma and apical dendrite | “The predicted spike trains achieved an averaged coincidence rate of 50%. The scaled coincidence rate obtained by dividing by the intrinsic reliability (Jolivet et al. (2008a); Naud and Gerstner (2012b)) was 72%, which is comparable to the state-of-the performance for purely somatic current injection which reaches up to 76% (Naud et al. (2009)).” |
| Bomash et al. (2013) | Linear-non-linear | RGC spiking in isolated mouse retina | Naturalistic and artificial | “the model cells carry the same amount of information,” “the quality of the information is the same.” |
| Nirenberg and Pandarinath (2012) | Linear-non-linear | RGC spiking in isolated mouse retina | Natural scenes movie | “The firing patterns … closely match those of the normal retina,”; brain would map the artificial spike trains to the same images “90% of the time.” |
| Naud and Gerstner (2012a) | Review of a number of simplified neuron models, including Adaptive Exponential Integrate and Fire (AdEx) and Spike Response Model (SRM) | In vitro spike timings of various neuron types | Simulating realistic conditions in vitro by injecting a fluctuating current into the soma | “Performances are very close to optimal,” considering variation in real neuron responses. “For models like the AdEx or the SRM, [the percentage of predictable spikes predicted] ranged from 60% to 82% for pyramidal neurons, and from 60% to 100% for fast-spiking interneurons.” |
| Gerstner and Naud (2009) | Threshold model | In vivo spiking activity of neuron in the lateral geniculate nucleus (LGN) | Visual stimulation of the retina | Predicted 90.5% of spiking activity |
| Gerstner and Naud (2009) | Integrate-and-fire model with moving threshold | In vitro spike timings of (a) a pyramidal cell, and (b) an interneuron | Random current injection | 59.6% of pyramidal cell spikes, 81.6% of interneuron spikes. |
| Song et al. (2007) | Multi-input multi-output model | Spike trains in the CA3 region of the rat hippocampus while it was performing a memory task | Input spike trains recorded from rat hippocampus | “The model predicts CA3 output on a msec-to-msec basis according to the past history (temporal pattern) of dentate input, and it does so for essentially all known physiological dentate inputs and with approximately 95% accuracy.” |
| Pillow et al. (2005) | Leaky integrate and fire model | RGC spiking in in vitro macaque retina | Artificial (“pseudo-random stimulus”) | “The fitted model predicts the detailed time structure of responses to novel stimuli, accurately capturing the interaction between the spiking history and sensory stimulus selectivity.” |
| Brette and Gerstner (2005) | Adaptive Exponential Integrate-and-fire Model | Spike timings for detailed, conductance-based neuron model | Injection of noisy synaptic conductances | “Our simple model predicts correctly the timing of 96% of the spikes (+/- 2 ms)…” |
| Rauch et al. (2003) | Integrate-and-fire model with spike-frequency-dependent adaptation/facilitation | In vitro firing of rat neocortical pyramidal cells | In vivo-like noisy current injection into the soma. | “the integrate-and-fire model with spike-frequency-dependent adaptation/facilitation is an adequate model reduction of cortical cells when the mean spike frequency response to in vivo–like currents with stationary statistics is considered.” |
| Poirazi et al. (2003) | Two-layer neural network | Detailed biophysical model of a pyramidal neuron | “An extremely varied, spatially heterogeneous set of synaptic activation patterns” | 94% of variance explained (a single-layer network explained 82%) |
| Keat et al. (2001) | Linear-non-linear | RGC spiking in salamander and rabbit isolated retinas, and retina/LGN spiking in anesthetized cat | Artificial (“random flicker stimulus”) | “The simulated spike trains are about as close to the real spike trains as the real spike trains are across trials.” |

What should we take away from these results? Without much of an understanding of the details here, my current high-level take-away is that some models do pretty well in some conditions. In many cases, though, these conditions aren’t clearly informative about in vivo behavior across the brain; and absent better functional understanding and experimental access, it’s hard to say exactly what level of predictive accuracy is required, in response to what types of inputs. There are also incentives to present research in an optimistic light, and contexts in which our models do much worse won’t have ended up on the list (though note, as well, that additional predictive accuracy need not require additional FLOP/s – it may be that we just haven’t found the right models yet).

Let’s look at some other considerations.

2.1.2.2 Dendritic computation

Some neuron models don’t include dendrites. Rather, they treat dendrites as directly relaying synaptic inputs to the soma.

A common objection to such models is that dendrites can do more than this.201 For example:

- The passive membrane properties of dendrites (e.g. resistance, capacitance, and geometry) can create nonlinear interactions between synaptic inputs.202

- Active, voltage-dependent channels can create action potentials within dendrites, some of which can backpropagate through the dendritic tree.203

Effects like these are sometimes called “dendritic computation.”204

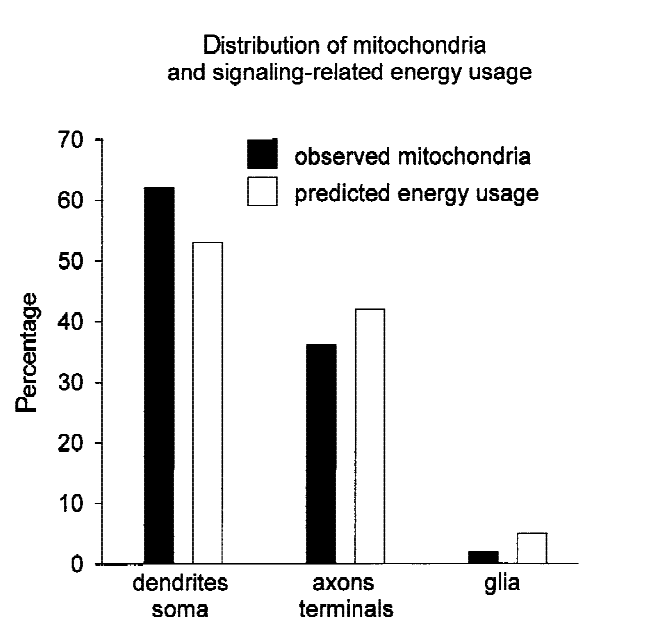

My impression is that the importance of dendritic computation to task-performance remains somewhat unclear: many results are in vitro, and some may require specific patterns of synaptic input.205 That said, one set of in vivo measurements found very active dendrites: specifically, dendritic spike rates 5-10x larger than somatic spike rates,206 which the authors take to suggest that dendritic spiking might dominate the brain’s energy consumption.207 Energy is scarce, so if true, this would suggest that dendritic spikes are important for something. And dendritic dynamics appear to be task-relevant in a number of neural circuits.208

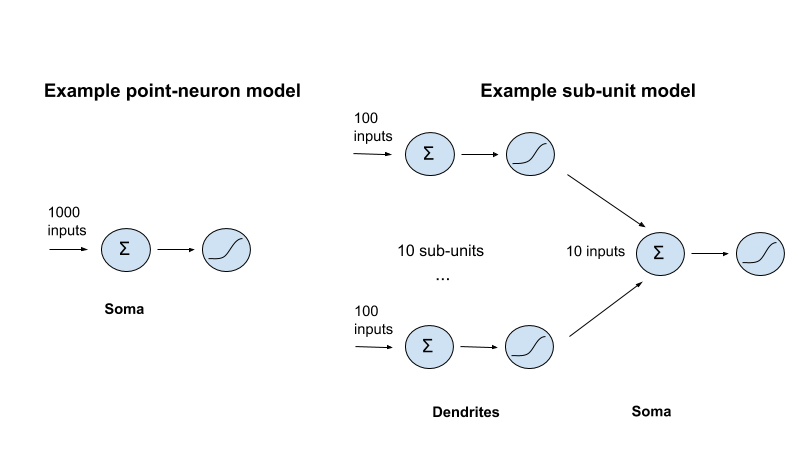

How many extra FLOP/s do you need to capture dendritic computation, relative to “point neuron models” that don’t include dendrites? Some considerations suggest fairly small increases:

- A number of experts thought that models incorporating a small number of additional dendritic sub-units or compartments would likely be adequate.209

- It may be possible to capture what matters about dendritic computation using a “point neuron” model.210

- Some active dendritic mechanisms may function to “linearize” the impact at the soma of synaptic inputs that would otherwise decay, creating an overall result that looks more like direct current injection.211

- Successful efforts to predict neuron responses to task-relevant inputs (e.g., retinal responses to natural movies) would cover dendritic computation automatically (though at least some prominent forms of dendritic computation don’t happen in the retina).212

Tree structure

One of Open Philanthropy’s technical advisors (Dr. Dario Amodei) also suggests a more general constraint. Many forms of dendritic computation, he suggests, essentially amount to non-linear operations performed on sums of subsets of a neuron’s synaptic inputs.213 Because dendrites are structured as a branching tree, the number of such non-linearities cannot exceed the number of inputs,214 and thus the FLOP/s costs they can impose are limited.215 Feedbacks created by active dendritic spiking could complicate this picture, but the tree structure will still limit communication between branches. Various experts I spoke with were sympathetic to this kind of argument,216 though one was skeptical.217

Here’s a toy illustration of this idea.218 Consider a point neuron model that adds up 1000 synaptic inputs, and then passes the sum through a non-linearity. To capture the role of dendrites, you might modify this model by adding, say, 10 dendritic subunits, each performing a non-linearity on the sum of 100 synaptic inputs, the outputs of which are summed at the soma and then passed through a final non-linearity (multi-layer approaches in this broad vicinity are fairly common).219

If we budget 1 FLOP per addition operation, and 10 per non-linearity (this is substantial overkill for certain non-linearities, like a ReLU),220 we get the following budgets:

Point neuron model:

Soma: 1000 FLOPs (additions) + 10 FLOPs (non-linearity)

Total: 1010 FLOPs

Sub-unit model:

Dendrites: 10 (subunits) × (100 FLOPs (additions) + 10 FLOPs (non-linearity))

Soma: 10 FLOPs (additions) + 10 FLOPs (non-linearity)

Total: 1120 FLOPs

The totals aren’t that different (in general, the sub-unit model requires 11 additional FLOPs per sub-unit), even if the sub-unit model can do more interesting things. And if the tree structure caps the number of non-linearities (and hence, sub-units) at the number of inputs, then the maximum increase is a factor of ~11×.221 This story would change if, for example, subunits could be fully connected, with each receiving all synaptic inputs, or all the outputs from subunits in a previous layer. But this fits poorly with a tree-structured physiology.
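The toy budgets above can be reproduced with a short sketch. The function names here are hypothetical, and the sketch assumes the same accounting as the text: 1 FLOP per addition, 10 FLOPs per non-linearity, and synaptic inputs divided evenly across sub-units.

```python
def point_neuron_flops(n_inputs, flops_per_add=1, flops_per_nonlinearity=10):
    """FLOPs per time-step for a point neuron: sum all inputs, apply one non-linearity."""
    return n_inputs * flops_per_add + flops_per_nonlinearity


def subunit_model_flops(n_inputs, n_subunits, flops_per_add=1, flops_per_nonlinearity=10):
    """FLOPs per time-step when inputs are split evenly across dendritic sub-units.

    Each sub-unit sums its share of the inputs and applies a non-linearity;
    the soma then sums the sub-unit outputs and applies a final non-linearity.
    """
    if n_inputs % n_subunits != 0:
        raise ValueError("this sketch assumes inputs divide evenly across sub-units")
    inputs_per_subunit = n_inputs // n_subunits
    dendrites = n_subunits * (inputs_per_subunit * flops_per_add + flops_per_nonlinearity)
    soma = n_subunits * flops_per_add + flops_per_nonlinearity
    return dendrites + soma


point = point_neuron_flops(1000)                     # 1010
ten_subunits = subunit_model_flops(1000, 10)         # 1120
one_per_input = subunit_model_flops(1000, 1000)      # 12010

print(point, ten_subunits, one_per_input)
print((ten_subunits - point) / 10)                   # 11.0 extra FLOPs per sub-unit
```

With one sub-unit per input (the cap suggested by the tree-structure argument), this accounting gives 12010 FLOPs, roughly 11.9 times the point-neuron total, which is the same order as the ~11× figure quoted above.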

Note, though, that the main upshot of this argument is that dendritic non-linearities won’t add that much computation relative to a model that budgets 1 FLOP per input connection per time-step. Our budget for synaptic transmission above, however, was based on spikes through synapses per second, not time-steps per synapse per second. In that context, if we assume that dendritic non-linearities need to be computed every time-step, then adding e.g. 100 or 1000 extra dendritic non-linearities per neuron could easily increase our FLOP/s budget by 100 or 1000x (see endnote for an example).222 That said, my impression is that many actual ANN models of dendritic computation use fewer sub-units, and it may be possible to avoid computing firing decisions/dendritic non-linearities every time-step as well – see brief discussion in section 2.1.2.5.
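To see how the choice of baseline drives this multiplier, here is a minimal sketch comparing a spike-based synaptic budget with dendritic non-linearities computed every time-step. All parameter values (1000 synapses per neuron, a 1 Hz average firing rate, 1 FLOP per spike through synapse, 1000 time-steps per second, 1 FLOP per non-linearity) are illustrative assumptions chosen for this sketch, not the values used in the endnote, and the function name is hypothetical.

```python
def budget_multiplier(
    n_nonlinearities,
    n_synapses=1000,                 # assumed synapses per neuron
    firing_rate_hz=1,                # assumed average input firing rate
    flops_per_spike_through_synapse=1,
    time_steps_per_second=1000,      # assumed 1 ms time-steps
    flops_per_nonlinearity=1,        # assumed cheap (e.g. ReLU-like) non-linearity
):
    """Ratio of (spike-based budget + per-time-step dendritic cost) to the spike-based budget."""
    spike_based = n_synapses * firing_rate_hz * flops_per_spike_through_synapse
    dendritic = n_nonlinearities * time_steps_per_second * flops_per_nonlinearity
    return (spike_based + dendritic) / spike_based


for n in (100, 1000):
    print(n, budget_multiplier(n))
```

Under these assumptions the per-neuron budget grows by about 101× with 100 non-linearities and about 1001× with 1000. The multiplier scales linearly with the cost assumed per non-linearity and with the number of time-steps per second, so different assumptions shift it accordingly.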

Cortical neurons as deep neural networks

What about evidence for larger FLOP/s costs from dendritic computation? One interesting example is Beniaguev et al. (2020), who found that they needed a very large deep neural network (7 layers, 128 channels per layer) to accurately predict the outputs of a detailed biophysical model of a cortical neuron, once they added conductances from a particular type of receptor (NMDA receptors).223 Without these conductances, they could do it with a much smaller network (a fully connected DNN with 128 hidden units and only one hidden layer), suggesting that it’s the dynamics introduced by NMDA-conductances in particular, as opposed to the behavior of the detailed biophysical model more broadly, that make the task hard.224

This 7-layer network requires a lot of FLOPs: roughly 2e10 FLOP/s per cell.225 Scaled up by 1e11 neurons, this would be ~2e21 FLOP/s overall. And these numbers could yet be too small: perhaps you need greater temporal/spatial resolution, greater prediction accuracy, a more complex biophysical model, etc., not to mention learning and other signaling mechanisms, in order to capture what matters.

I think that this is an interesting example of positive evidence for very high FLOP/s estimates. But I don’t treat it as strong evidence on its own. This is partly out of general caution about updating on single studies (or even a few studies) I haven’t examined in depth, especially in a field as uncertain as neuroscience. But there are also a few more specific ways these numbers could be too high:

- It may be possible to use a smaller network, given a more thorough search. Indeed, the authors suggest that this is likely, and have made data available to facilitate further efforts.226

- They focus on predicting both membrane potential and individual spikes very precisely.

- This is new (and thus far unpublished) work, and I’m not aware of other results of this kind.

The authors also suggest an interestingly concrete way to validate their hypothesis: namely, teach a cortical L5 pyramidal neuron to implement a function that this kind of 7-layer network can implement, such as classifying handwritten digits.227 If biological neurons can perform useful computational tasks thought to require very large neural networks to perform, this would indeed be very strong evidence for capacities exceeding what simple models countenance.228 That said, “X is needed to predict the behavior of Y” does not imply that “Y can do anything X can do” (consider, for example, a supercomputer and a hurricane).

Overall, I think that dendritic computation is probably the largest source of uncertainty about the FLOP/s costs of firing decisions. I find the Beniaguev et al. (2020) results suggestive of possible lurking complexity; but I’m also moved somewhat by the relative simplicity of some common abstract models of dendritic computation, by the tree-structure argument above, and by experts who thought dendrites unlikely to imply a substantial increase in FLOP/s.

2.1.2.3 Crabs, locusts, and other considerations

Here are some other considerations relevant to the FLOP/s costs of firing decisions.

Other experimentally accessible circuits

The retina is not the only circuit where we have (a) some sense of what task it’s performing, and (b) relatively good experimental access. Here are two others I looked at that seem amenable to simplified modeling.